Just to note, I think the pull request still needs some work, as I commented on the GitHub issue.

Is this it? Is there nothing more? Why is this called Omaha? I thought there were more features.

Let’s put this into core then!

Well, actually the plugin implements the Omaha protocol. There is quite a bit more to see. Unfortunately, the whole process now only works with Flatcar (or the original CoreOS).

I’d love to, but the issue here is that shelling out to ct is actually a security risk. It lets you read files from disk in the Foreman app user context. That’s not good.

Do you have an idea how to avoid this?

So you are saying the CT utility can read files (e.g. include their content in the output)? Which feature is this? (I am going to study the docs now, but it would help if you could tell me.)

Hmm, SELinux will stop most of it, but definitely not access to some sensitive Foreman files such as those under /etc/foreman. These are still readable.

The best bet would be adding a flag to CT that disables this feature.

Alternatively, we could build a smart proxy service that does this on a separate box. We would have the same problem there, but we could tighten SELinux down so hard that nothing is actually possible.

Please don’t say containers, containers do not contain. You need SELinux around them anyway…

Did you mean storage-files-contents-local ?

Wait, I see the utility now has a specific option: "-d, --files-dir string: allow embedding local files from this directory". File paths must be relative, not absolute. Therefore this is probably safe now.

- local (string): the path to a local file, relative to the --files-dir directory. When using local files, the --files-dir flag must be passed to ct. The file contents are included in the generated config.

What I mean is we can create a dedicated directory like /var/lib/foreman/ct and allow reading from it via SELinux. The utility will always be spawned with --files-dir /var/lib/foreman/ct, so users can’t read beyond this directory.
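A minimal sketch of how that spawn could look, assuming ct reads the config on stdin and writes Ignition JSON to stdout. The constant and method names (`CT_FILES_DIR`, `ct_argv`, `transpile`) are hypothetical and not part of Foreman; only the `--files-dir` flag and the directory come from the discussion above.

```ruby
require "open3"

# Hypothetical constant: the dedicated directory proposed above. SELinux
# policy would still have to restrict reads to this tree.
CT_FILES_DIR = "/var/lib/foreman/ct"

# Build the argv for ct. The flag is always present and is never taken
# from user input, so a template cannot widen the readable directory.
def ct_argv(files_dir = CT_FILES_DIR, ct_binary = "ct")
  [ct_binary, "--files-dir", files_dir]
end

# Feed the rendered config on stdin and return what ct prints on stdout.
# Passing the command as separate arguments means no shell is involved.
# Assumption: ct reads stdin and writes stdout when no file is given.
def transpile(config_yaml, files_dir = CT_FILES_DIR)
  stdout, stderr, status = Open3.capture3(*ct_argv(files_dir), stdin_data: config_yaml)
  raise "ct failed: #{stderr}" unless status.success?
  stdout
end
```

Passing the command as an array rather than a single string avoids shell interpretation of the template text, which is a separate concern from the file-reading issue but worth keeping closed as well.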

Sure, feel free to steal these snippets and put them into core:

Thanks. Working on it. Do you also have an example template I could include in my PR (for Flatcar/Container)? Something we can start with and refine later on.

Yes. Here you go: https://gist.github.com/timogoebel/215d78ecbb6ee4a237b42b9b824add3b

Note, it’s quite opinionated. Blossom is something like your ufacter, but it can also retrieve certificates from a puppetCA. The omaha smart proxy code is required if you want to use the Omaha plugin for updates.

Thanks, I think I will keep the name “CoreOS”, as Flatcar is one of this kind. Similarly, we have RHEL and CentOS, or Fedora and RHEL, or Oracle EL. If there turn out to be differences, we should probably follow the same pattern as we do for other provisioning templates and use ERB if statements.

@TimoGoebel can you explain this existing template to me:

Why does this have a #cloud-config line? Is this a bug? Ignition does not have this. Or is this actually a cloud-init template?

The syntax seems to be quite different from what you have now.

Before the original CoreOS added support for Ignition, it used its own implementation of cloud-init that did not require any form of preprocessing: https://github.com/coreos/coreos-cloudinit

I believe our templates were never updated to support Ignition.

That’s what I thought, thanks.

https://github.com/theforeman/foreman/pull/8042#pullrequestreview-503963129

I think something here is not working as expected, or I was not able to apply your commits cleanly on Foreman 2.1.3: the variable $arch is unresolved when the VM boots up, so it is not able to find its kernel.

What I also wanted to say: we got Foreman to cover our use case without those patches, with two workarounds:

- Cancel the build after the first power-up to stop the install loop.

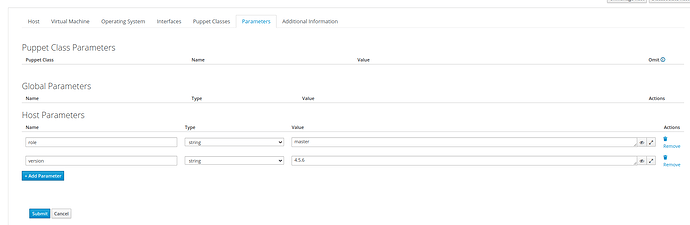

- A working OpenShift install now depends on host variables for the RHCOS VMs because of the PXE template we used:

DEFAULT pxeboot

TIMEOUT 20

PROMPT 0

LABEL pxeboot

KERNEL boot/rhcos-<%= @host.params['version'] %>-x86_64-installer-kernel-x86_64

APPEND ignition.platform.id=vmware console=tty0 console=ttyS0 ip=<%= @host.ip %>::<%= @host.subnet.gateway %>:<%= @host.subnet.mask %>:<%= @host.name %>:<%= @host.primary_interface.identifier %>:none rd.neednet=1 initrd=boot/rhcos-<%= @host.params['version'] %>-x86_64-installer-initramfs.x86_64.img nameserver=<%= @host.subnet.dns_primary %> nameserver=<%= @host.subnet.dns_secondary %> coreos.autologin=yes coreos.first_boot=1 coreos.inst=yes coreos.inst.callhome_url=<%= foreman_url('provision')%> coreos.inst.install_dev=sda coreos.inst.image_url=http://foremantest71.domain.com:8089/pxeboot/rhcos/rhcos-<%= @host.params['version'] %>-x86_64-metal.x86_64.raw.gz coreos.inst.ignition_url=http://foremantest71.domain.com:8089/pxeboot/rhcos/<%= @host.params['role'] %>.ign

Because we provision our VMs in Foreman via Ansible, and at that point we already know which version we want and whether a node is a worker, master, or bootstrap node, it is no problem to set those variables automatically.

Because I did not get your first patch working, it was necessary to download the initramfs, kernel, and metal.gz in the right version via Ansible to the Foreman TFTP and HTTP directories. Doable, and we did it.

What I also noticed is that:

<%= @host.primary_interface.identifier %>

does not give us the identifier of our primary interface (it should be ens192). Instead it gives us an empty value. Maybe this is not accessible in a PXE template?
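An empty identifier silently produces a malformed `ip=` argument in the APPEND line above, because the dracut syntax is positional: `ip=<client-IP>:[<peer>]:<gateway-IP>:<netmask>:<hostname>:<interface>:<autoconf>`. The following sketch assembles the argument and fails loudly when a field is empty. The helper name `dracut_ip_arg` is hypothetical and not a Foreman macro, and IPv6 addresses (which need brackets) are not handled.

```ruby
# Build a dracut static "ip=" kernel argument with an empty peer field,
# as in the PXE template above. Raises if any required field is empty,
# so a missing interface identifier is caught before the host boots.
def dracut_ip_arg(ip:, gateway:, netmask:, hostname:, interface:, autoconf: "none")
  fields = { ip: ip, gateway: gateway, netmask: netmask,
             hostname: hostname, interface: interface }
  empty = fields.select { |_, value| value.to_s.strip.empty? }.keys
  raise ArgumentError, "empty field(s): #{empty.join(', ')}" unless empty.empty?

  # The second position (peer) is intentionally left empty.
  "ip=" + [ip, "", gateway, netmask, hostname, interface, autoconf].join(":")
end

# Example values are placeholders, not taken from the thread.
puts dracut_ip_arg(ip: "192.0.2.10", gateway: "192.0.2.1",
                   netmask: "255.255.255.0", hostname: "node1.example.com",
                   interface: "ens192")
```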

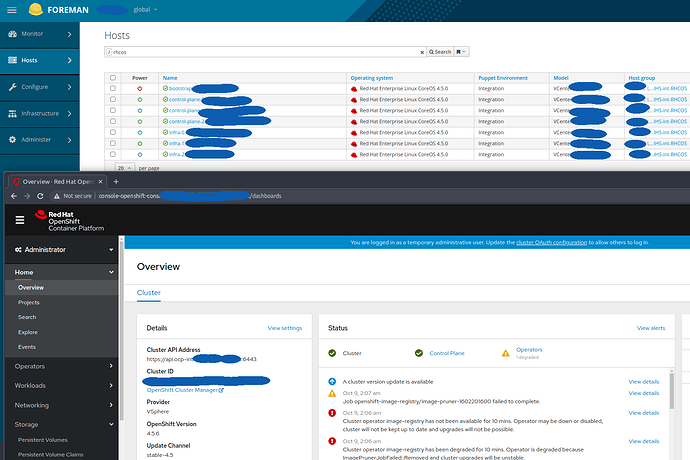

With that (and the preexisting OpenShift requirements such as DNS and load balancers), we got a working cluster deployed on VMware via Foreman and Ansible.

With your first patch working, a lot of Ansible could be removed on our side.

I fixed this, I’d appreciate retesting.

Nice, but it’s ugly. Please help me with testing those patches so we can get this into 2.3, which is already knocking on the door!

In my opinion, testing of the first patch is done. It needs two small changes to get a working OS/installation media for FCOS, but then it should be good to go.

For the second patch I still need some time to patch and test.

Sorry, I have been busy working on other company projects.

But I noticed that OpenShift 4.6 has been released. They changed the file layout in the repo from "installer" to "live", which means this breaks with OpenShift 4.6:

https://github.com/theforeman/foreman/pull/8042/files/28acca7bdc0731dfa9ddea95fa322e667bafe710#diff-a171b4db15dfa9c25b2a3d2ee9a720ebaed60cdc0e35d27f9922cf263ca13801

https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.6/4.6.1/rhcos-live-initramfs.x86_64.img

https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.6/4.6.1/rhcos-live-kernel-x86_64

I think it’s good that it was not merged yet.
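One way a template or helper could cope with both layouts is to pick the file names by version. This is only a sketch: the method name `rhcos_boot_files` is hypothetical, the "live" names are taken from the 4.6.1 mirror URLs above, the "installer" names from the PXE template earlier in this thread, and the assumption is that 4.6 is the first release using the new layout.

```ruby
# Return kernel and initramfs file names for a given RHCOS version.
# Assumption: versions 4.6 and later use the unversioned "live" names,
# earlier versions use the versioned "installer" names.
def rhcos_boot_files(version, arch = "x86_64")
  parts = version.split(".")
  major = parts[0].to_i
  minor = parts[1].to_i # nil.to_i is 0, so "4" is treated as 4.0

  if ([major, minor] <=> [4, 6]) >= 0
    { kernel: "rhcos-live-kernel-#{arch}",
      initramfs: "rhcos-live-initramfs.#{arch}.img" }
  else
    { kernel: "rhcos-#{version}-#{arch}-installer-kernel-#{arch}",
      initramfs: "rhcos-#{version}-#{arch}-installer-initramfs.#{arch}.img" }
  end
end
```

Comparing `[major, minor]` as an array avoids string comparison pitfalls such as "4.10" sorting before "4.6".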