Lately I’ve started working on the console and the parts supporting it. A big stream of ideas rushes in when I fantasize about what the next bits could be that we add. I hope this can be the place where we discuss other possible features, needs, hopes, dreams & aspirations.

So far, these are the topics I’m either working on or would love to get some feedback on. Of course, as always, I’m open to discussing things I haven’t covered in this introduction:

- VNC: remove VNC from the javascript/vendors folder so that we always have a chance to use the latest and greatest version (and, IMO a big plus, increase our coverage through the refactor)

- SPICE: the obvious runner-up. Change from the (outdated) Ruby gem to an npm package, which probably requires a new package to consume the latest and greatest code. The latest official release from the html5-spice team, however, is 2 years old. I’m trying to get in contact with that community to better understand the status of that project, how releases will roll out, etc. There seem to be contributions from time to time.

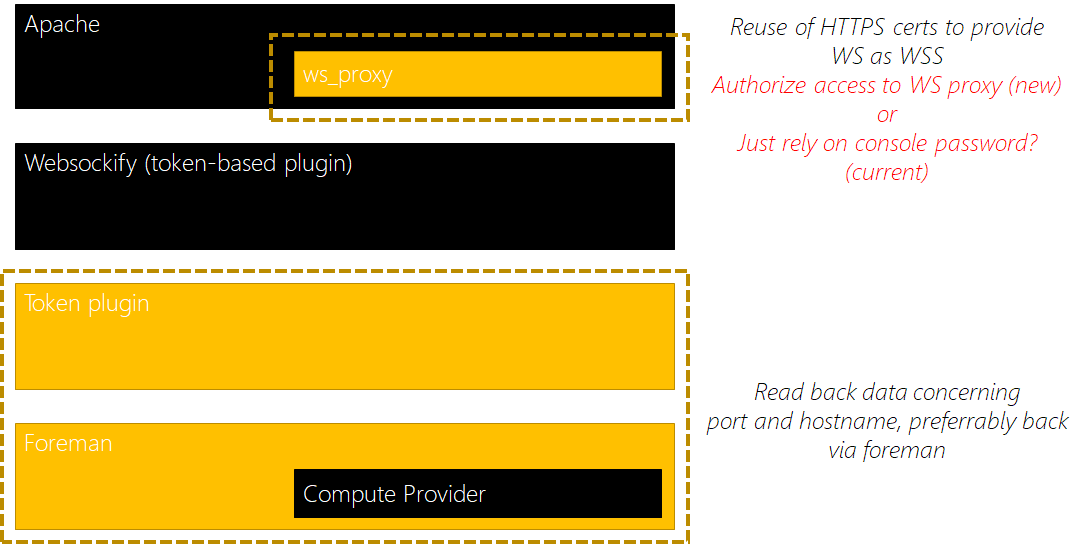

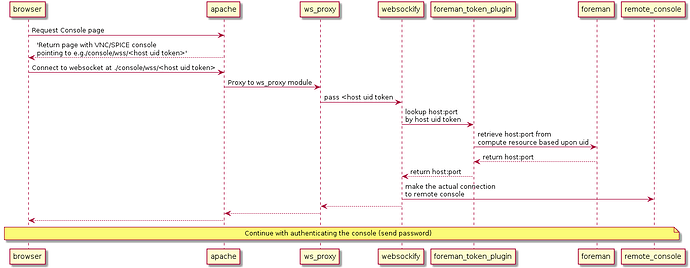

- websockify: currently several ports need to be open to access the console remotely. So far I get push-back from my IT on this (not unreasonable IMO), and it leads to even more work if we’re behind a firewall. It would be great if we adapted this to sit behind a reverse proxy which passes through to websockify’s token-based target selection. I think we’d get away from the port-selection process and the security issues that come with the current implementation.

  I will draw out a more detailed design of this implementation, based on what (I think) I’ve learned so far.

- websockify: remove the code from javascript/vendors and move to an OS package which provides this instead

- react: of course we’d like to refactor this further into a React component. There’s already somebody working on a React console; perhaps this can be used for our needs as well

- SSH: if we have a console package, we could probably enhance it to also support plain-old SSH

- UX: I would also like to discuss additional UX improvement opportunities:

  - “in your face”-kinda animations & logging to directly show what’s going on in terms of status and connectivity (…aka the creative fun stuff). I have some ideas here, so I will probably follow up with some mock-ups

  - automatically reconnect on connection loss

  - copy-paste of clipboard content (as far as I can tell, noVNC supports it)

  - popping out the console as an overlay; again, I will try to draw up a mock-up

  - possibility to support multi-monitor set-ups

- General: wouldn’t it also be great if we could configure whether a server supports VNC? Currently the console is supported for a few compute resources, but some bare-metal systems provide a VNC endpoint as well (it could be that this only works under very specific conditions, but it might be worth further investigation).
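
To make the websockify idea a bit more concrete, here is a minimal sketch of what token-based target selection boils down to: the proxy exposes a single port, the client sends a token in the query string, and the token is mapped to the real console host and port. This is not websockify’s actual code. The function names `parse_token_lines` and `resolve_target` are made up for illustration, and the `token: host:port` line format and the `?token=` query parameter reflect my understanding of websockify’s token file plugin, so treat both as assumptions to verify.

```python
from urllib.parse import urlsplit, parse_qs


def parse_token_lines(lines):
    """Build a token -> (host, port) map from 'token: host:port' lines.

    Hypothetical helper; the line format is assumed to match what
    websockify's token file plugin expects.
    """
    targets = {}
    for raw in lines:
        line = raw.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        token, _, target = line.partition(": ")
        # Split on the last colon so the host part is kept intact.
        host, _, port = target.strip().rpartition(":")
        if not token or not host or not port.isdigit():
            raise ValueError(f"malformed token line: {raw!r}")
        targets[token.strip()] = (host, int(port))
    return targets


def resolve_target(request_path, targets):
    """Return the (host, port) for the token in the request path, or None."""
    query = parse_qs(urlsplit(request_path).query)
    tokens = query.get("token")
    if not tokens:
        return None  # no token supplied: refuse rather than guess a target
    return targets.get(tokens[0])
```

With something like this, the browser would only ever talk to the reverse proxy on one port, and an unknown or missing token simply resolves to nothing instead of exposing a console port.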

PS: this is my first post on RFC, so I’m not sure if I’m doing this as expected. Or should I create separate RFCs for these? Some of these are probably direct action points that could be handled in the issue tracker instead, but as the title says, I wanted to consolidate everything I have in my head so far regarding the console.