Problem:

Is there a way to set VM configuration parameters (e.g. disk.EnableUUID) for VMs created by Foreman on vSphere Compute Resources? I believe the VM is created through the tfm-rubygem-fog-vsphere plugin. I am looking for some type of configuration or property file that could be modified. If the feature does not exist, maybe it could be added in a future release.

Expected outcome:

I am looking for some type of configuration or property file that could be modified.

Foreman and Proxy versions:

Foreman 1.22.0.33-1

Foreman and Proxy plugin versions:

tfm-rubygem-fog-vsphere 3.2.1-1

Distribution and version:

Red Hat Satellite 6.6.1 on RHEL 7.7

Other relevant data:

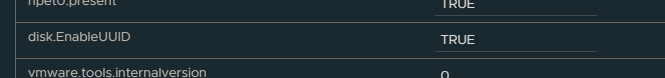

I’ve also hit this issue. I want to set disk.EnableUUID to true in the advanced properties but can’t see a way to do it. There is an open issue for it, but it hasn’t been updated in 3 years.

I have never looked at Ruby before, but I had a search through this code and Foreman. Maybe someone with more knowledge can look at this. It looks to me like a change is required in the fog-vsphere code before anything can be done in Foreman.

On the Foreman side:

```ruby
def create_vm(args = { })
  vm = nil
  test_connection
  return unless errors.empty?

  args = parse_networks(args)
  args = args.with_indifferent_access
  if args[:provision_method] == 'image'
    clone_vm(args)
  else
    vm = new_vm(args)
    vm.firmware = 'bios' if vm.firmware == 'automatic'
    vm.save # actually creates the VM
  end
  # ...
end

def new_vm(args = {})
  args = parse_args args
  opts = vm_instance_defaults.symbolize_keys.merge(args.symbolize_keys).deep_symbolize_keys
  client.servers.new opts # returns a Fog::Vsphere::Compute::Server
end
```

- Gets the connection to the server with `Fog::Compute.new` (provider `vsphere`)
- Uses this `Fog::Vsphere::Compute` connection to get `Fog::Vsphere::Compute::Servers`
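To make the merge in `new_vm` concrete: `merge` lets the right-hand hash win, so anything passed in `args` overrides `vm_instance_defaults`. Here is a minimal plain-Ruby sketch of that precedence, assuming top-level keys only (the real code also deep-symbolizes nested hashes via ActiveSupport, which this sketch does not reproduce). `build_opts` and the default values are made up for illustration.

```ruby
# Sketch of the option-merging step in new_vm, in plain Ruby (no ActiveSupport).
# These defaults are hypothetical placeholders, not Foreman's real values.
DEFAULTS = {
  'cpus' => 1,
  'memory_mb' => 2048,
  'firmware' => 'automatic'
}.freeze

# transform_keys(&:to_sym) stands in for symbolize_keys; on key collisions
# the hash passed to merge wins, so user-supplied args override the defaults.
def build_opts(defaults, args)
  defaults.transform_keys(&:to_sym).merge(args.transform_keys(&:to_sym))
end

opts = build_opts(DEFAULTS, { 'cpus' => 4, 'name' => 'web01' })
p opts
```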

In fog-vsphere:

- Uses the `new` method to get an instance of `Fog::Vsphere::Compute::Server` that can be manipulated before the VM is created (`vm.save`)
- Uses the `save` method to create the actual VM, which in turn calls fog-vsphere’s `create_vm` method

Now here is where it gets interesting. It looks like the `create_vm` method accepts an option for these extra parameters called `extra_config`, which is meant exactly for this: adding custom parameters.

```ruby
def create_vm(attributes = {})
  # ...
  vm_cfg = {
    name: attributes[:name],
    annotation: attributes[:annotation],
    guestId: attributes[:guest_id],
    version: attributes[:hardware_version],
    files: { vmPathName: vm_path_name(attributes) },
    numCPUs: attributes[:cpus],
    numCoresPerSocket: attributes[:corespersocket],
    memoryMB: attributes[:memory_mb],
    deviceChange: device_change(attributes),
    extraConfig: extra_config(attributes) # custom VMX parameters
  }
  # ...
end
```
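In the vSphere API, `extraConfig` is a list of key/value option pairs rather than a Ruby hash. Here is a minimal sketch of such a conversion, assuming the hash form used further down this thread (`{"disk.EnableUUID" => "TRUE"}`). `to_extra_config` is a hypothetical helper written for illustration; it is not fog-vsphere’s actual `extra_config` method.

```ruby
# Hypothetical helper: turn a Ruby hash of VMX options into the
# [{ key:, value: }] shape that vSphere's extraConfig (OptionValue[]) uses.
# Assumption: values are sent as strings, so everything is stringified.
def to_extra_config(options)
  options.map { |key, value| { key: key.to_s, value: value.to_s } }
end

pairs = to_extra_config({ 'disk.EnableUUID' => 'TRUE', 'efi.quickBoot.enabled' => false })
pairs.each { |pair| puts "#{pair[:key]} = #{pair[:value]}" }
```

Note that a Ruby `false` becomes the lowercase string `"false"` here; if you want the exact `"FALSE"` spelling used in this thread, pass it as a string.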

But `Fog::Vsphere::Compute::Server` doesn’t have this attribute, and hence Foreman can’t set it.

If this attribute were available on the `Fog::Vsphere::Compute::Server` class, I think Foreman could set it and it would end up in the VM.

Can someone check whether I am right? If so, I can make a one-line PR in fog-vsphere so that at least there is an option for Foreman to implement something.

Cheers

```ruby
server.extra_config = { "disk.EnableUUID" => "TRUE" }
```

This results in the parameter being set on the VM.

Hi, @dave93cab!

Awesome work, the PR is definitely a great direction for supporting the parameter.

I’ll review it, and it should not be hard to get it in.

Enabling it on the Foreman side will be a bit trickier, though: do we want the extra parameters to be predefined, accepting only specific attributes we allow, or do we want them free-form and let users define these attributes?

I’d be up for the more rigid definition, allowing only specific attributes, but we would need to allow each one manually. With the right model, though, it should be quite easy to add another attribute.

Since VMware has a lot of hidden parameters that are only used in very specific situations, maybe leaving this open would be the better option. It’s for advanced use anyway, correct?

I’m sure there are many esoteric uses for them, so my gut feeling would be to leave them open too.
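For what it’s worth, the two options discussed above (an allowlist versus free-form keys) differ only in one validation step. A rough sketch follows; `ALLOWED_KEYS`, `validate_extra_config` and the `strict:` flag are hypothetical names for illustration, not anything that exists in Foreman, and `tools.syncTime` is just an example key that is not on the list.

```ruby
# Sketch of the two designs discussed above. The allowlist contents and
# the method name are hypothetical, chosen only for illustration.
ALLOWED_KEYS = %w[disk.EnableUUID efi.quickBoot.enabled snapshot.alwaysAllowNative].freeze

# Strict mode rejects any key not on the allowlist; free-form mode accepts everything.
def validate_extra_config(options, strict:)
  return options unless strict

  unknown = options.keys - ALLOWED_KEYS
  raise ArgumentError, "unsupported keys: #{unknown.join(', ')}" unless unknown.empty?

  options
end

opts = { 'disk.EnableUUID' => 'TRUE', 'tools.syncTime' => 'FALSE' }
validate_extra_config(opts, strict: false) # accepted as-is
begin
  validate_extra_config(opts, strict: true)
rescue ArgumentError => e
  puts e.message # unsupported keys: tools.syncTime
end
```

The strict mode gives earlier, clearer errors; the free-form mode avoids having to add every obscure VMware parameter by hand, which is the trade-off raised in the replies.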

Our virtualization team wants an advanced vSphere param set at host creation: snapshot.alwaysAllowNative = TRUE.

Your PR @dave93cab seems to be a great step towards being able to set such params via Foreman.

Since you seem to have solved your disk.EnableUUID problem, could you point me in the right direction on where to start if I’d like to pass such a parameter from Foreman to vSphere?

From what I’ve read so far, I’m guessing it is not yet possible to just add something like `"extra_config": { "snapshot.alwaysAllowNative": "true" }` to a host object passed to the Foreman API?

Any hints are much appreciated.

Hi, I’d like to use the disk.EnableUUID option but haven’t been able to figure out how to set it in Foreman.

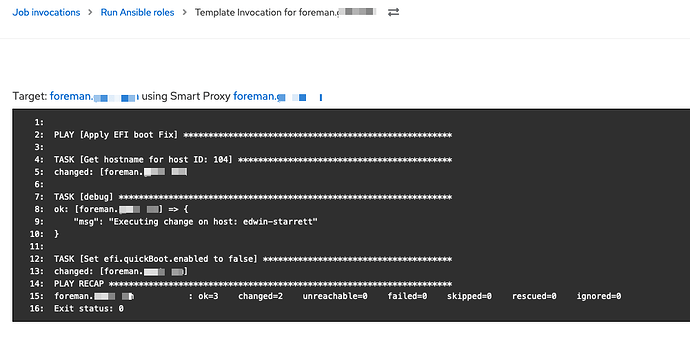

Curious about this as well. Is this feature exposed over the Foreman REST API, or accessible as a parameter under compute_attributes in the theforeman.foreman.host Ansible module? I’m looking to be able to set "efi.quickBoot.enabled" = "FALSE" for VMware virtual machines.

Ha! I see that you too are running into this bug: Bug #33749: ESXi 7.0 U2 and later (VM version 19) EFI Boot Failure - Foreman

So yes, count us in for support in being able to specify these parameters through the Foreman/Satellite UI.

I am also interested! I could do this using Ansible, but that makes the whole process more complex than it needs to be:

- Build the VM in Foreman/Satellite
- At post-build, kick off an Ansible job to set `"efi.quickBoot.enabled" = "FALSE"` before the final boot is performed, to prevent the boot issue mentioned in the comment above.

I guess this’ll do for now until a proper fix comes into play.

My workflow is manual for now (1. create the VM/host in Foreman, 2. run the job afterwards), since I don’t provision a ton of VMs in my lab, but I may end up writing a small role that does both the provisioning and this “fix” anyway. I can always post what I did somewhere if it helps someone else. Let me know.

The way I have this solved currently is by using Ansible to handle the creation of new hosts and powering up the system. This allows me to create the host, set the parameters as needed, then kick off the boot process.

The playbook I’m using:

```yaml
---
- name: Provision new virtual host
  hosts: all:!localhost
  gather_facts: false
  vars_files:
    - "creds.yml"
  tasks:
    - name: Check if host exists on all platforms
      delegate_to: localhost
      block:
        - name: Check if host exists in Foreman
          register: foreman_host_exists
          theforeman.foreman.host_info:
            server_url: "{{ foreman_url }}"
            username: "{{ foreman_username }}"
            password: "{{ foreman_password }}"
            name: "{{ inventory_hostname }}.example.org"

        - name: Check if host exists in vSphere
          register: vsphere_host_exists
          vmware.vmware_rest.vcenter_vm_info:
            vcenter_hostname: "{{ vcenter_hostname }}"
            vcenter_username: "{{ vcenter_username }}"
            vcenter_password: "{{ vcenter_password }}"
            vcenter_validate_certs: false
            names: "{{ inventory_hostname }}"

    - name: Create host
      when:
        - foreman_host_exists.host == None
        - vsphere_host_exists.value | length == 0
      delegate_to: localhost
      block:
        - name: Create host in Foreman
          register: foreman_host
          theforeman.foreman.host:
            server_url: "{{ foreman_url }}"
            username: "{{ foreman_username }}"
            password: "{{ foreman_password }}"
            organization: "example"
            location: "{{ hostvars[inventory_hostname].location }}"
            name: "{{ inventory_hostname }}.example.org"
            hostgroup: "{{ hostvars[inventory_hostname].hostgroup }}"
            compute_profile: "{{ hostvars[inventory_hostname].compute_profile }}"
            build: true
            parameters: "{{ hostvars[inventory_hostname].parameters | default(omit) }}"

        - name: Configure VM in vCenter
          community.vmware.vmware_guest:
            hostname: "{{ vcenter_hostname }}"
            username: "{{ vcenter_username }}"
            password: "{{ vcenter_password }}"
            validate_certs: false
            datacenter: "{{ hostvars[inventory_hostname].datacenter }}"
            name: "{{ inventory_hostname }}"
            advanced_settings:
              - key: "disk.EnableUUID"
                value: "TRUE"
              - key: "efi.quickBoot.enabled"
                value: "FALSE"

        - name: Add vGPU to VDI host
          when: hostvars[inventory_hostname].vgpu_profile is defined
          community.vmware.vmware_guest_vgpu:
            hostname: "{{ vcenter_hostname }}"
            username: "{{ vcenter_username }}"
            password: "{{ vcenter_password }}"
            validate_certs: false
            datacenter: "{{ hostvars[inventory_hostname].datacenter }}"
            name: "{{ inventory_hostname }}"
            vgpu: "{{ hostvars[inventory_hostname].vgpu_profile }}"

        - name: Power on host for provisioning
          theforeman.foreman.host_power:
            server_url: "{{ foreman_url }}"
            username: "{{ foreman_username }}"
            password: "{{ foreman_password }}"
            hostname: "{{ inventory_hostname }}.example.org"
            state: "on"

      rescue:
        - name: Remove host from Foreman
          theforeman.foreman.host:
            server_url: "{{ foreman_url }}"
            username: "{{ foreman_username }}"
            password: "{{ foreman_password }}"
            name: "{{ inventory_hostname }}.example.org"
            state: "absent"

        - name: Check if host is in vSphere
          register: vsphere_failed_host_exists
          vmware.vmware_rest.vcenter_vm_info:
            vcenter_hostname: "{{ vcenter_hostname }}"
            vcenter_username: "{{ vcenter_username }}"
            vcenter_password: "{{ vcenter_password }}"
            vcenter_validate_certs: false
            names: "{{ inventory_hostname }}"

        - name: Remove host from vSphere
          when: vsphere_failed_host_exists.value | length != 0
          vmware.vmware_rest.vcenter_vm:
            vcenter_hostname: "{{ vcenter_hostname }}"
            vcenter_username: "{{ vcenter_username }}"
            vcenter_password: "{{ vcenter_password }}"
            vcenter_validate_certs: false
            state: "absent"
            vm: "{{ vsphere_failed_host_exists.value[0].vm }}"
```
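The ordering in the playbook matters: as it is written, the advanced settings are applied before the first power-on, and the `rescue` section cleans up both Foreman and vSphere if anything fails. Below is a small Ruby sketch of that control flow, with hypothetical step names and lambdas standing in for the Ansible tasks; unlike the playbook, it does not check whether the VM exists before removing it.

```ruby
# Sketch of the playbook's control flow: configure the VM before its first
# boot, and roll back both Foreman and vSphere if any step fails.
# Step names are hypothetical labels; each step is a plain lambda here.
def provision(steps, rollback)
  steps.each { |name, step| step.call(name) }
  :provisioned
rescue StandardError => e
  rollback.each { |name, step| step.call(name) }
  "rolled back after: #{e.message}"
end

log = []
record = ->(name) { log << name }

# Simulate a failure while applying the advanced settings.
fail_step = lambda do |name|
  log << name
  raise 'vCenter unreachable'
end

steps = {
  'create host in Foreman' => record,
  'set advanced settings in vCenter' => fail_step,
  'power on host' => record # never reached, because power-on comes last
}
rollback = {
  'remove host from Foreman' => record,
  'remove VM from vSphere' => record
}

result = provision(steps, rollback)
puts result
puts log
```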

Not sure if this helps at all, @moritzdietz and @metalcated (I never saw the notifications for later comments). However, getting this all baked directly into Foreman and the Ansible collection would be fantastic!

We’ve written a plugin at Linköping University to set additional VMX parameters, like disk.enableUUID, and to attach additional devices (currently only vTPMs), if it’s of any help.

Thanks for both posts above; I am already using the VMware Advanced Plugin. Again though, thank you!
