Problem:

Occasionally, we’ll have jobs from foreman return a 404 on their dynflow task, during a remote execution run. I can queue up a few jobs against the same proxy, and some will work, while others will go missing. During this time, I can see the 404’d job id in the dynflow console, usually in a waiting or running state.

Expected outcome:

The job executes and completes with any return.

Foreman and Proxy versions:

Foreman

[root@10-222-206-158 jsparrow]# rpm -qa | grep foreman

rubygem-hammer_cli_foreman_remote_execution-0.3.0-1.el9.noarch

rubygem-hammer_cli_foreman_ssh-0.0.3-1.el9.noarch

rubygem-foreman_vault-2.0.0-1.fm3_11.el9.noarch

rubygem-foreman_kubevirt-0.2.0-1.fm3_11.el9.noarch

rubygem-foreman_statistics-2.1.0-3.fm3_11.el9.noarch

rubygem-hammer_cli_foreman_kubevirt-0.2.0-1.fm3_11.el9.noarch

rubygem-hammer_cli_foreman_puppet-0.1.0-1.fm3_11.el9.noarch

rubygem-hammer_cli_foreman_tasks-0.0.21-1.fm3_11.el9.noarch

foreman-obsolete-packages-1.10-1.el9.noarch

foreman-release-3.13.0-1.el9.noarch

rubygem-hammer_cli_foreman-3.13.0-1.el9.noarch

foreman-selinux-3.13.0-1.el9.noarch

foreman-3.13.0-1.el9.noarch

rubygem-foreman-tasks-10.0.1-1.fm3_13.el9.noarch

rubygem-foreman_remote_execution-14.0.2-1.fm3_13.el9.noarch

rubygem-foreman_salt-17.0.0-1.fm3_13.el9.noarch

foreman-dynflow-sidekiq-3.13.0-1.el9.noarch

foreman-libvirt-3.13.0-1.el9.noarch

foreman-ovirt-3.13.0-1.el9.noarch

foreman-postgresql-3.13.0-1.el9.noarch

foreman-redis-3.13.0-1.el9.noarch

foreman-service-3.13.0-1.el9.noarch

foreman-vmware-3.13.0-1.el9.noarch

rubygem-foreman_puppet-8.0.0-1.fm3_13.el9.noarch

rubygem-foreman_templates-10.0.1-1.fm3_13.el9.noarch

foreman-cli-3.13.0-1.el9.noarch

foreman-installer-3.13.0-1.el9.noarch

foreman-proxy-3.13.0-1.el9.noarch

rubygem-foreman_maintain-1.8.1-2.el9.noarch

Proxy:

foreman-installer-3.11.2-1.el8.noarch

foreman-release-3.11.5-1.el8.noarch

rubygem-foreman_remote_execution-13.1.0-1.fm3_11.el8.noarch

rubygem-foreman_salt-16.0.3-2.fm3_11.el8.noarch

foreman-proxy-3.11.2-1.el8.noarch

foreman-selinux-3.11.2-1.el8.noarch

rubygem-foreman-tasks-9.1.1-1.fm3_11.el8.noarch

foreman-3.11.2-1.el8.noarch

Foreman and Proxy plugin versions:

See above.

Distribution and version:

Foreman:

AlmaLinux release 9.5 (Teal Serval)

NAME="AlmaLinux"

VERSION="9.5 (Teal Serval)"

ID="almalinux"

ID_LIKE="rhel centos fedora"

VERSION_ID="9.5"

PLATFORM_ID="platform:el9"

PRETTY_NAME="AlmaLinux 9.5 (Teal Serval)"

ANSI_COLOR="0;34"

LOGO="fedora-logo-icon"

CPE_NAME="cpe:/o:almalinux:almalinux:9::baseos"

HOME_URL="https://almalinux.org/"

DOCUMENTATION_URL="https://wiki.almalinux.org/"

BUG_REPORT_URL="https://bugs.almalinux.org/"

ALMALINUX_MANTISBT_PROJECT="AlmaLinux-9"

ALMALINUX_MANTISBT_PROJECT_VERSION="9.5"

REDHAT_SUPPORT_PRODUCT="AlmaLinux"

REDHAT_SUPPORT_PRODUCT_VERSION="9.5"

SUPPORT_END=2032-06-01

AlmaLinux release 9.5 (Teal Serval)

AlmaLinux release 9.5 (Teal Serval)

Proxy:

$ cat /etc/*release

AlmaLinux release 8.10 (Cerulean Leopard)

AlmaLinux release 8.10 (Cerulean Leopard)

NAME="AlmaLinux"

VERSION="8.10 (Cerulean Leopard)"

ID="almalinux"

ID_LIKE="rhel centos fedora"

VERSION_ID="8.10"

PLATFORM_ID="platform:el8"

PRETTY_NAME="AlmaLinux 8.10 (Cerulean Leopard)"

ANSI_COLOR="0;34"

LOGO="fedora-logo-icon"

CPE_NAME="cpe:/o:almalinux:almalinux:8::baseos"

HOME_URL="https://almalinux.org/"

DOCUMENTATION_URL="https://wiki.almalinux.org/"

BUG_REPORT_URL="https://bugs.almalinux.org/"

ALMALINUX_MANTISBT_PROJECT="AlmaLinux-8"

ALMALINUX_MANTISBT_PROJECT_VERSION="8.10"

REDHAT_SUPPORT_PRODUCT="AlmaLinux"

REDHAT_SUPPORT_PRODUCT_VERSION="8.10"

SUPPORT_END=2029-06-01

AlmaLinux release 8.10 (Cerulean Leopard)

AlmaLinux release 8.10 (Cerulean Leopard)

Other relevant data:

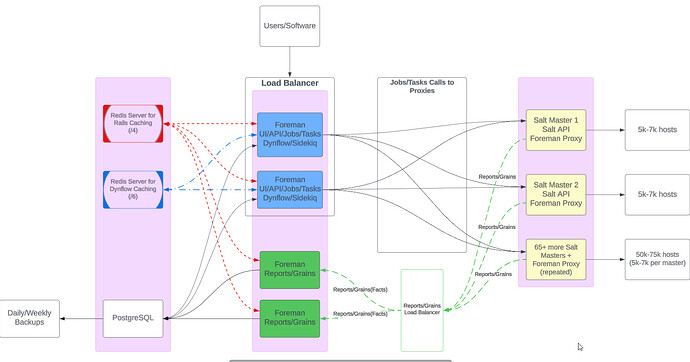

We only use jobs/tasks for salt calls to our masters.

Here are the logs of a job, with multiple tasks where some are successful and one in particular fails:

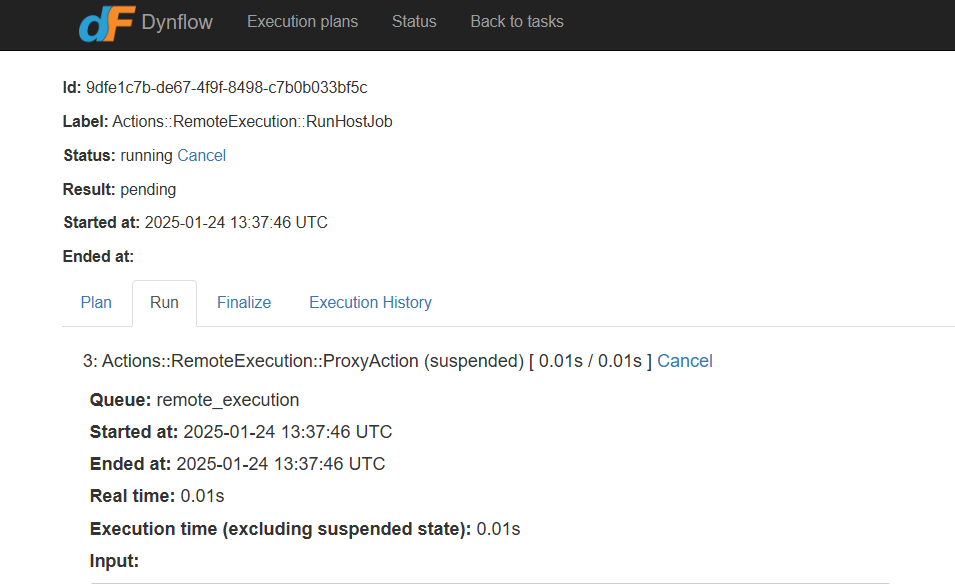

2025-01-24T13:41:04 776caca3 [I] Started GET /dynflow/tasks/9dfe1c7b-de67-4f9f-8498-c7b0b033bf5c/status

2025-01-24T13:41:04 776caca3 [I] Finished GET /dynflow/tasks/9dfe1c7b-de67-4f9f-8498-c7b0b033bf5c/status with 404 (2.52 ms)

2025-01-24T13:41:06 532c5c6c [I] Started GET /dynflow/tasks/9dfe1c7b-de67-4f9f-8498-c7b0b033bf5c/status

2025-01-24T13:41:06 532c5c6c [I] Finished GET /dynflow/tasks/9dfe1c7b-de67-4f9f-8498-c7b0b033bf5c/status with 404 (1.13 ms)

2025-01-24T13:41:07 a4b43d84 [I] Started GET /dynflow/tasks/9dfe1c7b-de67-4f9f-8498-c7b0b033bf5c/status

Dynflow:

And then after sometime:

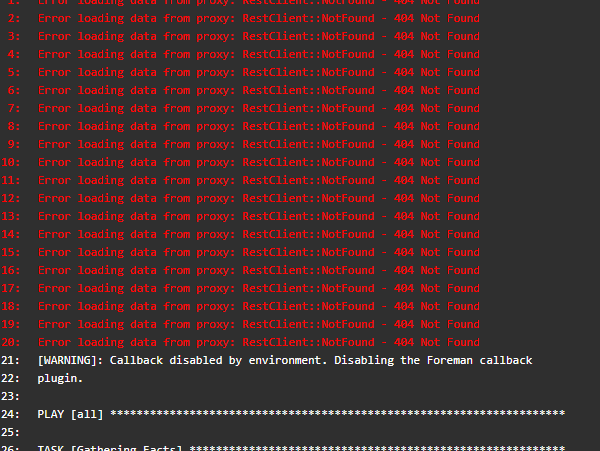

134:

Error loading data from proxy: RestClient::NotFound - 404 Not Found

135:

Error loading data from proxy: RestClient::NotFound - 404 Not Found

136:

Actions::ProxyAction::ProxyActionMissing: Proxy task gone missing from the smart proxy

Here are some more relevant logs from foreman and the proxy:

Foreman:

[root@10-222-206-158 jsparrow]# grep b1b11dff /var/log/foreman/production.log

2025-01-24T14:03:12 [I|app|b1b11dff] Started POST "/api/job_invocations" for 10.222.218.3 at 2025-01-24 14:03:12 +0000

2025-01-24T14:03:12 [I|app|b1b11dff] Processing by Api::V2::JobInvocationsController#create as JSON

2025-01-24T14:03:12 [I|app|b1b11dff] Parameters: {"job_invocation"=>{"job_template_id"=>172, "targeting_type"=>"static_query", "randomized_ordering"=>nil, "inputs"=>{"state"=>"ssnc-foreman-saltcontrol"}, "ssh_user"=>nil, "ssh"=>{"effective_user"=>"root"}, "recurrence"=>nil, "scheduling"=>nil, "concurrency_control"=>{"concurrency_level"=>"4"}, "bookmark_id"=>nil, "search_query"=>"name ^ (10-213-48-11.ssnc-corp.cloud, 10-222-203-207.ssnc-corp.cloud, 10-222-45-187.ssnc-corp.cloud, 10-234-32-23.ssnc-corp.cloud, 10-234-61-47.ssnc-corp.cloud)", "description_format"=>"Salt: state.apply", "execution_timeout_interval"=>500, "time_to_pickup"=>""}, "apiv"=>"v2"}

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on job_category Salt

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on description Salt: state.apply

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on concurrency_level 4

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on execution_timeout_interval 500

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on password [redacted]

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on key_passphrase

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on remote_execution_feature_id

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on effective_user_password

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on ssh_user

2025-01-24T14:03:12 [I|aud|b1b11dff] JobInvocation (515) create event on time_to_pickup

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: , execution_plan_id: a8122b1d-8609-434f-865a-61e3437ea9d4} state changed: pending

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostsJob, id: 4743b44c-b39c-4a14-8695-c18f3283a17c, execution_plan_id: a8122b1d-8609-434f-865a-61e3437ea9d4} state changed: planning

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostsJob, id: 4743b44c-b39c-4a14-8695-c18f3283a17c, execution_plan_id: a8122b1d-8609-434f-865a-61e3437ea9d4} state changed: planned

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostsJob, id: 4743b44c-b39c-4a14-8695-c18f3283a17c, execution_plan_id: a8122b1d-8609-434f-865a-61e3437ea9d4} state changed: running

2025-01-24T14:03:12 [I|app|b1b11dff] Rendered /usr/share/gems/gems/foreman_remote_execution-14.0.2/app/views/api/v2/job_invocations/create.json.rabl (Duration: 25.7ms | Allocations: 12859)

2025-01-24T14:03:12 [I|app|b1b11dff] Completed 201 Created in 266ms (Views: 27.6ms | ActiveRecord: 61.9ms | Allocations: 81674)

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: , execution_plan_id: 1b2166d4-ea1c-4481-a77a-b62f8ad6e217} state changed: pending

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 93ea47de-d47c-4ee8-8d55-c508156214a0, execution_plan_id: 1b2166d4-ea1c-4481-a77a-b62f8ad6e217} state changed: planning

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 93ea47de-d47c-4ee8-8d55-c508156214a0, execution_plan_id: 1b2166d4-ea1c-4481-a77a-b62f8ad6e217} state changed: planned

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: , execution_plan_id: d4e4d679-29e6-445c-b115-cd077f624b79} state changed: pending

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 3a57096c-7f6a-492f-ad2d-9e1204c759de, execution_plan_id: d4e4d679-29e6-445c-b115-cd077f624b79} state changed: planning

2025-01-24T14:03:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 93ea47de-d47c-4ee8-8d55-c508156214a0, execution_plan_id: 1b2166d4-ea1c-4481-a77a-b62f8ad6e217} state changed: running

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 3a57096c-7f6a-492f-ad2d-9e1204c759de, execution_plan_id: d4e4d679-29e6-445c-b115-cd077f624b79} state changed: planned

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: , execution_plan_id: 1ba6a87a-872a-4f0d-b2a8-808a4e429e3a} state changed: pending

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 3a57096c-7f6a-492f-ad2d-9e1204c759de, execution_plan_id: d4e4d679-29e6-445c-b115-cd077f624b79} state changed: running

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 41523cc0-3a00-495f-bcb7-94a8c28d5dfa, execution_plan_id: 1ba6a87a-872a-4f0d-b2a8-808a4e429e3a} state changed: planning

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 41523cc0-3a00-495f-bcb7-94a8c28d5dfa, execution_plan_id: 1ba6a87a-872a-4f0d-b2a8-808a4e429e3a} state changed: planned

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: , execution_plan_id: ac996ea6-3236-4790-9d4a-8ca3f4ef9d01} state changed: pending

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 41523cc0-3a00-495f-bcb7-94a8c28d5dfa, execution_plan_id: 1ba6a87a-872a-4f0d-b2a8-808a4e429e3a} state changed: running

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: fe025b84-a951-4f90-beef-97c4e9fec79b, execution_plan_id: ac996ea6-3236-4790-9d4a-8ca3f4ef9d01} state changed: planning

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: fe025b84-a951-4f90-beef-97c4e9fec79b, execution_plan_id: ac996ea6-3236-4790-9d4a-8ca3f4ef9d01} state changed: planned

2025-01-24T14:03:13 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: fe025b84-a951-4f90-beef-97c4e9fec79b, execution_plan_id: ac996ea6-3236-4790-9d4a-8ca3f4ef9d01} state changed: running

2025-01-24T14:03:16 [I|bac|b1b11dff] Event delivered by request 5d607c96-0f2f-471d-bf09-da8b2887b0c9

2025-01-24T14:03:16 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: fe025b84-a951-4f90-beef-97c4e9fec79b, execution_plan_id: ac996ea6-3236-4790-9d4a-8ca3f4ef9d01} state changed: stopped result: error

2025-01-24T14:03:28 [I|bac|b1b11dff] Task {label: , execution_plan_id: 6268c0e3-d477-45be-a2c8-e9e7b0f11dd1} state changed: pending

2025-01-24T14:03:28 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 8b05b98a-f672-4581-8711-12b045790498, execution_plan_id: 6268c0e3-d477-45be-a2c8-e9e7b0f11dd1} state changed: planning

2025-01-24T14:03:28 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 8b05b98a-f672-4581-8711-12b045790498, execution_plan_id: 6268c0e3-d477-45be-a2c8-e9e7b0f11dd1} state changed: planned

2025-01-24T14:03:28 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 8b05b98a-f672-4581-8711-12b045790498, execution_plan_id: 6268c0e3-d477-45be-a2c8-e9e7b0f11dd1} state changed: running

2025-01-24T14:03:30 [E|bac|b1b11dff] Job execution failed

2025-01-24T14:03:31 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 8b05b98a-f672-4581-8711-12b045790498, execution_plan_id: 6268c0e3-d477-45be-a2c8-e9e7b0f11dd1} state changed: stopped result: error

2025-01-24T14:03:40 [I|bac|b1b11dff] Event delivered by request dc0cf7db-77c5-4f27-b30f-bc1640579a2c

2025-01-24T14:03:40 [E|bac|b1b11dff] Job execution failed

2025-01-24T14:03:40 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 93ea47de-d47c-4ee8-8d55-c508156214a0, execution_plan_id: 1b2166d4-ea1c-4481-a77a-b62f8ad6e217} state changed: stopped result: error

2025-01-24T14:13:12 [E|bac|b1b11dff] Proxy task gone missing from the smart proxy (Actions::ProxyAction::ProxyActionMissing)

b1b11dff |

2025-01-24T14:13:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 3a57096c-7f6a-492f-ad2d-9e1204c759de, execution_plan_id: d4e4d679-29e6-445c-b115-cd077f624b79} state changed: stopped result: error

2025-01-24T14:13:12 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostJob, id: 41523cc0-3a00-495f-bcb7-94a8c28d5dfa, execution_plan_id: 1ba6a87a-872a-4f0d-b2a8-808a4e429e3a} state changed: stopped result: error

2025-01-24T14:13:14 [E|bac|b1b11dff] A sub task failed (Dynflow::Action::V2::WithSubPlans::SubtaskFailedException)

b1b11dff |

2025-01-24T14:13:14 [I|bac|b1b11dff] Task {label: Actions::RemoteExecution::RunHostsJob, id: 4743b44c-b39c-4a14-8695-c18f3283a17c, execution_plan_id: a8122b1d-8609-434f-865a-61e3437ea9d4} state changed: stopped result: warning

Proxy:

root@10-222-45-187:kc-saltmom-01:[/etc/foreman-proxy/settings.d]:

$ grep d4e4d679-29e6-445c-b115-cd077f624b79 /var/log/foreman-proxy/proxy.log

2025-01-24T14:11:11 1ba135ab [I] Started GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status

2025-01-24T14:11:11 1ba135ab [I] Finished GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status with 404 (2.47 ms)

2025-01-24T14:11:13 cc59fd2a [I] Started GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status

2025-01-24T14:11:13 cc59fd2a [I] Finished GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status with 404 (1.17 ms)

2025-01-24T14:11:14 8189b618 [I] Started GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status

2025-01-24T14:11:14 8189b618 [I] Finished GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status with 404 (1.34 ms)

2025-01-24T14:11:16 41cfd991 [I] Started GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status

2025-01-24T14:11:16 41cfd991 [I] Finished GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status with 404 (1.09 ms)

2025-01-24T14:11:18 bc8cc65a [I] Started GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status

2025-01-24T14:11:18 bc8cc65a [I] Finished GET /dynflow/tasks/d4e4d679-29e6-445c-b115-cd077f624b79/status with 404 (1.17 ms)

repeated....