Problem: Memory usage of Foreman 2.5.4 reaches sometimes very high values for Puma workers, somewhere between ~5GB and up to ~10GB.

Foreman and Proxy versions: Foreman + Smart Proxies are on 2.5.4

Distribution and version: AlmaLinux 8.5, happens also on CentOS Linux 7.9

Other relevant data:

Hi,

I am seeing sometimes very high memory usage for Puma workers.

From the usual ~500-600MB, sometimes this peaks up to ~5GB or the record so far ~10GB:

[root@it-puppet-tfm01 ~]# foreman-puma-status

979 (/usr/share/foreman/tmp/puma.state) Uptime: 1d 1h | Phase: 0 | Load: 1[█░░░░░░░░░░░░░░░░░░░░░░░░░░░░░]30 | Req: 38463

└ 2129 CPU: 0.0% Mem: 381 MB Uptime: 1d 1h | Load: 0[░░░░░]5 | Req: 8204

└ 2132 CPU: 0.0% Mem: 5324 MB Uptime: 1d 1h | Load: 0[░░░░░]5 | Req: 6715

└ 2136 CPU: 0.0% Mem: 5427 MB Uptime: 1d 1h | Load: 0[░░░░░]5 | Req: 6921

└ 2144 CPU: 0.0% Mem: 328 MB Uptime: 1d 1h | Load: 0[░░░░░]5 | Req: 8488

└ 2151 CPU: 13.0% Mem: 432 MB Uptime: 1d 1h | Load: 0[░░░░░]5 | Req: 7877

└ 101124 CPU: 6.0% Mem: 420 MB Uptime: 5m45s | Load: 1[█░░░░]5 | Req: 258

or here, from the CentOS Linux 7 instance:

[root@it-puppet-infra02 ~]# foreman-puma-status

2121 (/usr/share/foreman/tmp/puma.state) Uptime: 11d | Phase: 0 | Load: 0[░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░]60 | Req: 648495

└ 41400 CPU: 40.0% Mem: 10240 MB Uptime: 2d23h | Load: 0[░░░░░]5 | Req: 156682

└ 41487 CPU: 0.0% Mem: 476 MB Uptime: 2d23h | Load: 0[░░░░░]5 | Req: 60629

└ 2432 CPU: 0.0% Mem: 5632 MB Uptime: 1d 0h | Load: 0[░░░░░]5 | Req: 57049

└ 2444 CPU: 0.0% Mem: 8704 MB Uptime: 1d 0h | Load: 0[░░░░░]5 | Req: 56561

└ 2450 CPU: 0.0% Mem: 10547 MB Uptime: 1d 0h | Load: 0[░░░░░]5 | Req: 52630

└ 2508 CPU: 0.0% Mem: 408 MB Uptime: 1d 0h | Load: 0[░░░░░]5 | Req: 19926

└ 2514 CPU: 0.0% Mem: 5836 MB Uptime: 1d 0h | Load: 0[░░░░░]5 | Req: 46749

└ 2838 CPU: 0.0% Mem: 5632 MB Uptime: 1d 0h | Load: 0[░░░░░]5 | Req: 17305

└ 26121 CPU: 0.0% Mem: 619 MB Uptime: 23h12m | Load: 0[░░░░░]5 | Req: 57244

└ 27357 CPU: 0.0% Mem: 698 MB Uptime: 23h 6m | Load: 0[░░░░░]5 | Req: 53648

└ 27513 CPU: 0.0% Mem: 693 MB Uptime: 23h 6m | Load: 0[░░░░░]5 | Req: 57199

└ 30274 CPU: 0.0% Mem: 530 MB Uptime: 22h55m | Load: 0[░░░░░]5 | Req: 12873

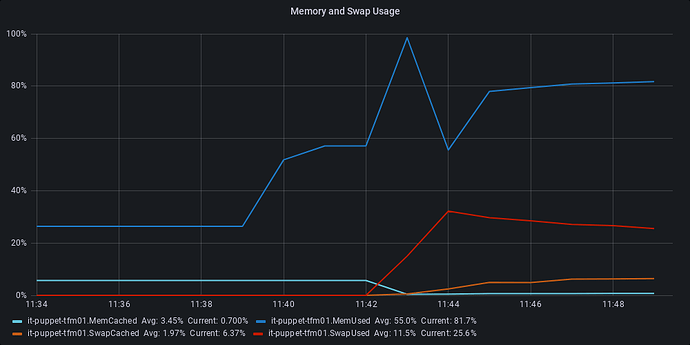

From the memory plot, it goes up in steps:

I am seeing this issue on both CentOS Linux 7.9 and AlmaLinux 8.5, so this seems to be independent of the operating system.

I tried to grep for high Allocations, most of the time it seems to be overview URL, which correlates to the time of the memory usage peak:

[root@it-puppet-tfm01 ~]# grep 00e8ff05 /var/log/foreman/production.log

2021-12-03T11:40:22 [I|app|00e8ff05] Started GET "/hosts/hasfcons14.desy.de/overview" for xxx.xxx.xxx.xxx at 2021-12-03 11:40:22 +0100

2021-12-03T11:40:22 [I|app|00e8ff05] Processing by HostsController#overview as HTML

2021-12-03T11:40:22 [I|app|00e8ff05] Parameters: {"id"=>"hasfcons14.desy.de"}

2021-12-03T11:43:07 [I|app|00e8ff05] Rendered hosts/_overview.html.erb (Duration: 165212.0ms | Allocations: 23968960)

2021-12-03T11:43:07 [I|app|00e8ff05] Completed 200 OK in 165247ms (Views: 157367.8ms | ActiveRecord: 7852.8ms | Allocations: 23976488)

[root@it-puppet-tfm01 ~]# grep 6cba0518 /var/log/foreman/production.log

2021-12-03T11:43:06 [I|app|6cba0518] Started GET "/hosts/batch1301.desy.de/overview" for xxx.xxx.xxx.xxx at 2021-12-03 11:43:06 +0100

2021-12-03T11:43:06 [I|app|6cba0518] Processing by HostsController#overview as HTML

2021-12-03T11:43:06 [I|app|6cba0518] Parameters: {"id"=>"batch1301.desy.de"}

2021-12-03T11:45:50 [I|app|6cba0518] Rendered hosts/_overview.html.erb (Duration: 164198.7ms | Allocations: 23226182)

2021-12-03T11:45:50 [I|app|6cba0518] Completed 200 OK in 164251ms (Views: 157231.9ms | ActiveRecord: 6989.2ms | Allocations: 23241033)

[root@it-puppet-tfm01 ~]# grep 16974d1d /var/log/foreman/production.log

2021-12-03T11:44:43 [I|app|16974d1d] Started GET "/hosts/batch1302.desy.de/overview" for xxx.xxx.xxx.xxx at 2021-12-03 11:44:43 +0100

2021-12-03T11:44:43 [I|app|16974d1d] Processing by HostsController#overview as HTML

2021-12-03T11:44:43 [I|app|16974d1d] Parameters: {"id"=>"batch1302.desy.de"}

2021-12-03T11:47:26 [I|app|16974d1d] Rendered hosts/_overview.html.erb (Duration: 162800.0ms | Allocations: 23383184)

2021-12-03T11:47:26 [I|app|16974d1d] Completed 200 OK in 162866ms (Views: 148853.1ms | ActiveRecord: 13968.1ms | Allocations: 23391034)

I had a look at those hosts, they do not have many NICs or parameters configured, which would explain this.

The Foreman instance is rather plain, aside from the PuppetDB Plugin, no plugins are installed. Multiple Puppet Servers use this instance as an ENC, and upload facts/reports. Overall ~6100 nodes are inside this instance.

It looks a bit like this issue 33585, but parameters are only rarely used.

I can not really point out the exact time, when this issue started to appear.

From the historic memory plots, the issue started to appear after upgrading from Foreman 2.5.0 → 2.5.2. Later it has been upgraded from 2.5.2 → 2.5.4, but this did not change anything wrt. memory consumption.

Does anybody have some ideas how to debug this further? I am planning to setup the StatsD to Prometheus to get some additional metrics.

Regards,

Stefan