Thanks for the error and ram usage info @John_Beranek, I’ll ask the Pulp team about it.

@John_Beranek do you find that your baseline RAM usage (not during syncing) decreases (even temporarily) if you restart Katello or Pulpcore services? systemctl restart pulpcore* --all, particularly for Pulpcore.

The Pulp team is going to start looking into the performance issues with the OL repositories soon.

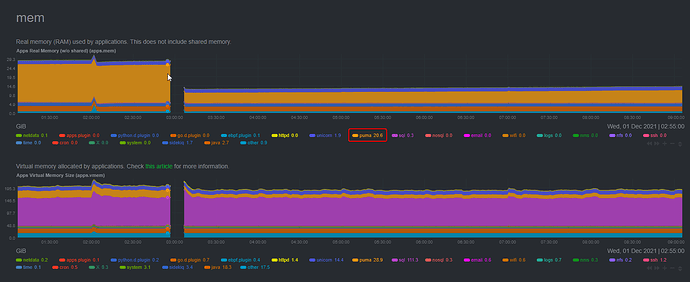

Although…looking at the Katello server this morning…

foreman 930281 0.0 0.8 827968 281196 ? Ssl Nov21 2:11 puma 5.3.2 (unix:///run/foreman.sock) [foreman]

foreman 930977 0.6 1.5 1109044 534964 ? Sl Nov21 27:34 puma: cluster worker 0: 930281 [foreman]

foreman 930978 0.5 1.5 1046404 524068 ? Sl Nov21 24:45 puma: cluster worker 1: 930281 [foreman]

foreman 930979 0.6 1.5 1160408 534444 ? Sl Nov21 27:40 puma: cluster worker 2: 930281 [foreman]

foreman 930983 0.6 1.5 1106172 533892 ? Sl Nov21 27:45 puma: cluster worker 3: 930281 [foreman]

foreman 930991 0.5 1.5 1137104 550864 ? Sl Nov21 22:58 puma: cluster worker 4: 930281 [foreman]

foreman 931001 0.6 1.5 1152152 547760 ? Sl Nov21 26:16 puma: cluster worker 5: 930281 [foreman]

foreman 931007 0.6 1.5 1064404 550272 ? Sl Nov21 26:07 puma: cluster worker 6: 930281 [foreman]

foreman 931013 1.6 44.3 16621128 15350872 ? Sl Nov21 69:33 puma: cluster worker 7: 930281 [foreman]

foreman 931016 0.5 1.5 1080860 535256 ? Sl Nov21 22:26 puma: cluster worker 8: 930281 [foreman]

foreman 931021 0.5 1.6 1222988 558556 ? Sl Nov21 24:41 puma: cluster worker 9: 930281 [foreman]

foreman 931026 0.4 1.5 1194788 547252 ? Sl Nov21 21:30 puma: cluster worker 10: 930281 [foreman]

foreman 931033 0.6 1.5 1119580 542744 ? Sl Nov21 27:52 puma: cluster worker 11: 930281 [foreman]

One worker using 44% of the server’s RAM, 15GiB? (When there are no running tasks)

@John_Beranek regarding the puma worker that’s using a lot of memory, can you check this from your server via foreman-rake? Katello::EventDaemon::Runner.pid ? Let me know if the PID it reports matches the high memory puma worker on your system.

Yes, it matches - after a week (Edit: I say a week, but it looks like the worker restarted yesterday):

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

3026536 foreman 20 0 9079132 7.8g 11196 S 4.3 23.7 30:06.60 rails

# foreman-rake console

Loading production environment (Rails 6.0.3.7)

irb(main):001:0> Katello::EventDaemon::Runner.pid

=> 3026536

irb(main):002:0>

Thank you. Next time you see the memory going high please share the output of hammer ping - especially the *_events parts. Maybe I can tell which event handler is causing the high usage

So, unfortunately I didn’t catch it before another OOM-kill event during this morning’s daily sync, I’ll try to catch it in the next few days…

(Gap in netdata stats as the OOM-killer decided to kill netdata first)

Jonathon, can you explain me why the process is named just rails? Puma processes should be always named puma since puma app server calls setproctitle to modify process title.

I am trying to identify all processes we have on Katello deployment:

I believe it should be pretty easy for Katello Event Daemon to set process title to something reasonable.

OK, so I have a puma process which has reached 11GiB resident, and:

# hammer ping

database:

Status: ok

Server Response: Duration: 0ms

katello_agent:

Status: FAIL

message: Not running

Server Response: Duration: 0ms

candlepin:

Status: ok

Server Response: Duration: 17ms

candlepin_auth:

Status: ok

Server Response: Duration: 17ms

candlepin_events:

Status: ok

message: 57949 Processed, 0 Failed

Server Response: Duration: 0ms

katello_events:

Status: ok

message: 40 Processed, 0 Failed

Server Response: Duration: 0ms

pulp3:

Status: ok

Server Response: Duration: 68ms

foreman_tasks:

Status: ok

Server Response: Duration: 36ms

Can you please share once again full list of puma processes and output of this command (the PID of the event daemon)?

# foreman-rake console

Loading production environment (Rails 6.0.3.7)

irb(main):001:0> Katello::EventDaemon::Runner.pid

=> 1805266

# ps auxww|grep puma

root 606352 0.0 0.0 221924 1052 pts/0 S+ 10:37 0:00 grep --color=auto puma

foreman 1804501 0.0 0.6 828500 229288 ? Ssl Nov24 6:37 puma 5.3.2 (unix:///run/foreman.sock) [foreman]

foreman 1805234 0.6 1.5 1207352 545512 ? Sl Nov24 86:07 puma: cluster worker 0: 1804501 [foreman]

foreman 1805242 0.6 1.5 1101376 537516 ? Sl Nov24 87:50 puma: cluster worker 2: 1804501 [foreman]

foreman 1805248 0.5 1.6 1208756 560996 ? Sl Nov24 73:39 puma: cluster worker 3: 1804501 [foreman]

foreman 1805254 0.7 1.6 1179856 556028 ? Sl Nov24 93:12 puma: cluster worker 4: 1804501 [foreman]

foreman 1805260 0.7 1.5 1128044 552488 ? Sl Nov24 90:49 puma: cluster worker 5: 1804501 [foreman]

foreman 1805266 0.8 34.4 13024232 11916008 ? Sl Nov24 110:08 puma: cluster worker 6: 1804501 [foreman]

foreman 1805269 0.7 1.6 1087216 554368 ? Sl Nov24 92:15 puma: cluster worker 7: 1804501 [foreman]

foreman 1805275 0.6 1.6 1219604 576884 ? Sl Nov24 88:13 puma: cluster worker 8: 1804501 [foreman]

foreman 1805277 0.7 1.6 1202848 558220 ? Sl Nov24 95:13 puma: cluster worker 9: 1804501 [foreman]

foreman 1805283 0.6 1.5 1105260 551788 ? Sl Nov24 81:42 puma: cluster worker 10: 1804501 [foreman]

foreman 1805287 0.7 1.6 1196852 558356 ? Sl Nov24 92:54 puma: cluster worker 11: 1804501 [foreman]

foreman 4036611 0.5 1.5 1140960 546892 ? Sl Dec01 19:13 puma: cluster worker 1: 1804501 [foreman]

Can you tell what made the worker no 1 to restart? Did you kill it? Because puma, by default, does not recycle processes. Was it a OOM killer?

On the same day, but not the same hour…

# journalctl --no-hostname -S "7 days ago" |fgrep "Out of memory"

Nov 28 02:00:46 kernel: Out of memory: Killed process 1710529 (ebpf.plugin) total-vm:394528kB, anon-rss:153800kB, file-rss:0kB, shmem-rss:560kB, UID:987 pgtables:604kB oom_score_adj:1000

Nov 28 02:01:34 kernel: Out of memory: Killed process 1710320 (netdata) total-vm:243972kB, anon-rss:120264kB, file-rss:0kB, shmem-rss:560kB, UID:987 pgtables:412kB oom_score_adj:1000

Dec 01 03:03:12 kernel: Out of memory: Killed process 3026521 (netdata) total-vm:237880kB, anon-rss:121628kB, file-rss:0kB, shmem-rss:560kB, UID:987 pgtables:400kB oom_score_adj:1000

Dec 01 03:04:23 kernel: Out of memory: Killed process 4035985 (netdata) total-vm:168932kB, anon-rss:77416kB, file-rss:0kB, shmem-rss:556kB, UID:987 pgtables:264kB oom_score_adj:1000

Dec 01 03:04:23 kernel: Out of memory: Killed process 3026536 (rails) total-vm:16762388kB, anon-rss:15458220kB, file-rss:0kB, shmem-rss:0kB, UID:994 pgtables:32396kB oom_score_adj:0

[Edit: In fact, the killed PID above matches the last time I provided the EventDaemon’s PID.]

So, it’s only just ‘rails’ when the kernel’s OOM-killer comes along - presumably the kernel wants to only output the original process name, and not the dynamically set one?

What we see (one of the worker processes - selected randomly?) is handling the events feels weird to me. I’ve created a lengthy post about this:

That is a larger problem on its own, this is why I created separate post. I will let Katello devs to investigate and help you in this one.

@Jonathon_Turel remember we have a nice telemetry API in Foreman core which is not utilized at all for Katello events. It is extremely easy to add statements to monitor amount of events, events per minute, length of queues and other important metrics which could help.

What would be also possible to call Ruby VM stat before and after each event is processed and make a reading of amount of allocated objects. This can be reported per event and easily aggregated later.

Here is a reminder to use our telemetry API for such information: Foreman telemetry API for developers

Yes, title set via setproctitle are only read by some tools like ps or top.

I noticed that katello_agent is FAIL in your hammer ping output. Do you use katello agent in your infrastructure? If not, you should run the installer with --foreman-proxy-content-enable-katello-agent=false

We have no installations of Katello agent, and yet Constant qpidd errors in journal

Hi, I just found this topic,

I face the same problem as John, such as those errors when syncing :

Nov 17 09:17:41 katello.example.com pulpcore-worker-7[3775518]: django.db.models.deletion.ProtectedError: ("Cannot delete some instances of model 'ReservedResource' because they are referenced through a protected foreign key: 'TaskReservedResource.resource'",>

Nov 17 09:17:42 katello.example.com pulpcore-api[3277945]: pulp [89e5995c-3b2b-437a-b367-4faf9c981c39]: - - [17/Nov/2021:09:17:42 +0000] "GET /pulp/api/v3/tasks/039e13b0-5a99-4f8c-9906-14818f48a8d6/ HTTP/1.1" 200 1411 "-" "OpenAPI-Generator/3.14.1/ruby"

The same hammer ping result with “katello_agent” as failed even though we’ve never used katello agent, and the same qpidd errors in the journal.

This message helped me Oracle Linux 8 Appstream sync fails - #9 by John_Beranek

But sadly I think you already know about it

I suspect that you and the OP have upgraded from older version of Katello where the Katello Agent infrastructure is enabled by default. New installations do not do so. If you aren’t using it then I absolutely recommend running the installer as I mentioned to remove all traces of Katello Agent from your server.

That installer option is --foreman-proxy-content-enable-katello-agent=false