This is an AI summary of 6 days of debugging an issue with Katello.

To be fair, we have an unusual Katello setup, although I don't think it would be unheard of for others to set up something similar.

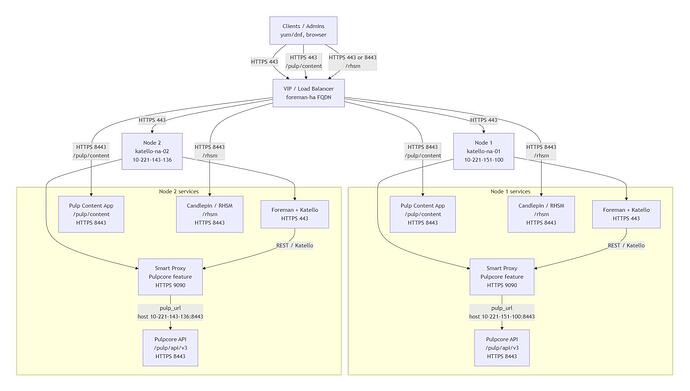

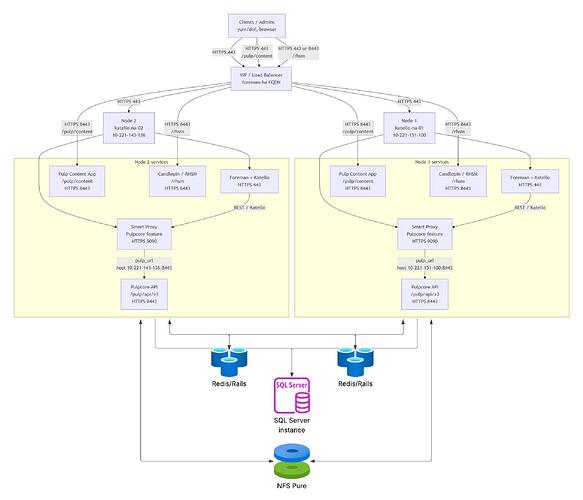

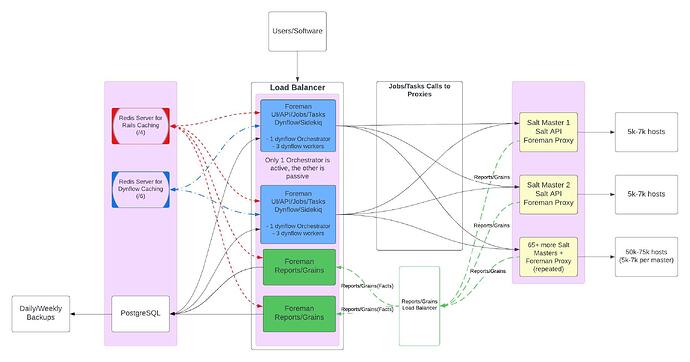

Here is our environment. We built our own highly available, expandable infrastructure. Yes, this is far from what Satellite recommends, I assume because of the complexity, but it is all working.

Environment and context

- Foreman with Katello 4.18.1
- Pulp RPM client gem: pulp_rpm_client-3.29.5
- Pulpcore feature enabled on smart proxies
- Pulp API is listening on port 8443 on the capsule
  - Example Pulp URL: https://10-221-143-136.redacted:8443
- Smart Proxy URLs (the Foreman side) use port 9090
  - Example: https://10-221-143-136.redacted:9090

Goal: create an external AlmaLinux RPM repository through the Katello UI, which should create a Pulp RPM remote and then sync.

Observed: doing it in the Rails console works, doing it through the UI fails with an SSL error.

Initial symptom

From the Katello UI, when creating a new AlmaLinux repository, the “create remote” step failed with:

Faraday::SSLError

SSL_connect returned=1 errno=0 state=error: record layer failure

The same operation done by hand in foreman-rake console against the Pulp RPM client worked and successfully created a remote.

This immediately told us:

- Pulp itself responds fine.
- TLS and CA look OK when we drive the client manually.
- The problem is specific to how Katello constructs and uses the Pulp client from the UI path.

Step 1. Exploring the Katello Pulp3 API classes

We first tried to follow some older advice that used a Base API class:

rpm_client = ::Katello::Pulp3::Api::Base.new(:rpm).client

This failed with:

NameError: uninitialized constant Katello::Pulp3::Api::Base

We then introspected Katello::Pulp3:

Katello::Pulp3.constants.sort

# => [:AlternateContentSource, :AnsibleCollection, :Api, :Content, ... :Rpm, :ServiceCommon, :Task, :TaskGroup]

Katello::Pulp3::Api.constants.sort

# => [:AnsibleCollection, :Apt, :ContentGuard, :Core, :Docker, :File, :Generic, :Yum]

So there is no Base, but there is Core and Yum.

We inspected methods:

y = Katello::Pulp3::Api::Yum

c = Katello::Pulp3::Api::Core

y.singleton_methods(false)

# => [:add_remove_content_class, :remote_uln_class, :copy_class, :rpm_package_group_class, :alternate_content_source_class]

y.instance_methods(false)

# => [:content_package_environments_api, :alternate_content_source_api, :content_modulemd_defaults_api, ... :content_package_groups_api]

c.instance_methods(false)

# => [:distributions_list_all, :remotes_list, :remotes_list_all, :repository_type=,

# :repair_class, :repository_versions_api, :get_remotes_api, ...]

We also checked the constructors:

Katello::Pulp3::Api::Core.instance_method(:initialize).parameters

# => [[:req, :smart_proxy], [:opt, :repository_type]]

Katello::Pulp3::Api::Yum.instance_method(:initialize).parameters

# => [[:req, :smart_proxy], [:opt, :repository_type]]

So both Core and Yum expect a SmartProxy (or a Core instance) and can build the Pulp clients.
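The introspection calls used above are plain Ruby reflection and work on any class. A minimal sketch, using a hypothetical stand-in class (DemoApi is not a Katello class) whose constructor only mirrors the shape reported for Core and Yum:

```ruby
# Hypothetical stand-in; it only mirrors the constructor shape reported above.
class DemoApi
  def initialize(smart_proxy, repository_type = nil)
    @smart_proxy = smart_proxy
    @repository_type = repository_type
  end

  def remotes_list; end

  def self.copy_class; end
end

# Required vs optional constructor arguments.
p DemoApi.instance_method(:initialize).parameters
# => [[:req, :smart_proxy], [:opt, :repository_type]]

# Methods defined directly on the class, excluding inherited ones.
p DemoApi.instance_methods(false)   # => [:remotes_list]
p DemoApi.singleton_methods(false)  # => [:copy_class]
```

Passing false keeps inherited methods out of the listing, which is what makes the output short enough to read on a large class.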

Step 2. Proving that the Pulp RPM client works from the console

We wired things manually in foreman-rake console:

sp = SmartProxy.find_by(name: 'katello-na-02')

core = Katello::Pulp3::Api::Core.new(sp)

yum = Katello::Pulp3::Api::Yum.new(sp)

Then we used yum.get_remotes_api correctly by passing a URL:

rpm_remotes = yum.get_remotes_api(

url: 'https://repo.almalinux.org/almalinux/8/AppStream/x86_64/kickstart/'

)

rpm_client = rpm_remotes.api_client

cfg = rpm_client.config

cfg.base_url # => "https://10-221-143-136.redacted"

cfg.scheme # => "https"

cfg.host # => "10-221-143-136.redacted"

cfg.base_path # => ""

cfg.ssl_ca_file # was nil at first until we changed defaults

cfg.ssl_verify # => true

We then built a remote body:

body = {

name: "x86_64_kickstart-DEBUG",

url: "https://repo.almalinux.org/almalinux/8/AppStream/x86_64/kickstart/",

ca_cert: nil,

client_cert: nil,

client_key: nil,

tls_validation: true,

proxy_url: nil,

proxy_username: nil,

proxy_password: nil,

username: nil,

password: nil,

policy: "immediate",

total_timeout: 3600,

connect_timeout: 60,

sock_connect_timeout: 60,

sock_read_timeout: 3600,

rate_limit: 0

}

Our early attempts failed with SSL errors until we fixed the Pulp client config:

- We set the correct Pulp port and base path:

cfg.host = '10-221-143-136.redacted:8443'

cfg.base_path = '' # let the client add /pulp/api/v3

- Enabled debugging and attached Rails logger:

cfg.debugging = true

cfg.logger = Rails.logger

Once the host included :8443, the call succeeded:

remote = rpm_remotes.create(body)

remote.pulp_href

# => "/pulp/api/v3/remotes/rpm/rpm/019afef5-.../"

remote.url

# => "https://repo.almalinux.org/almalinux/8/AppStream/x86_64/kickstart/"

We also verified via:

rpm_remotes.list.results.each do |r|

puts "#{r.name} -> #{r.pulp_href}"

end

# x86_64_kickstart-DEBUG-1765219237 -> /pulp/api/v3/remotes/rpm/rpm/019aff44-.../

# x86_64_kickstart-DEBUG -> /pulp/api/v3/remotes/rpm/rpm/019afef5-.../

So:

- The Pulp RPM client and Pulp API work fine when we explicitly target host:8443.
- The SSL issue is not a generic TLS trust problem but a wrong endpoint issue.
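To make the wrong endpoint point concrete: in the client configuration the port has no field of its own and travels inside host. The sketch below does not call pulp_rpm_client. compose_base_url is a hypothetical helper that only approximates how a base URL is assembled from scheme, host and base_path, and the hostname is a placeholder.

```ruby
require 'uri'

# Hypothetical helper (not part of pulp_rpm_client): joins the three settings
# and collapses duplicate slashes, then trims any trailing slash.
def compose_base_url(scheme:, host:, base_path: '')
  joined = [host, base_path].join('/').gsub(%r{/+}, '/')
  "#{scheme}://#{joined}".sub(%r{/+\z}, '')
end

with_port    = compose_base_url(scheme: 'https', host: 'capsule.example.com:8443')
without_port = compose_base_url(scheme: 'https', host: 'capsule.example.com')

puts with_port                     # https://capsule.example.com:8443
puts URI.parse(with_port).port     # 8443
puts without_port                  # https://capsule.example.com
puts URI.parse(without_port).port  # 443 (the https default)
```

If the port is missing from host, nothing else in the configuration can bring it back, and an https URL silently falls back to 443.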

Step 3. Trying to fix things via pulp_rpm_client Configuration defaults

We inspected the OpenAPI generated configuration class:

# /usr/share/gems/gems/pulp_rpm_client-3.29.5/lib/pulp_rpm_client/configuration.rb

module PulpRpmClient

class Configuration

attr_accessor :scheme, :host, :base_path, :ssl_verify, :ssl_ca_file, ...

def initialize

@scheme = 'https'

@host = '10-221-143-136.redacted:8443'

@base_path = ''

@ssl_verify = true

@ssl_ca_file = '/etc/pki/ca-trust/source/anchors/foreman-ha.pem'

@debugging = true

@logger = defined?(Rails) ? Rails.logger : Logger.new(STDOUT)

...

end

def host=(host)

# remove http(s):// and anything after a slash

@host = host.sub(/https?:\/\//, '').split('/').first

end

def base_path=(base_path)

@base_path = "/#{base_path}".gsub(/\/+/, '/')

@base_path = '' if @base_path == '/'

end

...

end

end

We confirmed that the defaults were applied:

cfg_new = PulpRpmClient::Configuration.new

cfg_new.ssl_ca_file

# => "/etc/pki/ca-trust/source/anchors/foreman-ha.pem"

PulpRpmClient::Configuration.default.ssl_ca_file

# => "/etc/pki/ca-trust/source/anchors/foreman-ha.pem"

In the console, this worked nicely. But when the UI path ran, we still got Faraday::SSLError, even though Configuration.default looked correct.

This suggested that something in Katello was overriding our host during pulp3_configuration, not that the defaults were wrong.

Step 4. Adding heavy logging to PulpRpmClient::Configuration

To see what was actually happening during a UI call, we added logging into configuration.rb, for example:

def initialize

@scheme = 'https'

@host = '10-221-143-136.redacted:8443'

@base_path = ''

@ssl_verify = true

@ssl_ca_file = '/etc/pki/ca-trust/source/anchors/foreman-ha.pem'

@debugging = true

@logger = defined?(Rails) ? Rails.logger : Logger.new(STDOUT)

if defined?(Rails)

Rails.logger.warn(

"[PulpRpmClient::Configuration] initialize(before yield): " \

"object_id=#{object_id} scheme=#{@scheme} host=#{@host} base_path=#{@base_path.inspect} " \

"ssl_verify=#{@ssl_verify} ssl_ca_file=#{@ssl_ca_file.inspect}"

)

end

yield(self) if block_given?

if defined?(Rails)

Rails.logger.warn(

"[PulpRpmClient::Configuration] initialize(after yield): " \

"object_id=#{object_id} scheme=#{@scheme} host=#{@host} base_path=#{@base_path.inspect} " \

"ssl_verify=#{@ssl_verify} ssl_ca_file=#{@ssl_ca_file.inspect}"

)

end

end

def host=(host)

cleaned = host.sub(/https?:\/\//, '').split('/').first

Rails.logger.warn(

"[PulpRpmClient::Configuration] host= called with #{host.inspect}, " \

"cleaned=#{cleaned.inspect} (object_id=#{object_id})"

) if defined?(Rails)

@host = cleaned

Rails.logger.warn(

"[PulpRpmClient::Configuration] host set to #{@host.inspect} (object_id=#{object_id})"

) if defined?(Rails)

end

def configure_faraday_connection(&block)

@configure_connection_blocks << block

end

def configure_connection(conn)

Rails.logger.warn(

"[PulpRpmClient::Configuration] configure_connection: before applying blocks " \

"url=#{base_url} scheme=#{scheme} host=#{host} base_path=#{base_path.inspect} " \

"ssl_verify=#{ssl_verify} ssl_ca_file=#{ssl_ca_file.inspect} (object_id=#{object_id})"

) if defined?(Rails)

Rails.logger.warn(

"[PulpRpmClient::Configuration] configure_connection: conn.class=#{conn.class}"

) if defined?(Rails)

Rails.logger.warn(

"[PulpRpmClient::Configuration] configure_connection: conn.builder.handlers(before)=#{conn.builder.handlers.inspect}"

) if defined?(Rails)

@configure_connection_blocks.each { |b| b.call(conn) }

Rails.logger.warn(

"[PulpRpmClient::Configuration] configure_connection: conn.builder.handlers(after)=#{conn.builder.handlers.inspect}"

) if defined?(Rails)

Rails.logger.warn(

"[PulpRpmClient::Configuration] configure_connection: finished for object_id=#{object_id}"

) if defined?(Rails)

end
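Editing the installed gem file works for a debugging session, but the change is lost on a gem update. A less invasive variant is to prepend a small module that wraps the setter. This is only a sketch of the technique: DemoConfiguration is a hypothetical stand-in for the real configuration class, HostLogging is a made-up name, and a plain Logger replaces Rails.logger so the example runs on its own.

```ruby
require 'logger'
require 'stringio'

# Hypothetical stand-in for the client configuration class.
class DemoConfiguration
  attr_reader :host

  def host=(host)
    @host = host.sub(%r{https?://}, '').split('/').first
  end
end

# Wraps host= so every call is logged before and after the original setter runs.
module HostLogging
  class << self
    attr_accessor :logger
  end

  def host=(value)
    HostLogging.logger&.warn("host= called with #{value.inspect} (object_id=#{object_id})")
    result = super
    HostLogging.logger&.warn("host set to #{host.inspect} (object_id=#{object_id})")
    result
  end
end

DemoConfiguration.prepend(HostLogging)

log_output = StringIO.new
HostLogging.logger = Logger.new(log_output)

config = DemoConfiguration.new
config.host = 'https://capsule.example.com:8443/pulp'
puts config.host        # capsule.example.com:8443
puts log_output.string  # two WARN lines, one per logger call
```

Because the module is prepended, its host= runs first and super reaches the original setter, so the class itself stays unmodified.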

Then we triggered a repo create in the UI and looked at /var/log/foreman/production.log:

2025-12-09T16:29:56 [W|app|1bdbb1ea] [PulpRpmClient::Configuration] initialize(before yield): object_id=507060 scheme=https host=10-221-143-136.redacted:8443 base_path="" ssl_verify=true ssl_ca_file="/etc/pki/ca-trust/source/anchors/foreman-ha.pem"

2025-12-09T16:29:56 [W|app|1bdbb1ea] [PulpRpmClient::Configuration] host= called with "10-221-151-100.redacted", cleaned="10-221-151-100.redacted" (object_id=507060)

2025-12-09T16:29:56 [W|app|1bdbb1ea] [PulpRpmClient::Configuration] host set to "10-221-151-100.redacted" (object_id=507060)

2025-12-09T16:29:56 [W|app|1bdbb1ea] [PulpRpmClient::Configuration] ssl_ca_file= called with "/etc/pki/ca-trust/source/anchors/foreman-ha.pem" (object_id=507060)

2025-12-09T16:29:56 [W|app|1bdbb1ea] [PulpRpmClient::Configuration] initialize(after yield): object_id=507060 scheme=https host=10-221-151-100.redacted base_path="" ssl_verify=true ssl_ca_file="/etc/pki/ca-trust/source/anchors/foreman-ha.pem"

Key observations:

- The Configuration starts life with host=10-221-143-136.redacted:8443 from our defaults.
- Then something calls config.host = "10-221-151-100.redacted" without the port.
- After the block, host is the bare hostname. The port information is gone.
- When the Pulp client then builds the URL, it uses https://10-221-151-100.redacted which effectively targets port 443 instead of 8443.
- That explains the SSL record layer failure: the client is talking TLS to the wrong service.

So the problem is not in the Configuration class itself, but in the Katello code that calls PulpRpmClient::Configuration.new and then sets host.
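The pattern visible in the log, defaults first and then a caller supplied block, can be reproduced in isolation. A minimal sketch with a hypothetical SketchConfiguration class (not the real PulpRpmClient::Configuration) and a placeholder hostname:

```ruby
require 'uri'

class SketchConfiguration
  attr_reader :host

  def initialize
    @host = 'capsule.example.com:8443' # default, includes the port
    yield(self) if block_given?        # the caller's block runs last and wins
  end

  # Same normalisation as the setter shown earlier: strip the scheme and any path.
  def host=(host)
    @host = host.sub(%r{https?://}, '').split('/').first
  end
end

uri = URI.parse('https://capsule.example.com:8443')

bare = SketchConfiguration.new { |c| c.host = uri.host }
kept = SketchConfiguration.new { |c| c.host = "#{uri.host}:#{uri.port}" }

puts SketchConfiguration.new.host  # capsule.example.com:8443
puts bare.host                     # capsule.example.com
puts kept.host                     # capsule.example.com:8443
```

The setter keeps a port when it is given one. Whatever default the class starts with, the value assigned inside the block is what the client ends up using.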

Step 5. Following the call stack into Katello

The log also included the Ruby call stack at the moment Configuration was created:

[PulpRpmClient::Configuration] initialize caller:

/usr/share/gems/gems/katello-4.18.1/app/models/katello/concerns/smart_proxy_extensions.rb:332:in `new'

/usr/share/gems/gems/katello-4.18.1/app/models/katello/concerns/smart_proxy_extensions.rb:332:in `pulp3_configuration'

/usr/share/gems/gems/katello-4.18.1/app/services/katello/pulp3/api/core.rb:33:in `api_client'

/usr/share/gems/gems/katello-4.18.1/app/services/katello/pulp3/api/core.rb:43:in `remotes_api'

/usr/share/gems/gems/katello-4.18.1/app/services/katello/pulp3/api/yum.rb:42:in `get_remotes_api'

/usr/share/gems/gems/katello-4.18.1/app/services/katello/pulp3/service_common.rb:12:in `block in create_remote'

...
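The snippet that produced this stack is not shown above. One way to get such output, sketched here with a hypothetical TracedConfiguration class and a plain Logger instead of Rails.logger, is Ruby's Kernel#caller:

```ruby
require 'logger'
require 'stringio'

class TracedConfiguration
  def initialize(logger)
    # caller returns the current call stack as an array of "file:line:in ..." strings.
    logger.warn("initialize caller:\n" + caller.first(10).join("\n"))
  end
end

# Hypothetical wrapper, so the stack has a recognisable frame in it.
def build_configuration(logger)
  TracedConfiguration.new(logger)
end

log_output = StringIO.new
build_configuration(Logger.new(log_output))
puts log_output.string
```

Limiting the stack with first(10) keeps the log readable while still reaching the application frames that matter.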

We opened /usr/share/gems/gems/katello-4.18.1/app/models/katello/concerns/smart_proxy_extensions.rb and found:

def pulp3_configuration(config_class)

config_class.new do |config|

uri = pulp3_uri!

config.host = uri.host

config.scheme = uri.scheme

pulp3_ssl_configuration(config)

config.debugging = ::Foreman::Logging.logger('katello/pulp_rest').debug?

config.timeout = SETTINGS[:katello][:rest_client_timeout]

config.logger = ::Foreman::Logging.logger('katello/pulp_rest')

config.username = self.setting(PULP3_FEATURE, 'username')

config.password = self.setting(PULP3_FEATURE, 'password')

end

end

And:

def pulp3_uri!

url = self.setting(PULP3_FEATURE, 'pulp_url')

fail "Cannot determine pulp3 url, check smart proxy configuration" unless url

URI.parse(url)

end

We verified that pulp3_uri! returns the correct URL with port 8443 for each proxy:

SmartProxy.

joins(:features).

where(features: { name: 'Pulpcore' }).

find_each do |sp|

uri = sp.send(:pulp3_uri!)

puts "SmartProxy ##{sp.id} #{sp.name}"

puts " url: #{sp.url.inspect}"

puts " pulp3_uri!: #{uri.to_s.inspect}"

puts " host=#{uri.host.inspect} port=#{uri.port.inspect} path=#{uri.path.inspect}"

end

Output:

SmartProxy #2 katello-na-01

url: "https://10-221-151-100.redacted:9090"

pulp3_uri!: "https://10-221-151-100.redacted:8443"

host="10-221-151-100.redacted" port=8443 path=""

SmartProxy #5 katello-na-02

url: "https://10-221-143-136.redacted:9090"

pulp3_uri!: "https://10-221-143-136.redacted:8443"

host="10-221-143-136.redacted" port=8443 path=""

SmartProxy #6 katello-proxy-kc-01

url: "https://10-221-150-61.redacted:9090"

pulp3_uri!: "https://10-221-150-61.redacted"

host="10-221-150-61.redacted" port=443 path=""

SmartProxy #7 katello-proxy-kc-02

url: "https://10-221-137-125.redacted:9090"

pulp3_uri!: "https://10-221-137-125.redacted"

host="10-221-137-125.redacted" port=443 path=""

So:

- The Pulp URL stored in the smart proxy settings (pulp_url) already includes :8443 where appropriate.
- However, pulp3_configuration uses uri.host, which discards the port.
- The PulpRpmClient::Configuration then ends up with host set to the bare hostname and no port, so the client targets port 443.
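The same check can be run without a Foreman console. The sketch below takes the four pulp_url values from the output above (hostnames as redacted there) and flags the ones whose port would be lost by copying only uri.host. URI#default_port is standard library; port_lost? is a hypothetical helper name.

```ruby
require 'uri'

PULP_URLS = {
  'katello-na-01'       => 'https://10-221-151-100.redacted:8443',
  'katello-na-02'       => 'https://10-221-143-136.redacted:8443',
  'katello-proxy-kc-01' => 'https://10-221-150-61.redacted',
  'katello-proxy-kc-02' => 'https://10-221-137-125.redacted'
}.freeze

# True when the URL carries a port that differs from the scheme default,
# i.e. when copying only uri.host would change the endpoint.
def port_lost?(url)
  uri = URI.parse(url)
  uri.port != uri.default_port
end

PULP_URLS.each do |name, url|
  status = port_lost?(url) ? 'affected' : 'not affected'
  puts "#{name}: #{status}"
end
# katello-na-01: affected
# katello-na-02: affected
# katello-proxy-kc-01: not affected
# katello-proxy-kc-02: not affected
```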

That is the core bug.

Our earlier attempts to fix this inside PulpRpmClient::Configuration#host= did not help, because the loss of port happens before that, when config.host = uri.host is called.

Step 6. The actual fix that solved everything

We fixed the problem by patching pulp3_configuration to preserve the port when it is non standard.

Original:

def pulp3_configuration(config_class)

config_class.new do |config|

uri = pulp3_uri!

config.host = uri.host

config.scheme = uri.scheme

pulp3_ssl_configuration(config)

...

end

end

Patched:

def pulp3_configuration(config_class)

config_class.new do |config|

uri = pulp3_uri!

# Preserve port if it is non default

host_with_port =

if uri.port && ![80, 443].include?(uri.port)

"#{uri.host}:#{uri.port}"

else

uri.host

end

config.host = host_with_port

config.scheme = uri.scheme

# If pulp_url ever contains a path, also honor it

if uri.path && !uri.path.empty? && uri.path != '/'

config.base_path = uri.path

end

pulp3_ssl_configuration(config)

config.debugging = ::Foreman::Logging.logger('katello/pulp_rest').debug?

config.timeout = SETTINGS[:katello][:rest_client_timeout]

config.logger = ::Foreman::Logging.logger('katello/pulp_rest')

config.username = self.setting(PULP3_FEATURE, 'username')

config.password = self.setting(PULP3_FEATURE, 'password')

end

end

After restarting Foreman and Dynflow services, we repeated the test and saw in the log:

[PulpRpmClient::Configuration] initialize(before yield): object_id=505800 scheme=https host=10-221-143-136.redacted:8443 ...

[PulpRpmClient::Configuration] host= called with "10-221-143-136.redacted:8443", cleaned="10-221-143-136.redacted:8443"

[PulpRpmClient::Configuration] initialize(after yield): object_id=505800 scheme=https host=10-221-143-136.redacted:8443 ...

Now the host keeps the port 8443 all the way through. Creating a repository from the UI no longer produces the Faraday SSL error and the Pulp remote is created successfully, just as it is when we drive it from the console.

What we tried that did not work

For completeness, here are some of the things we tried that turned out to be red herrings or only partly helpful:

- Editing pulp_url in /etc/foreman/plugins/katello.yaml

We tried adding a pulp_url there, but that is not where Katello reads the Pulp URL for Pulpcore. The source of truth is the Smart Proxy settings for the Pulpcore feature (setting(PULP3_FEATURE, 'pulp_url')).

- Tweaking pulpcore.yaml on the proxy

We changed fields like :pulp_url and :content_app_url to include variations of the host, including dummy suffixes like cloudsdf:8443, then searched logs. These URLs did not show up in the Pulp RPM client path that was failing. That file controls the proxy side and content serving, not the client library used by Katello to talk to Pulpcore.

- Overriding PulpRpmClient::Configuration#host= to force :8443

We tried:

def host=(host)

cleaned = host.sub(/https?:\/\//, '').split('/').first

if cleaned == '10-221-143-136.redacted'

cleaned = '10-221-143-136.redacted:8443'

end

@host = cleaned

end

This did not solve the UI path because the real problem was that Katello was feeding in uri.host, which had already lost the port. On top of that, this approach is brittle and infrastructure specific.

- Assuming a TLS CA problem

We checked and set ssl_ca_file and ssl_verify in configuration.rb and verified that console calls worked, which made it very tempting to blame CA trust. The logging later made clear that the real failure was at the TCP/TLS endpoint level, not CA trust.

Proposed change for Katello upstream

From the perspective of the Katello codebase, the bug is that pulp3_configuration ignores the port that is already present in the pulp_url smart proxy setting.

pulp3_uri! already correctly returns a URI object that includes a port. For example:

pulp_url = 'https://capsule.example.com:8443'

uri = URI.parse(pulp_url)

uri.host # => "capsule.example.com"

uri.port # => 8443

Current code:

config.host = uri.host # loses port information

config.scheme = uri.scheme

Expected behavior:

- If pulp_url contains an explicit non default port, the Pulp client should connect to that port.
- If pulp_url does not specify a port, the client should default to 443 for https and 80 for http.
- If pulp_url contains a path, the Pulp client configuration should set base_path accordingly.

Suggested patch:

def pulp3_configuration(config_class)

config_class.new do |config|

uri = pulp3_uri!

host_with_port =

if uri.port && ![80, 443].include?(uri.port)

"#{uri.host}:#{uri.port}"

else

uri.host

end

config.host = host_with_port

config.scheme = uri.scheme

if uri.path && !uri.path.empty? && uri.path != '/'

config.base_path = uri.path

end

pulp3_ssl_configuration(config)

config.debugging = ::Foreman::Logging.logger('katello/pulp_rest').debug?

config.timeout = SETTINGS[:katello][:rest_client_timeout]

config.logger = ::Foreman::Logging.logger('katello/pulp_rest')

config.username = self.setting(PULP3_FEATURE, 'username')

config.password = self.setting(PULP3_FEATURE, 'password')

end

end

This is backward compatible and respects the administrator defined pulp_url setting, including non default ports.
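One caveat with the hard coded [80, 443] list: it also drops port 80 on an https URL, or 443 on an http URL, where the port is not the scheme default. A possible refinement, offered as a suggestion that has not been tested against Katello and is not what Katello ships, is to compare against URI#default_port. host_with_port is a hypothetical helper name and the hostname is a placeholder:

```ruby
require 'uri'

# Returns "host:port" when the port differs from the scheme default, else "host".
def host_with_port(uri)
  uri.port && uri.port != uri.default_port ? "#{uri.host}:#{uri.port}" : uri.host
end

[
  'https://capsule.example.com:8443',
  'https://capsule.example.com',
  'https://capsule.example.com:443',
  'https://capsule.example.com:80',
  'http://capsule.example.com:8080'
].each do |url|
  puts "#{url} -> #{host_with_port(URI.parse(url))}"
end
# https://capsule.example.com:8443 -> capsule.example.com:8443
# https://capsule.example.com -> capsule.example.com
# https://capsule.example.com:443 -> capsule.example.com
# https://capsule.example.com:80 -> capsule.example.com:80
# http://capsule.example.com:8080 -> capsule.example.com:8080
```

Inside pulp3_configuration this would replace the if/else that builds host_with_port; the rest of the suggested patch stays the same.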

Why this matters

Many environments terminate TLS for Pulpcore on a port that is not 443. For example:

- Reverse proxy in front of Pulp on 8443
- Custom firewall or load balancer rules that keep 443 for Foreman UI and 8443 for back end services

In such setups:

- Pulp content app and Pulp API can sit on any port, not just 8443.
- Smart Proxy pulp_url is correctly defined with :8443.
- Katello currently discards the port via uri.host, and the OpenAPI client then talks TLS to the wrong endpoint on port 443.
- This manifests as a generic Faraday::SSLError with “record layer failure”, which is misleading without deep logging.

Honoring the port from pulp_url avoids that failure, aligns UI behavior with what we can already do in the console, and makes Katello more robust for varied infrastructure designs.