Problem: Schedule Remote Job fails on CentOS 8 / Foreman 2.1rc2

Expected outcome: Same system build procedure works on CentOS 7 / Foreman 2.1rc2

Foreman and Proxy versions: 2.1rc2 repositories for CentOS 7 and CentOS 8

Foreman and Proxy plugin versions: 2.1rc2 repositories for CentOS 7 and CentOS 8

Distribution and version: CentOS 8.1 x86_64 (updated with all available patches)

NOTE: I have two files I’d like to attach to this posting (build notes and journalctl log), but new users are not permitted to upload attachments (a reasonable restriction). So I hope this post has enough details.

Greetings,

I’m new to Foreman, but not to programming and system administration related to provisioning and, mostly, automation. I have been doing that for over two decades.

I’m starting on the bleeding edge with CentOS 8.1 and Foreman 2.1rc{1,2}, and have managed to get a Foreman VM up and going for the provisioning aspects. I moved on to Remote Execution as it is a prerequisite for the Ansible functionality, which I would like to explore. (I also have a Katello integration question as a postscript.)

I have created detailed notes on the build procedure used to reproduce the problem in a clean (and minimal) environment, but would like assistance in debugging the problem (I’m not a Ruby or web developer) before raising it as a bug.

On submitting a “Schedule Remote Job” request on CentOS 8 (but not CentOS 7), the key points as I see them are:

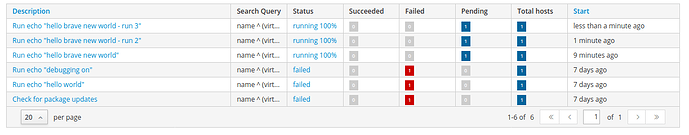

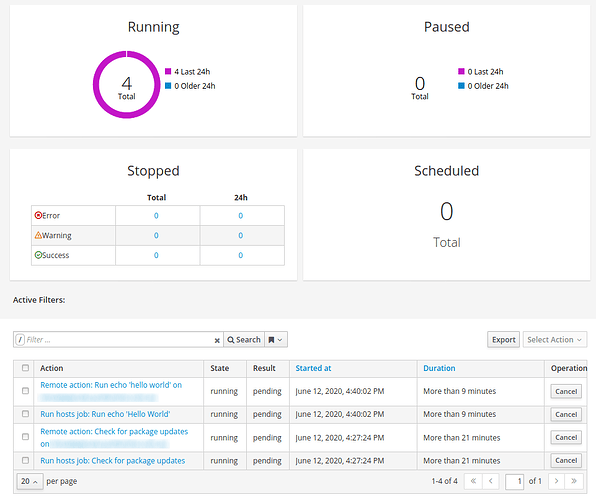

WebUI (CentOS8) - clicking on the host name on job failure:

1: Initialization error: RestClient::NotFound - 404 Not Found

2: Failed to initialize: TypeError - Value (ActiveSupport::TimeWithZone) '2020-06-05 15:10:46 +1000' is not any of: (Time | NilClass).

From journalctl (see details further down), instances of:

‘ERROR -- /client-dispatcher: Could not find an executor for Dynflow::Dispatcher::Envelope’

/var/log/foreman-proxy/proxy.log (CentOS8):

dateTtime hexcode Started GET /version

dateTtime hexcode Finished GET /version with 200 (0.35 ms)

dateTtime hexcode Started POST /dynflow/tasks/launch

dateTtime hexcode Finished POST /dynflow/tasks/launch with 404 (16.86 ms) ***

dateTtime hexcode Started POST /dynflow/tasks/

dateTtime hexcode Finished POST /dynflow/tasks/ with 500 (34.28 ms) ***

dateTtime hexcode Started GET /dynflow/tasks/status

dateTtime hexcode Finished GET /dynflow/tasks/status with 404 (21.94 ms) ***

dateTtime hexcode Started POST /dynflow/tasks/launch

dateTtime hexcode Finished POST /dynflow/tasks/launch with 404 (13.92 ms) ***

dateTtime hexcode Started POST /dynflow/tasks/

dateTtime hexcode Finished POST /dynflow/tasks/ with 500 (33.12 ms) ***
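For anyone who wants to pull the same failing requests out of their own proxy.log, here is a minimal sketch. It assumes the "Finished METHOD PATH with STATUS (N ms)" line shape shown in the excerpt above; the function name `failed_requests` and the sample lines are illustrative only and are not part of Foreman.

```python
import re
from collections import Counter

# Matches "Finished <METHOD> <PATH> with <STATUS> (" as seen in the excerpt.
# The leading timestamp/request-id fields are ignored (assumed free-form).
FINISHED = re.compile(r"Finished (\S+) (\S+) with (\d{3}) \(")


def failed_requests(lines):
    """Count (method, path, status) triples whose status is 400 or higher."""
    counts = Counter()
    for line in lines:
        match = FINISHED.search(line)
        if match and int(match.group(3)) >= 400:
            key = (match.group(1), match.group(2), int(match.group(3)))
            counts[key] += 1
    return counts


# Sample lines copied from the excerpt (placeholders left as posted).
sample = [
    "dateTtime hexcode Started GET /version",
    "dateTtime hexcode Finished GET /version with 200 (0.35 ms)",
    "dateTtime hexcode Finished POST /dynflow/tasks/launch with 404 (16.86 ms)",
    "dateTtime hexcode Finished POST /dynflow/tasks/ with 500 (34.28 ms)",
    "dateTtime hexcode Finished GET /dynflow/tasks/status with 404 (21.94 ms)",
    "dateTtime hexcode Finished POST /dynflow/tasks/launch with 404 (13.92 ms)",
]

for (method, path, status), count in sorted(failed_requests(sample).items()):
    print(f"{status} {method} {path} x{count}")
```

To run it against a real log, the `sample` list could be replaced with an open file handle on /var/log/foreman-proxy/proxy.log, since the function only needs an iterable of lines.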

/var/log/foreman-proxy/smart_proxy_dynflow_core.log (CentOS8):

IP - - Date/Time "GET /tasks/count?state=running HTTP/1.1" 200 29

IP - - Date/Time "POST /tasks/launch? HTTP/1.1" 404 46 ***

IP - - Date/Time "POST /tasks/? HTTP/1.1" 500 153486 ***

IP - - Date/Time "GET /tasks/status? HTTP/1.1" 404 555 ***

IP - - Date/Time "POST /tasks/launch? HTTP/1.1" 404 46 ***

IP - - Date/Time "POST /tasks/? HTTP/1.1" 500 153525 ***

IP - - Date/Time "GET /tasks/count?state=running HTTP/1.1" 200 29

IP - - Date/Time "POST /tasks/launch? HTTP/1.1" 404 46 ***

IP - - Date/Time "POST /tasks/? HTTP/1.1" 500 153524 ***

IP - - Date/Time "GET /tasks/status? HTTP/1.1" 404 555 ***

IP - - Date/Time "POST /tasks/launch? HTTP/1.1" 404 46 ***

IP - - Date/Time "POST /tasks/? HTTP/1.1" 500 153524 ***

From journalctl | egrep -i 'dynflow|proxy|foreman' (CentOS8):

timestamp hostname smart-proxy[9550]: IPv4Address - - [05/Jun/2020:15:02:31 AEST] "POST /dynflow/tasks/launch HTTP/1.1" 404 46

timestamp hostname smart-proxy[9550]: - -> /dynflow/tasks/launch

timestamp hostname smart-proxy[9550]: IPv4Address - - [05/Jun/2020:15:02:31 AEST] "POST /dynflow/tasks/ HTTP/1.1" 500 153525

timestamp hostname smart-proxy[9550]: - -> /dynflow/tasks/

timestamp hostname dynflow-sidekiq@worker[9431]: E, [2020-06-05T15:02:31.678240 #9431] ERROR -- /client-dispatcher: Could not find an executor for Dynflow::Dispatcher::Envelope[request_id: 118e2f8d-9b56-4063-844d-9e9a4d96fb37-4, sender_id: 118e2f8d-9b56-4063-844d-9e9a4d96fb37, receiver_id: Dynflow::Dispatcher::UnknownWorld, message: Dynflow::Dispatcher::Event[execution_plan_id: 2f734f26-5a69-4be1-bdcf-d82d2e288559, step_id: 3, event: #<Actions::ProxyAction::ProxyActionStopped:0x0000559e3a57cf00>, time: ]] (Dynflow::Error) ***

timestamp hostname dynflow-sidekiq@worker[9431]: client_dispatcher.rb:147:in `dispatch_request'

timestamp hostname dynflow-sidekiq@worker[9431]: client_dispatcher.rb:118:in `block (2 levels) in publish_request'

timestamp hostname dynflow-sidekiq@worker[9431]: client_dispatcher.rb:206:in `track_request'

timestamp hostname dynflow-sidekiq@worker[9431]: client_dispatcher.rb:117:in `block in publish_request'

timestamp hostname dynflow-sidekiq@worker[9431]: client_dispatcher.rb:248:in `with_ping_request_caching'

timestamp hostname dynflow-sidekiq@worker[9431]: client_dispatcher.rb:116:in `publish_request'

timestamp hostname dynflow-sidekiq@worker[9431]: [ concurrent-ruby ]

…

Note: client_dispatcher.rb is actually /usr/share/gems/gems/dynflow-1.4.4/lib/dynflow/dispatcher/client_dispatcher.rb

I then enabled ‘:log_level: DEBUG’ in /etc/smart_proxy_dynflow_core/settings.yml and /etc/foreman-proxy/settings.yml, and have included the output in the attached build procedure, but the above are the highlights.

Key Points: Foreman 2.1rc2 / CentOS 8 / Remote Execution → Fails

I hope that the details provided in the attached text file will give anyone able to help a clear view of the steps taken to date and the results seen on CentOS 7 (working) versus CentOS 8 (not working).

Many Thanks in Advance,

Peter

Sydney, Australia

PS: One quick question, if you don’t mind: it is my understanding from my reading to date that Katello and Pulp (which worked out of the box on CentOS 7) are intended to run on a separate system from the main Foreman instance. Is this correct? And if so, does anyone have a pointer to a write-up on the design of the larger system and the procedure to integrate Foreman and Katello/Pulp?