As the saying goes: If it is not tested, it doesn’t work.

And another saying goes: Testing can only show the presence of bugs, never their absence.

Summary: Ansible (“Run all ansible roles”) fails on CentOS 8

After installing the Ansible features as described above on both the CentOS 7.8 and CentOS 8.2 VMs mentioned earlier in this posting, we have the following installed:

CentOS 7:

sudo yum list installed | grep ansible

ansible.noarch 2.9.9-1.el7 @epel

ansible-runner.noarch 1.4.6-1.el7 @ansible-runner

python2-ansible-runner.noarch 1.4.6-1.el7 @ansible-runner

python2-daemon.noarch 2.1.2-7.el7at @ansible-runner

python2-pexpect.noarch 4.6-1.el7at @ansible-runner

python2-ptyprocess.noarch 0.5.2-3.el7at @ansible-runner

tfm-rubygem-foreman_ansible.noarch 5.0.1-1.fm2_1.el7 @foreman-plugins

tfm-rubygem-foreman_ansible_core.noarch 3.0.3-1.fm2_1.el7 @foreman-plugins

tfm-rubygem-hammer_cli_foreman_ansible.noarch

tfm-rubygem-smart_proxy_ansible.noarch 3.0.1-5.fm2_1.el7 @foreman-plugins

CentOS 8:

sudo yum list installed | grep ansible

ansible.noarch 2.9.9-2.el8 @ansible

ansible-runner.noarch 1.4.6-1.el8 @ansible-runner

centos-release-ansible-29.noarch 1-2.el8 @extras

python3-ansible-runner.noarch 1.4.6-1.el8 @ansible-runner

python3-daemon.noarch 2.1.2-9.el8ar @ansible-runner

python3-lockfile.noarch 1:0.11.0-8.el8ar @ansible-runner

python3-pexpect.noarch 4.6-2.el8ar @ansible-runner

rubygem-foreman_ansible.noarch 5.1.1-1.fm2_1.el8 @foreman-plugins

rubygem-foreman_ansible_core.noarch 3.0.3-1.fm2_1.el8 @foreman-plugins

rubygem-hammer_cli_foreman_ansible.noarch 0.3.2-1.fm2_1.el8 @foreman-plugins

rubygem-smart_proxy_ansible.noarch 3.0.1-5.fm2_1.el8 @foreman-plugins

sshpass.x86_64 1.06-8.el8 @ansible
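Comparing the two listings by eye is error-prone, so here is a minimal sketch of a helper that turns `yum list installed` output into sorted "name version" pairs that can be diffed. It is not part of Foreman or yum. The function name `normalize_pkgs` is hypothetical, and it assumes that only `noarch`/`x86_64` packages matter and that stripping the EL7 `tfm-` SCL prefix and the `.el7*`/`.el8*` dist tag is enough to line the names up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: normalize `yum list installed` output into sorted
# "name version" pairs so the CentOS 7 and CentOS 8 lists can be diffed.
# Assumptions: only noarch/x86_64 packages; the EL7 "tfm-" prefix and the
# ".el7*"/".el8*" dist tag are removed so that names and versions line up.
normalize_pkgs() {
  awk '
    function emit(name, ver) {
      sub(/\.(noarch|x86_64)$/, "", name)   # drop the arch suffix
      sub(/^tfm-/, "", name)                # drop the EL7 SCL prefix
      sub(/\.el[0-9].*$/, "", ver)          # drop the dist tag (.el7, .el8ar, ...)
      print name, ver
    }
    # yum wraps long names: the name alone on one line, version and repo on the next.
    NF == 1 && $1 ~ /\.(noarch|x86_64)$/ { held = $1; next }
    NF == 2 && held != ""                { emit(held, $1); held = ""; next }
    NF >= 3 && $1 ~ /\.(noarch|x86_64)$/ { emit($1, $2) }
  ' "$@" | sort
}
```

Usage (hypothetical file names): on each VM save the full list with `yum list installed > el7.txt` (or `el8.txt`), copy both files to one machine, then run `diff <(normalize_pkgs el7.txt | grep ansible) <(normalize_pkgs el8.txt | grep ansible)`. Filtering after normalizing matters: yum wraps long package names onto two lines, and running `grep ansible` on the raw output drops the continuation line, which may be why one entry in the CentOS 7 list above shows no version.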

Unless stated otherwise, commands are being performed on both VMs.

sudo find /etc -name ansible.cfg

/etc/foreman-proxy/ansible.cfg

/etc/ansible/ansible.cfg

sudo egrep -v '^#' /etc/foreman-proxy/ansible.cfg

sudo egrep -v '^#' /etc/ansible/ansible.cfg | uniq
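`egrep -v '^#'` keeps blank lines (hence the `uniq` above) and does not catch indented comments or lines starting with `;`, which INI-style files such as ansible.cfg also treat as comments. A minimal sketch of a stricter filter follows; the function name `effective_cfg` is hypothetical. On Ansible 2.9, `ansible-config dump --only-changed` is another way to see which settings differ from the defaults.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print only the lines an INI-style ansible.cfg actually
# uses, dropping blank lines and full-line comments that start with '#' or ';'
# (optionally indented). With no file arguments, grep reads stdin.
effective_cfg() {
  grep -Ev '^[[:space:]]*([#;]|$)' -- "$@"
}
```

Usage: `sudo cat /etc/foreman-proxy/ansible.cfg | effective_cfg`, since a shell function is not visible to `sudo` itself.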

As a section heading can only appear once in ansible.cfg, and the package-provided configuration contains no actual (uncommented) settings for ansible, let’s simply replace it with the one foreman-installer generated.
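To see which sections would collide if the two files were simply concatenated instead of replaced, a small check is enough. This is a minimal sketch; the function name `dup_sections` is hypothetical, and it only looks at lines that start with a bracketed section name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list INI section headers (e.g. "[defaults]") that
# occur more than once across the given files, i.e. the sections that would
# be duplicated if the files were merged into a single ansible.cfg.
dup_sections() {
  grep -hE '^[[:space:]]*\[[^]]+\]' -- "$@" \
    | sed -E 's/^[[:space:]]*(\[[^]]+\]).*/\1/' \
    | sort | uniq -d
}
```

Usage, as a user who can read both files: `dup_sections /etc/ansible/ansible.cfg /etc/foreman-proxy/ansible.cfg`. An empty result means no section heading would be repeated.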

sudo cp /etc/ansible/ansible.cfg /etc/ansible/ansible.cfg.orig

sudo cp /etc/foreman-proxy/ansible.cfg /etc/ansible/ansible.cfg

ls -l /etc/foreman-proxy/ansible.cfg /etc/ansible/ansible.cfg
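One caveat with the two `cp` commands above: running them a second time overwrites `ansible.cfg.orig` with the already-replaced file, losing the package version. A minimal sketch of an idempotent variant follows; the function name `replace_cfg` is hypothetical. Command-line ansible uses `/etc/ansible/ansible.cfg` only if `ANSIBLE_CONFIG`, `./ansible.cfg` and `~/.ansible.cfg` are absent, so the `config file =` line of `ansible --version` is worth checking afterwards.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace DST with SRC, keeping a one-time backup of DST.
replace_cfg() {
  local src="$1" dst="$2"
  # Create the backup only once, so re-running never overwrites the
  # original package-provided file with an already-replaced copy.
  if [ ! -e "$dst.orig" ]; then
    cp -p -- "$dst" "$dst.orig" || return 1
  fi
  cp -- "$src" "$dst" || return 1
  # Succeed only if the new file is byte-identical to the source.
  cmp -s "$src" "$dst"
}

# Example (from a root shell on the Foreman host):
#   replace_cfg /etc/foreman-proxy/ansible.cfg /etc/ansible/ansible.cfg
#   ansible --version | grep 'config file'
```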

Verify that command-line ansible is functional:

ansible -m ping localhost

localhost | SUCCESS => {

"changed": false,

"ping": "pong"

}

ansible -a 'cat /etc/redhat-release' localhost

localhost | CHANGED | rc=0 >>

CentOS 7: CentOS Linux release 7.8.2003 (Core)

CentOS 8: CentOS Linux release 8.2.2004 (Core)
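When repeating this check on both VMs, the ping result can be tested mechanically instead of read. This is a minimal sketch; the function name `check_ping_output` is hypothetical and simply looks for the `SUCCESS` status and the `"ping": "pong"` reply shown above. ansible itself also exits non-zero when a host fails or is unreachable, so checking `$?` is an alternative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ansible -m ping` output on stdin and succeed
# only if it reports SUCCESS and the "pong" reply.
check_ping_output() {
  local out
  out=$(cat)
  case $out in
    *"| SUCCESS =>"*) ;;
    *) return 1 ;;
  esac
  case $out in
    *'"ping": "pong"'*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example:
#   ansible -m ping localhost | check_ping_output && echo "command-line ansible OK"
```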

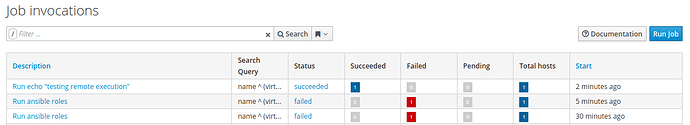

I then selected WebUI → Hosts → All Hosts → Select Action: Run all ansible roles (which runs against the Foreman host itself).

This completed successfully after a few seconds on CentOS 7 but failed on CentOS 8.

Given that I had not updated either system for a few days, I ran sudo yum upgrade on both systems. Only CentOS 7 had updates to apply; they are listed at the end of this posting. I followed this with sudo foreman-maintain service restart on BOTH systems.
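Whether a system has pending updates can be checked before upgrading: `yum check-update` (and `dnf check-update`, which provides `yum` on CentOS 8) exits with 100 when updates are available, 0 when there are none, and 1 on error. A minimal sketch of interpreting that exit code follows; the function name `describe_check_update_rc` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: translate the exit code of `yum check-update`
# (100 = updates available, 0 = none, anything else = error).
describe_check_update_rc() {
  case "$1" in
    0)   echo "no updates" ;;
    100) echo "updates available" ;;
    *)   echo "error (rc=$1)"; return 1 ;;
  esac
}

# Example on each VM:
#   sudo yum -q check-update; describe_check_update_rc $?
#   # then, as in this posting:
#   sudo yum upgrade && sudo foreman-maintain service restart
```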

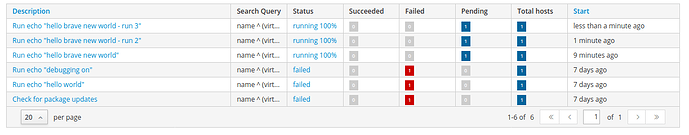

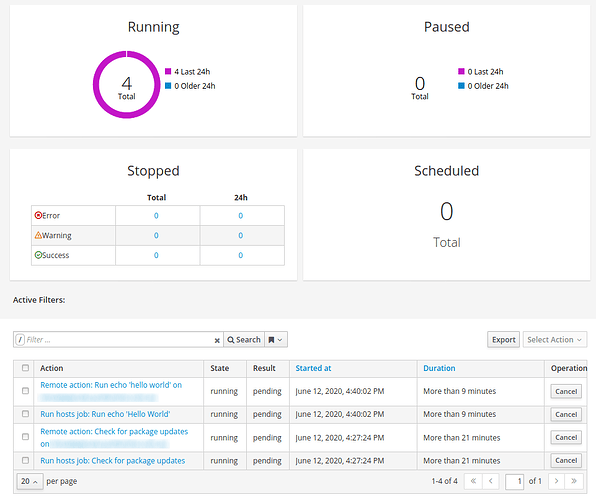

I repeated the test with the same results (CentOS 7: success; CentOS 8: failed). I also tested plain remote execution on its own, and it worked, as can be seen in the following screen snap (from the CentOS 8 WebUI):

I reviewed the backtrace in each failure and they are identical (no differences at all). One of them is attached here:

CentOS8-Run1.stacktrace.log (9.4 KB)
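Confirming that several saved backtraces are byte-for-byte identical can be done with `cmp` rather than by reading them. This is a minimal sketch; the function name `all_identical` and any file name other than CentOS8-Run1.stacktrace.log are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if every file is byte-identical to the first;
# otherwise print the first file that differs and fail.
all_identical() {
  local first="$1" f
  shift
  for f in "$@"; do
    cmp -s "$first" "$f" || { echo "differs: $f"; return 1; }
  done
  return 0
}

# Example (Run2 is a hypothetical second saved trace):
#   all_identical CentOS8-Run1.stacktrace.log CentOS8-Run2.stacktrace.log
```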

As far as I can see, the following log files add nothing useful:

/var/log/foreman-proxy/smart_proxy_dynflow_core.log

/var/log/foreman-proxy/proxy.log

/var/log/foreman/production.log
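For a quick scan of these logs, grepping for error-like lines with file names and line numbers is one option. This is a minimal sketch; the function name `scan_logs` is hypothetical, and the search pattern is a guess at useful keywords, not a documented Foreman log format.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "file:line:text" for every line that mentions
# error, exception or fail (case-insensitive) in the given log files.
scan_logs() {
  grep -HinE -- 'error|exception|fail' "$@"
}

# Example (from a root shell, since the logs are not world-readable):
#   scan_logs /var/log/foreman-proxy/smart_proxy_dynflow_core.log \
#             /var/log/foreman-proxy/proxy.log /var/log/foreman/production.log
```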

Regards,

Peter

The CentOS 7 packages that were updated:

Updating:

| Package | Arch | Version | Repository | Size |
|---|---|---|---|---|
| tfm-rubygem-foreman-tasks | noarch | 2.0.0-1.fm2_1.el7 | foreman-plugins | 2.2 M |
| tfm-rubygem-foreman_ansible | noarch | 5.1.1-1.fm2_1.el7 | foreman-plugins | 2.0 M |
| tfm-rubygem-foreman_remote_execution | noarch | 3.3.1-1.fm2_1.el7 | foreman-plugins | 1.6 M |

Transaction Summary: Upgrade 3 Packages