Problem:

In my setup I have created a lot of Host Groups using Ansible, setting the LCE, OS settings and so on ('the works'). This used to work fine when deploying newly discovered hosts. My workflow was:

- Boot new lab VM

- Wait for it to show in Foreman

- Click ‘Provision’

- Select Hostgroup, click ‘Customize’

- Set hostname

- Click ‘Submit’

And then Foreman would go its merry way and install an OS on the system.

However, something has changed (I don't know what, as I can't find anything useful about errors in the logs):

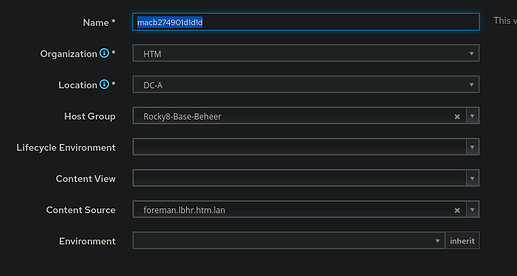

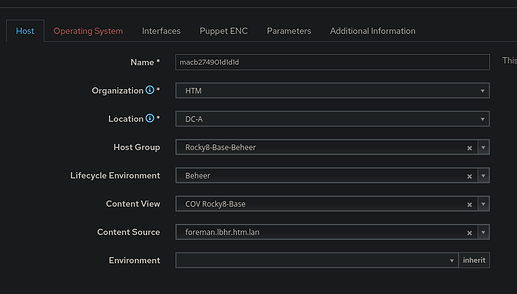

1. Host Group settings are not fully applied on the host customization page

a. The LCE and CV are not set

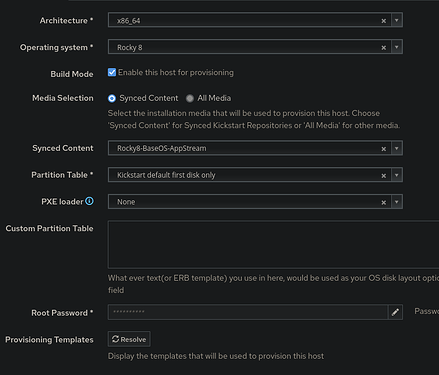

b. The OS tab settings are shown, but not set

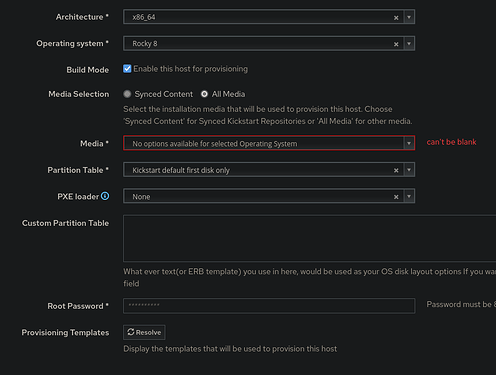

When opening the discovered host's detail page, I noticed that the LCE and CV fields are empty, while the OS settings are shown as configured on the Host Group. However, when I click 'Submit' after setting the hostname, I get errors on the fields of the OS tab, because they are now magically empty. Below are the full steps I take:

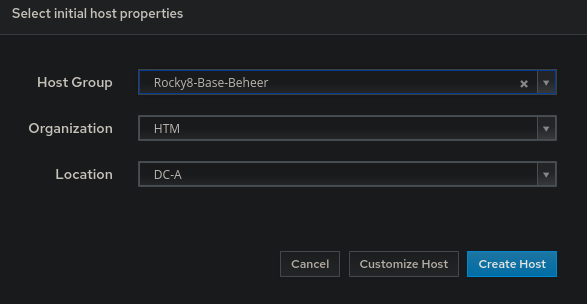

- Select Host, click ‘Provision’

- Set the Host Group and click ‘Customize’

- The LCE and CV fields are now empty (while the Host Group has them defined)

- Blissfully ignoring that and moving on to the OS settings, I see all settings are present as defined on the Host Group. Click 'Submit'

- See the error and changed settings on the same page

- Go back to the first tab, LCE and CV are suddenly present…

2. This ends in a 404

This procedure has mostly the same results as the pictures above, so I didn't make them again.

- Select Host, click ‘Provision’

- Do not set a Host Group, just click ‘Customize’

- Set the Host Group and check the settings (both Host and OS): all in order

- Click ‘Submit’ → 404

- Check /var/log/foreman/production.log:

```
2021-11-14T23:04:16 [I|app|09b5af1b] Started POST "/api/v2/discovered_hosts/facts" for 192.168.255.154 at 2021-11-14 23:04:16 +0100
2021-11-14T23:04:16 [I|app|09b5af1b] Processing by Api::V2::DiscoveredHostsController#facts as JSON
2021-11-14T23:04:16 [I|app|09b5af1b] Parameters: {"facts"=>"[FILTERED]", "apiv"=>"v2", "discovered_host"=>{"facts"=>"[FILTERED]"}}
2021-11-14T23:04:16 [I|app|09b5af1b] Import facts for 'macb274901d1d1d' completed. Added: 0, Updated: 0, Deleted 0 facts
2021-11-14T23:04:16 [I|app|09b5af1b] Detected IPv4 subnet: Beheer with taxonomy ["HTM"]/["DC-A"]
2021-11-14T23:04:16 [I|app|09b5af1b] Assigned location: DC-A
2021-11-14T23:04:16 [I|app|09b5af1b] Assigned organization: HTM
2021-11-14T23:04:16 [I|app|09b5af1b] Completed 201 Created in 147ms (Views: 0.9ms | ActiveRecord: 35.0ms | Allocations: 44472)
2021-11-14T23:04:23 [I|app|cb29cbce] Started PATCH "/hosts/4" for 192.168.255.1 at 2021-11-14 23:04:23 +0100
2021-11-14T23:04:23 [I|app|cb29cbce] Processing by HostsController#update as */*
2021-11-14T23:04:23 [I|app|cb29cbce] Parameters: {"utf8"=>"✓", "authenticity_token"=>"dFOTYcDiBxzb/pKZwBjaCcckJveAjBgkeogEdq6RmlaG6iNxE+olHtwVhS55EiWEzK0kOim5RjyEbP1cTpzWPg==", "host"=>{"name"=>"macb274901d1d1d", "hostgroup_id"=>"1", "content_facet_attributes"=>{"lifecycle_environment_id"=>"2", "content_view_id"=>"12", "content_source_id"=>"1", "kickstart_repository_id"=>"53"}, "puppet_attributes"=>{"environment_id"=>""}, "managed"=>"true", "progress_report_id"=>"[FILTERED]", "type"=>"Host::Managed", "interfaces_attributes"=>{"0"=>{"_destroy"=>"0", "mac"=>"b2:74:90:1d:1d:1d", "identifier"=>"eth0", "name"=>"macb274901d1d1d", "domain_id"=>"1", "subnet_id"=>"1", "ip"=>"192.168.255.154", "ip6"=>"", "managed"=>"1", "primary"=>"1", "provision"=>"1", "execution"=>"1", "tag"=>"", "attached_to"=>"", "id"=>"4"}}, "architecture_id"=>"1", "operatingsystem_id"=>"2", "build"=>"1", "medium_id"=>"", "ptable_id"=>"167", "pxe_loader"=>"None", "disk"=>"", "root_pass"=>"[FILTERED]", "is_owned_by"=>"4-Users", "enabled"=>"1", "model_id"=>"1", "comment"=>"", "overwrite"=>"false"}, "media_selector"=>"synced_content", "id"=>"4"}
2021-11-14T23:04:23 [I|app|cb29cbce] Rendering common/404.html.erb within layouts/application
2021-11-14T23:04:23 [I|app|cb29cbce] Rendered common/404.html.erb within layouts/application (Duration: 3.5ms | Allocations: 5823)
2021-11-14T23:04:23 [I|app|cb29cbce] Rendered layouts/_application_content.html.erb (Duration: 3.0ms | Allocations: 5608)
2021-11-14T23:04:23 [I|app|cb29cbce] Rendering layouts/base.html.erb
2021-11-14T23:04:23 [I|app|cb29cbce] Rendered layouts/base.html.erb (Duration: 5.7ms | Allocations: 7609)
2021-11-14T23:04:23 [I|app|cb29cbce] Completed 404 Not Found in 28ms (Views: 16.1ms | ActiveRecord: 2.4ms | Allocations: 28478)
```

Expected outcome: Provision the host without errors (and without requiring the user to redo all the settings that are already defined on the Host Group)

Foreman and Proxy versions: 3.0.1

Foreman and Proxy plugin versions: Katello 4.2

Distribution and version: Rocky 8.4

Other relevant data:

as I’m not tied up in client work then).

Yay, it's still broken (that's not good, but that means I can reproduce it).