After upgrading to Foreman 3.8/Katello 4.10 my synchronization tasks to my proxies are super slow.

Looking back to the tasks using Foreman 3.7/Katello 4.9, after adding 1 package to a repo and syncing that repo and let Foreman sync the meta data of that package to my proxies took 20-60 seconds. With Foreman 3.8/Katello 4.10 it takes ~8 minutes.

My reference is a sync to the proxy that is on the same hardware/network as the Foreman server so no latency to speak of. Anyone else noticed something similar?

Installed Packages

- ansible-collection-theforeman-foreman-3.14.0-1.el8.noarch

- ansiblerole-foreman_scap_client-0.2.0-2.el8.noarch

- candlepin-4.3.1-1.el8.noarch

- candlepin-selinux-4.3.1-1.el8.noarch

- foreman-3.8.0-1.el8.noarch

- foreman-cli-3.8.0-1.el8.noarch

- foreman-debug-3.8.0-1.el8.noarch

- foreman-dynflow-sidekiq-3.8.0-1.el8.noarch

- foreman-installer-3.8.0-2.el8.noarch

- foreman-installer-katello-3.8.0-2.el8.noarch

- foreman-obsolete-packages-1.5-1.el8.noarch

- foreman-postgresql-3.8.0-1.el8.noarch

- foreman-proxy-3.8.0-1.el8.noarch

- foreman-release-3.8.0-1.el8.noarch

- foreman-selinux-3.8.0-1.el8.noarch

- foreman-service-3.8.0-1.el8.noarch

- katello-4.10.0-1.el8.noarch

- katello-certs-tools-2.9.0-2.el8.noarch

- katello-client-bootstrap-1.7.9-2.el8.noarch

- katello-common-4.10.0-1.el8.noarch

- katello-debug-4.10.0-1.el8.noarch

- katello-repos-4.10.0-1.el8.noarch

- katello-selinux-5.0.2-1.el8.noarch

- pulpcore-obsolete-packages-1.0-2.el8.noarch

- pulpcore-selinux-1.3.3-1.el8.x86_64

- python39-pulp-ansible-0.18.1-1.el8.noarch

- python39-pulp-certguard-1.6.5-1.el8.noarch

- python39-pulp-cli-0.21.2-1.el8.noarch

- python39-pulp-container-2.15.2-1.el8.noarch

- python39-pulp-deb-3.0.0-1.el8.noarch

- python39-pulp-file-1.14.3-1.el8.noarch

- python39-pulp-glue-0.21.2-1.el8.noarch

- python39-pulp-python-3.10.0-1.el8.noarch

- python39-pulp-rpm-3.22.6-1.el8.noarch

- python39-pulpcore-3.28.19-1.el8.noarch

- rubygem-foreman-tasks-8.2.0-1.fm3_8.el8.noarch

- rubygem-foreman_ansible-12.0.7-1.fm3_8.el8.noarch

- rubygem-foreman_maintain-1.3.5-1.el8.noarch

- rubygem-foreman_openscap-7.0.0-2.fm3_8.el8.noarch

- rubygem-foreman_remote_execution-11.1.0-1.fm3_8.el8.noarch

- rubygem-hammer_cli-3.8.0-1.el8.noarch

- rubygem-hammer_cli_foreman-3.8.0-1.el8.noarch

- rubygem-hammer_cli_foreman_ansible-0.5.0-1.fm3_7.el8.noarch

- rubygem-hammer_cli_foreman_openscap-0.1.13-2.fm3_5.el8.noarch

- rubygem-hammer_cli_foreman_remote_execution-0.2.3-1.fm3_7.el8.noarch

- rubygem-hammer_cli_foreman_tasks-0.0.19-1.fm3_7.el8.noarch

- rubygem-hammer_cli_katello-1.11.0-0.1.pre.master.20230905173948git1fa5f0c.el8.noarch

- rubygem-katello-4.10.0-1.el8.noarch

- rubygem-pulp_ansible_client-0.18.0-1.el8.noarch

- rubygem-pulp_certguard_client-1.6.5-1.el8.noarch

- rubygem-pulp_container_client-2.15.2-1.el8.noarch

- rubygem-pulp_deb_client-3.0.0-1.el8.noarch

- rubygem-pulp_file_client-1.14.3-1.el8.noarch

- rubygem-pulp_ostree_client-2.1.1-1.el8.noarch

- rubygem-pulp_python_client-3.10.0-1.el8.noarch

- rubygem-pulp_rpm_client-3.22.3-1.el8.noarch

- rubygem-pulpcore_client-3.28.11-1.el8.noarch

- rubygem-smart_proxy_pulp-3.2.0-3.fm3_3.el8.noarch

Does this thread help?

For sure seen the thread but not really sure it is the same issue and also figured it was patched in 3.28.19 that I am running or is there an unpatched issue still?

I can confirm the fix that is meant to reslove the issue from that thread is in pulpcore 3.28.19 which is what you have got, so that should not be it. (Unless the fix does not always work).

Before the fix was ready, there was an alternative workaround, that consistent in reverting a certain commit. (The fix in pulpcore 3.28.19 does not do this). It might be worth applying that workaround one more time just to be sure this is not still the same issue.

This is the change that would need to be reverted: Enhance get_content() by omitting the version_membership lookup by lubosmj · Pull Request #4275 · pulp/pulpcore · GitHub

The other thing that might be worth testing is if a larger smart proxy sync is also much slower now.

A small repo going from 60 seconds to 8 Minutes could be a case of something like: “There is now an extra task that adds an extra fixed 7 Minutes to this action”. This would also not be great, but it would be different from: “All Syncs now take 10 times as long”. (As in a 1 hour sync now takes 10 hours).

Did some of suggested tests but did not have any affect.

I had backups of Foreman and 5 of my proxies with 3.71/4.9.2 so I rolled back the machines, synced all repos and then performed a simple test by just adding one package to one of our production repos and measure the speed the meta data is synced to my proxies all over the world.

se = sweden where the foreman server is located. latency ~3 ms

us = USA, latency ~111 ms

ca = Canada, latency ~177 ms

cl = Chile, latency ~276 ms

au = Australia, latency ~420 ms

With versions 3.8/4.10

Synchronize smart proxy 'seproxy'|stopped|success|December 03, 2023 at 10:25:31 PM 1 minute|

Synchronize smart proxy 'usproxy'|stopped|success|December 03, 2023 at 10:25:34 PM 5 minutes|

Synchronize smart proxy 'caproxy'|stopped|success|December 03, 2023 at 10:25:31 PM 6 minutes|

Synchronize smart proxy 'clproxy'|stopped|success|December 03, 2023 at 10:25:32 PM 9 minutes|

Synchronize smart proxy 'auproxy'|stopped|success|December 03, 2023 at 10:25:35 PM 13 minutes|

With versions 3.71/4.9.2

Synchronize smart proxy 'seproxy' stopped success December 09, 2023 at 09:51:08 PM 5 seconds

Synchronize smart proxy 'usproxy' stopped success December 09, 2023 at 09:51:08 PM 17 seconds

Synchronize smart proxy 'caproxy' stopped success December 09, 2023 at 09:51:06 PM 25 seconds

Synchronize smart proxy 'clproxy' stopped success December 09, 2023 at 09:51:07 PM 36 seconds

Synchronize smart proxy 'auproxy' stopped success December 09, 2023 at 09:51:09 PM 50 seconds

As you can see the performance for 3.8/4.10 is horrific. Each time a package is released in our production repos, the sync is crazy slow and stacks up when several packages + syncs runs quickly after each other. Sadly I did not have full backup of all proxies so I guess to be able to go back to 3.71/4.9.2 on all proxies I need to redeploy 50% of my proxies unless there is a good way to downgrade a proxy…

Installed packages on the 3.71/4.9.2 Forman server.

Installed Packages

ansible-collection-theforeman-foreman-3.13.0-1.el8.noarch

ansiblerole-foreman_scap_client-0.2.0-2.el8.noarch

candlepin-4.3.1-1.el8.noarch

candlepin-selinux-4.3.1-1.el8.noarch

foreman-3.7.1-1.el8.noarch

foreman-cli-3.7.1-1.el8.noarch

foreman-debug-3.7.1-1.el8.noarch

foreman-dynflow-sidekiq-3.7.1-1.el8.noarch

foreman-installer-3.7.1-1.el8.noarch

foreman-installer-katello-3.7.1-1.el8.noarch

foreman-obsolete-packages-1.5-1.el8.noarch

foreman-postgresql-3.7.1-1.el8.noarch

foreman-proxy-3.7.1-1.el8.noarch

foreman-release-3.7.1-1.el8.noarch

foreman-selinux-3.7.1-1.el8.noarch

foreman-service-3.7.1-1.el8.noarch

katello-4.9.2-1.el8.noarch

katello-certs-tools-2.9.0-2.el8.noarch

katello-client-bootstrap-1.7.9-2.el8.noarch

katello-common-4.9.2-1.el8.noarch

katello-debug-4.9.2-1.el8.noarch

katello-repos-4.9.2-1.el8.noarch

katello-selinux-5.0.2-1.el8.noarch

pulpcore-selinux-1.3.2-1.el8.x86_64

python39-pulp-ansible-0.16.0-1.el8.noarch

python39-pulp-certguard-1.5.6-1.el8.noarch

python39-pulp-cli-0.14.0-4.el8.noarch

python39-pulp-container-2.14.7-1.el8.noarch

python39-pulp-deb-2.20.4-1.el8.noarch

python39-pulp-file-1.12.0-1.el8.noarch

python39-pulp-python-3.8.0-1.el8.noarch

python39-pulp-rpm-3.19.9-1.el8.noarch

python39-pulpcore-3.22.15-1.el8.noarch

qpid-proton-c-0.37.0-1.el8.x86_64

rubygem-foreman-tasks-8.1.1-1.fm3_7.el8.noarch

rubygem-foreman_ansible-12.0.6-1.fm3_7.el8.noarch

rubygem-foreman_maintain-1.3.5-1.el8.noarch

rubygem-foreman_openscap-7.0.0-1.fm3_7.el8.noarch

rubygem-foreman_remote_execution-10.1.0-1.fm3_7.el8.noarch

rubygem-hammer_cli-3.7.0-1.el8.noarch

rubygem-hammer_cli_foreman-3.7.0-1.el8.noarch

rubygem-hammer_cli_foreman_ansible-0.5.0-1.fm3_7.el8.noarch

rubygem-hammer_cli_foreman_openscap-0.1.13-2.fm3_5.el8.noarch

rubygem-hammer_cli_foreman_remote_execution-0.2.3-1.fm3_7.el8.noarch

rubygem-hammer_cli_foreman_tasks-0.0.19-1.fm3_7.el8.noarch

rubygem-hammer_cli_katello-1.9.1-1.el8.noarch

rubygem-katello-4.9.2-1.el8.noarch

rubygem-pulp_ansible_client-0.16.1-1.el8.noarch

rubygem-pulp_certguard_client-1.6.5-1.el8.noarch

rubygem-pulp_container_client-2.14.5-1.el8.noarch

rubygem-pulp_deb_client-2.20.2-1.el8.noarch

rubygem-pulp_file_client-1.12.0-1.el8.noarch

rubygem-pulp_ostree_client-2.0.0-1.el8.noarch

rubygem-pulp_python_client-3.8.0-1.el8.noarch

rubygem-pulp_rpm_client-3.19.6-1.el8.noarch

rubygem-pulpcore_client-3.22.4-1.el8.noarch

rubygem-qpid_proton-0.37.0-1.el8.x86_64

rubygem-smart_proxy_pulp-3.2.0-3.fm3_3.el8.noarch

@iballou Is this being looked into?

Is there anyone else I should ping?

@tedevil can you show us what actions in the Dynflow console for your smart proxy sync task are taking all of the time? I’m assuming it’s the actual Pulp 3 content sync but we need to verify that first.

From version 3.8/4.10.

Taking it from the slowest sync in Australia…

3: Actions::Pulp3::ContentGuard::Refresh (success) [ 2.74s / 2.74s ]

5: Actions::Pulp3::Orchestration::Repository::RefreshRepos (success) [ 738.17s / 738.17s ]

7: Actions::Katello::CapsuleContent::SyncCapsule (success) [ 4.50s / 4.50s ]

9: Actions::Pulp3::CapsuleContent::Sync (success) [ 23.43s / 16.95s ]

11: Actions::Pulp3::CapsuleContent::GenerateMetadata (success) [ 2.70s / 2.70s ]

13: Actions::Pulp3::CapsuleContent::RefreshDistribution (success) [ 9.64s / 9.64s ]

And here is one proxy that is in the same site:

3: Actions::Pulp3::ContentGuard::Refresh (success) [ 0.51s / 0.51s ]

5: Actions::Pulp3::Orchestration::Repository::RefreshRepos (success) [ 78.03s / 78.03s ]

7: Actions::Katello::CapsuleContent::SyncCapsule (success) [ 0.49s / 0.49s ]

9: Actions::Pulp3::CapsuleContent::Sync (success) [ 2.37s / 0.81s ]

11: Actions::Pulp3::CapsuleContent::GenerateMetadata (success) [ 0.25s / 0.25s ]

13: Actions::Pulp3::CapsuleContent::RefreshDistribution (success) [ 0.72s / 0.72s ]

It’s very interesting that RefreshRepos is taking all the time and it’s not even the sync. All it really does is create repositories and remote.

@tedevil are you able to share the raw output in Dynflow for Actions::Pulp3::Orchestration::Repository::RefreshRepos ?

That would show us how long the specific Pulp tasks are taking.

If the tasks themselves aren’t taking up the time, then perhaps the amount of API queries increased.

If it’s not a Pulp task taking up all the time, we might need to dig into /var/log/foreman/production.log and /var/log/messages on the Foreman and /var/log/messages on the Smart Proxy to see what specifically is taking up so much time.

Here is the Dynflow attached as a log.

5.Actions.Pulp3.Orchestration.Repository.RefreshRepos.log (84.7 KB)

Looking back in time to on a similar sync task in 3.71/4.9.2 there is only one “pulp_href” in the pulp task compared to 156 of them in 3.8/4.10.

Seems to match the number of repositories I have (156).

Ex.

5: Actions::Pulp3::Orchestration::Repository::RefreshRepos"

5: Actions::Pulp3::Orchestration::Repository::RefreshRepos (success) [ 13.40s / 13.40s ]

Queue: default

Started at: 2023-11-09 13:22:27 UTC

Ended at: 2023-11-09 13:22:40 UTC

Real time: 13.40s

Execution time (excluding suspended state): 13.40s

Input:

---

smart_proxy_id: 7

environment_id:

content_view_id:

repository_id: 33

remote_user: admin

remote_cp_user: admin

current_request_id: ba96c9e5-3a7f-4a6b-8fb8-fa883cf1448f

current_timezone: UTC

current_organization_id:

current_location_id:

current_user_id: 6

Output:

---

pulp_tasks:

- pulp_href: "/pulp/api/v3/tasks/fe333043-8ab1-4f80-b8bb-6879540b19a6/"

pulp_created: '2023-11-09T13:22:39.062+00:00'

state: completed

name: pulpcore.app.tasks.base.general_update

logging_cid: ba96c9e5-3a7f-4a6b-8fb8-fa883cf1448f

started_at: '2023-11-09T13:22:39.110+00:00'

finished_at: '2023-11-09T13:22:39.133+00:00'

worker: "/pulp/api/v3/workers/e486bd25-9a8c-408b-b278-f1985a761625/"

child_tasks: []

progress_reports: []

created_resources: []

reserved_resources_record:

- "/pulp/api/v3/remotes/rpm/rpm/fec7c69c-8bbd-4b52-9ab9-df39c16cbbfa/"

task_groups: []

Chunked output:

--- []

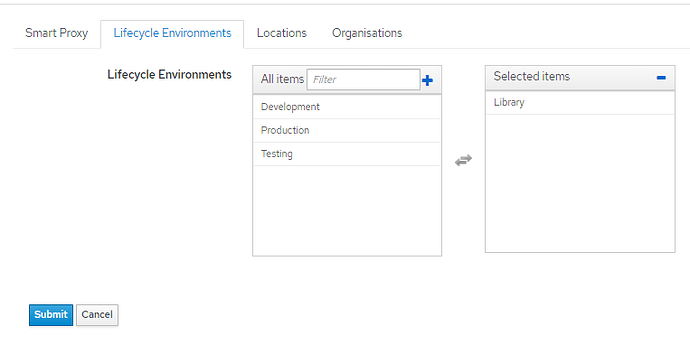

Also want to add that the setup for all proxies is to sync the “Lifecycle Environments” “Library” only.

Seeing this as well. The question is does the updated Pulp 3.39, that is in Foreman 3.9/Katello 4.11 fix this issue?

Unsure if this issue is really known at this point. Not really have the right skillset to debug this on my own. I can deliver any logs or perform tests. I do not have any “test” system either to validate if the new version would fix anything. Any release notes for Pulp 3.39 saying anything related?

If pulpcore 3.39 did fix this issue, then that would be a “happy accident”. AFAIK the Pulp team is not currently aware of any performance regressions in the versions that ship with Katello 4.10 (other than having been told about the existence of this thread).

The last big performance issue from the other thread should be fixed in the Pulp versions that ship with Katello 4.10. As we learned from that issue, performance issues are sometimes dependent on the postgresql version used, and the Pulp team generally tests with a newer postgresql version than the one shipped on El8 (and by extension what is used by Foreman/Katello). As a result it is entirely possible that you have hit a performance issue that the Pulp team is not aware of from their own testing.

If you did want to dig through the Pulp release notes, see: Changelog — Pulp Project 3.44.0.dev documentation

Note that “Resolved a sync-time performance regression. #4591” is the fix that is already included with Katello 4.10.

Syncing one repository really shouldn’t be causing all repositories on the system to be refreshed, so this might be something looked into in Katello rather than Pulp.

If you sync a repo, for example, Katello is suppose to only interact with that one repo rather than all of them.

I noticed this in your Dynflow output:

environment_id:

content_view_id:

repository_id:

What sort of smart proxy sync was this? Was it a normal smart proxy sync, or was it triggered by a content view publish or a sync?

Also, I’ve created a bug so we don’t lose this: Bug #36990: Investigation: RefreshRepos from a repository sync causes updates of all repositories on smart proxy - Katello - Foreman

Basically it is triggered by a repo sync. The way we have it setup is.

- Devs are pushing the rpm to a local repository.

- The repository see a new package arrive and using the Foreman API to trigger a repo sync of the local repo.

- Foreman/Katello start the repo sync and finds a the new rpm in our local repo and then triggers a “Sync Repository on Smart Proxy(ies)” task that sync the new meta data to all proxies since the Library is configured in the Lifecycle Environment configurations for all proxies.

The same flow is triggered also when we release new docker images in our local registry.

Also note that the API call to sync the local repo initially failed after upgrading to 3.8 and only after adding the resource “Smart proxy: manage_capsule_content” to the role it again started to work. Seen in this thread → No sync to Proxies after docker repo updated - #2 by lfu

That issue could be unrelated to this one though.