Hey,

I am on Satellite 6.10 and I have found that what previously worked fine on a host with 12 GB of RAM no longer does, and I am confident that setups with 16 GB of RAM might have similar problems. I just noticed that the minimum memory requirement is now 20 GB of RAM.

Pulp3 has the following services:

- pulpcore-worker - spawns a single process, but the installer sets up N instances of this systemd unit according to the available cores (4 in my case)

- pulpcore-content - spawns 9 worker processes (hardcoded in systemd unit)

- pulpcore-api - spawns 5 worker processes (hardcoded)

Now, imagine a system with 4 cores and 16 GB RAM, not an atypical VM to run Katello. We have 14 gunicorn processes (9 pulpcore-content + 5 pulpcore-api) with about 100 MB RES each, which is 1.4 GB right after a restart, with no work done yet. The pulpcore-worker processes only take 30 MB of memory each when idle, but once they start syncing repositories, that quickly goes up to 2+ GB per process in my case. For four cores that's 8 GB. Unfortunately, pulpcore-api also grows once content is being synced, up to 1 GB per process. This is likely caused by Katello hammering the API endpoint to get some data.
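For anyone who wants to collect comparable numbers, here is a minimal sketch of how RES could be summed per service by reading `/proc` on Linux. The command-line patterns in the usage part are assumptions about how the Pulp processes show up on a given install, so check them against `ps` output first.

```python
import os
from collections import defaultdict


def parse_vmrss_kb(status_text):
    """Return VmRSS in kB from the text of /proc/<pid>/status, or 0 if absent."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            return int(line.split()[1])
    return 0  # kernel threads have no VmRSS line


def rss_by_service(patterns, proc_root="/proc"):
    """Sum resident memory (kB) of processes whose command line contains a pattern."""
    totals = defaultdict(int)
    if not os.path.isdir(proc_root):
        return {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as f:
                cmdline = f.read().replace(b"\0", b" ").decode(errors="replace")
            with open(os.path.join(proc_root, entry, "status")) as f:
                rss_kb = parse_vmrss_kb(f.read())
        except OSError:
            continue  # process exited or is not readable
        for pattern in patterns:
            if pattern in cmdline:
                totals[pattern] += rss_kb
                break
    return dict(totals)


if __name__ == "__main__":
    # Assumed patterns; adjust to what `ps aux` actually shows on your host.
    assumed_patterns = ["pulpcore-worker", "pulpcore.content", "pulpcore.app.wsgi"]
    for name, kb in rss_by_service(assumed_patterns).items():
        print(f"{name}: {kb / 1024 / 1024:.2f} GB")
```

Running it before and after a sync should show roughly the kind of growth described above, if the patterns match.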

Add Candlepin on top of that, which is 1 GB RES, and Puppet master, which is also 1 GB RES. Then we have the RoR processes, typically 500 MB per core, so 2 GB. And dynflow / sidekiq at 500 MB each, so another 2 GB.

Roughly speaking that is: 2 + 2 + 1 + 1 + 1.5 + 8 = 15.5 GB of memory just when you sync some data and the pulpcore-workers kick in. It gets worse, because pulpcore-content processes can go up to 1 GB each after a simple sync of a single RHEL repo, and there are 9 of them, so this can go wild rather quickly. And we are talking about a clean installation with just a few repositories synced. I haven't even tested things under client load (rhsm, content fetching).
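The same back-of-the-envelope budget as a small Python sketch, using only the figures quoted in this post. The dynflow / sidekiq process count of 4 is inferred from "500 MB each, so 2 GB", and the "everything peaks at once" total is a hypothetical upper bound, not something I measured.

```python
CORES = 4

# Resident memory in GB, as observed on a freshly installed 4-core host.
budget_gb = {
    "RoR processes (0.5 GB per core)": 0.5 * CORES,
    "dynflow / sidekiq (0.5 GB x 4, count inferred)": 0.5 * 4,
    "Candlepin": 1.0,
    "Puppet master": 1.0,
    "gunicorn idle (14 x ~100 MB, rounded up)": 1.5,
    "pulpcore-worker while syncing (2 GB per core)": 2.0 * CORES,
}
total_gb = sum(budget_gb.values())

# Hypothetical upper bound: every gunicorn process grows to 1 GB at once
# (9 pulpcore-content + 5 pulpcore-api) instead of staying at ~100 MB.
gunicorn_peak_gb = (9 + 5) * 1.0
worst_case_gb = total_gb - budget_gb["gunicorn idle (14 x ~100 MB, rounded up)"] + gunicorn_peak_gb

for name, gb in budget_gb.items():
    print(f"{gb:5.1f} GB  {name}")
print(f"{total_gb:5.1f} GB  total during a sync")
print(f"{worst_case_gb:5.1f} GB  if all gunicorn processes peaked together")
```

Even the first total leaves almost nothing on a 16 GB host for PostgreSQL, the page cache or the OS itself.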

What I am trying to say is that I feel the defaults we ship 6.10 with, and I am extrapolating this to Katello, are way too heavy on memory.

One additional observation: once the pulpcore-workers are done with a sync action, they just sit there holding on to all the allocated memory. Gunicorn appears to support restarting workers via SIGHUP, so there is some infrastructure for worker recycling, but I am not sure if this is used in Pulp in any way.
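For the gunicorn-managed services (pulpcore-api and pulpcore-content, not the pulpcore-worker units), gunicorn also has a `max_requests` setting that recycles a worker after it has served a given number of requests. A minimal sketch of a gunicorn config file follows. The values are arbitrary examples, and whether the installer-generated systemd units would pick up such a file, or whether the worker class used by pulpcore-content honours it, is an assumption I have not verified.

```python
# gunicorn.conf.py (hypothetical location; the shipped units may not load it)

# Restart a worker after it has handled this many requests, which releases
# whatever memory it has accumulated. Example value only.
max_requests = 1000

# Random spread added to max_requests so that workers do not all restart
# at the same moment. Example value only.
max_requests_jitter = 50
```

This would only cap growth in the API and content processes; it does nothing for the pulpcore-workers holding memory after a sync.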

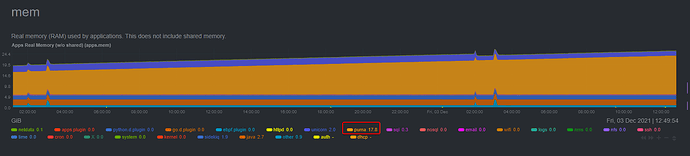

Edit: For the record, we are also seeing memory spikes on the Puma processes, and they go wild (up to 15 GB per process): Katello 4.2.x repository synchronisation issues