Problem:

We are using the Foreman Proxy Redfish integration to access the BMC interfaces of Dell servers, because the “normal” IPMI protocol has known vulnerabilities.

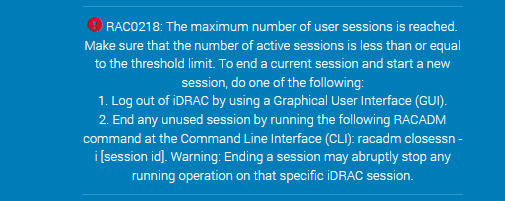

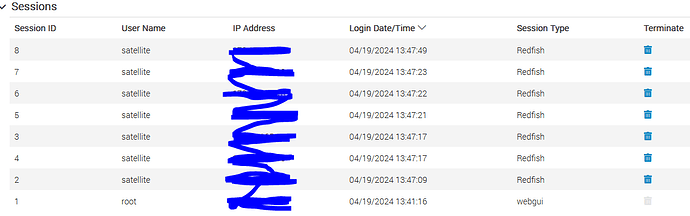

The result is that Foreman Proxy can no longer connect to the BMC, because Redfish sessions are opened but not properly closed afterwards.

This also prevents administrators from accessing the BMC interface, as all available sessions are in use by the foreman BMC user (via Redfish).

Expected outcome:

Redfish works, and every session it opens is closed again afterwards.

Foreman and Proxy versions:

Foreman 2.3 / 3.1

Foreman Proxy 2.3 / 3.1

→ Yes, the Foreman version is pretty old, but the code did not change between 2.3 and the current release.

So I assume the problem still exists in the latest Foreman(-proxy) release.

Foreman and Proxy plugin versions:

n/a

Distribution and version:

RHEL 7.9

Other relevant data:

The following output is shown when using racadm (CLI admin tool for iDRAC):

[root@a1f755a7a94d srvadmin]# ./bin/idracadm7 -r <bmc ip> -u foreman -p $PASS getssninfo

Continuing execution. Use -S option for racadm to stop execution on certificate-related errors.

SSNID Type User IP Address Login Date/Time

---------------------------------------------------------------------------

47 REDFISH foreman <foremansp IP> 09/23/2022 13:53:50

48 REDFISH foreman <foremansp IP> 09/23/2022 13:53:54

49 REDFISH foreman <foremansp IP> 09/23/2022 13:53:56

50 REDFISH foreman <foremansp IP> 09/23/2022 13:53:58

51 REDFISH foreman <foremansp IP> 09/23/2022 13:54:00

52 REDFISH foreman <foremansp IP> 09/23/2022 13:54:01

56 REDFISH foreman <foremansp IP> 09/23/2022 13:55:31

57 REDFISH foreman <foremansp IP> 09/23/2022 13:55:32

62 RACADM foreman <foremansp IP> 09/23/2022 14:07:57

You can see that the first batch of sessions (47-52) was opened within a short time window, and the second batch (56 and 57) somewhat later. Both times, the host was being viewed in Foreman itself (which retrieves the power state and so on).
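To keep an eye on this while reproducing the issue, the session list can be tallied per type and user. The following is only a rough sketch: it assumes the `getssninfo` output has the column layout shown above, and the helper name `count_sessions` and the shortened sample text are made up for illustration.

```ruby
# Sample rows in the same shape as the getssninfo output above
# (shortened; the IP placeholder is kept as in the report).
OUTPUT = <<~TEXT
  SSNID Type User IP Address Login Date/Time
  ---------------------------------------------------------------------------
  47 REDFISH foreman <foremansp IP> 09/23/2022 13:53:50
  48 REDFISH foreman <foremansp IP> 09/23/2022 13:53:54
  62 RACADM foreman <foremansp IP> 09/23/2022 14:07:57
TEXT

# Hypothetical helper: count sessions per [type, user].
# Only rows that start with a numeric SSNID are treated as sessions,
# so the header and the separator line are skipped.
def count_sessions(text)
  counts = Hash.new(0)
  text.each_line do |line|
    match = line.match(/\A\s*(\d+)\s+(\S+)\s+(\S+)\s/)
    next unless match

    counts[[match[2], match[3]]] += 1
  end
  counts
end

count_sessions(OUTPUT).each do |(type, user), n|
  puts "#{type} #{user}: #{n}"
end
```

Running this against the full output before and after opening a host page in Foreman should show whether the REDFISH count for the foreman user keeps growing.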

Once the session limit is reached, the Foreman Smart Proxy also logs an error:

2022-09-23T13:55:44 6e91b9df [W] Error details for Invalid credentials: <RedfishClient::Connector::AuthError>: Invalid credentials

/opt/theforeman/tfm/root/usr/share/gems/gems/redfish_client-0.5.2/lib/redfish_client/connector.rb:249:in `raise_invalid_auth_error'

/opt/theforeman/tfm/root/usr/share/gems/gems/redfish_client-0.5.2/lib/redfish_client/connector.rb:220:in `session_login'

/opt/theforeman/tfm/root/usr/share/gems/gems/redfish_client-0.5.2/lib/redfish_client/connector.rb:171:in `login'

/opt/theforeman/tfm/root/usr/share/gems/gems/redfish_client-0.5.2/lib/redfish_client/root.rb:56:in `login'

/usr/share/foreman-proxy/modules/bmc/redfish.rb:20:in `connect'

/usr/share/foreman-proxy/modules/bmc/base.rb:6:in `initialize'

/usr/share/foreman-proxy/modules/bmc/redfish.rb:14:in `initialize'

/usr/share/foreman-proxy/modules/bmc/bmc_api.rb:432:in `new'

/usr/share/foreman-proxy/modules/bmc/bmc_api.rb:432:in `bmc_setup'

/usr/share/foreman-proxy/modules/bmc/bmc_api.rb:66:in `block in <class:Api>'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1635:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1635:in `block in compile!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:992:in `block (3 levels) in route!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1011:in `route_eval'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:992:in `block (2 levels) in route!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1040:in `block in process_route'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1038:in `catch'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1038:in `process_route'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:990:in `block in route!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:989:in `each'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:989:in `route!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1097:in `block in dispatch!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1076:in `block in invoke'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1076:in `catch'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1076:in `invoke'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1094:in `dispatch!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:924:in `block in call!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1076:in `block in invoke'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1076:in `catch'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1076:in `invoke'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:924:in `call!'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:913:in `call'

/usr/share/foreman-proxy/lib/proxy/log.rb:105:in `call'

/usr/share/foreman-proxy/lib/proxy/request_id_middleware.rb:11:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-protection-2.0.3/lib/rack/protection/xss_header.rb:18:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-protection-2.0.3/lib/rack/protection/path_traversal.rb:16:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-protection-2.0.3/lib/rack/protection/json_csrf.rb:26:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-protection-2.0.3/lib/rack/protection/base.rb:50:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-protection-2.0.3/lib/rack/protection/base.rb:50:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-protection-2.0.3/lib/rack/protection/frame_options.rb:31:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-2.2.3/lib/rack/null_logger.rb:11:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-2.2.3/lib/rack/head.rb:12:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/show_exceptions.rb:22:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:194:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1958:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1502:in `block in call'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1729:in `synchronize'

/opt/theforeman/tfm/root/usr/share/gems/gems/sinatra-2.0.3/lib/sinatra/base.rb:1502:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-2.2.3/lib/rack/urlmap.rb:74:in `block in call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-2.2.3/lib/rack/urlmap.rb:58:in `each'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-2.2.3/lib/rack/urlmap.rb:58:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-2.2.3/lib/rack/builder.rb:244:in `call'

/opt/theforeman/tfm/root/usr/share/gems/gems/rack-2.2.3/lib/rack/handler/webrick.rb:95:in `service'

/opt/rh/rh-ruby25/root/usr/share/ruby/webrick/httpserver.rb:140:in `service'

/opt/rh/rh-ruby25/root/usr/share/ruby/webrick/httpserver.rb:96:in `run'

/opt/rh/rh-ruby25/root/usr/share/ruby/webrick/server.rb:307:in `block in start_thread'

/opt/theforeman/tfm/root/usr/share/gems/gems/logging-2.3.0/lib/logging/diagnostic_context.rb:474:in `block in create_with_logging_context'
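The trace shows that a session is created in `connect` (`redfish.rb:20`) on every BMC request, and nothing in it suggests a matching logout. One possible direction for a fix is to always close the session when the request is done. The sketch below only illustrates that pattern and is not the actual Foreman Proxy code: `FakeBmc` and `with_session` are hypothetical stand-ins, and the limit of 8 sessions is an assumption based on the eight REDFISH sessions listed above. The redfish_client gem appears to offer a `logout` counterpart to the `login` call seen in the trace, but that should be verified against the installed version.

```ruby
# FakeBmc is a hypothetical stand-in for a BMC with a session limit.
# It is not part of Foreman Proxy or redfish_client.
class FakeBmc
  class AuthError < StandardError; end

  attr_reader :open_sessions

  def initialize(max_sessions)
    @max_sessions = max_sessions
    @open_sessions = 0
  end

  def login
    # Mirrors the report: once every slot is taken, login is rejected
    # with a misleading "Invalid credentials" error.
    raise AuthError, "Invalid credentials" if @open_sessions >= @max_sessions

    @open_sessions += 1
  end

  def logout
    @open_sessions -= 1 if @open_sessions > 0
  end
end

# Hypothetical helper: run a block inside a session and always log out,
# even if the block raises.
def with_session(bmc)
  bmc.login
  begin
    yield bmc
  ensure
    bmc.logout
  end
end

bmc = FakeBmc.new(8) # assumed session limit
20.times { with_session(bmc) { |_b| :power_status } }
puts bmc.open_sessions
```

With the `ensure` block in place, 20 consecutive requests leave no session behind. Without it, the ninth `login` in this model would fail in the same way as the log above.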