If I’m allowed, I also had something in mind for how the whole Ansible part could work in the future.

It’s pretty similar to what ekohl has already written, only with a few key differences.

The biggest problem with Ansible environments right now is updating them.

Because of that, the whole project (and also our company) has been moving more and more towards using only Execution Environments (EEs), because they can be built somewhat reliably.

Then you can either use these EEs directly, or use the container image as a base, i.e. as an init container. Using them directly is of course faster.

The whole execution part can then run either in AWX (AAP) or in ansible-navigator (which is basically a pretty advanced wrapper around podman/docker, plus several ansible-* commands running inside the container, plus a TUI it can be proud of).

There may be even more options, but these two are at least the ones I’m aware of.

And if you want to run playbooks instead of directly attaching roles (e.g. Application-centric deployment does that, if I remember correctly), the code needs to be cloned somewhere first. That somewhere could be outside the container, to keep it cached, or inside it.
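To illustrate the “outside the container, to keep it cached” variant, here is a minimal Python sketch. It assumes a hypothetical layout where every repository URL plus ref gets its own directory on the Smart Proxy; the function names and the base path are made up for illustration, and the checkout would then presumably be mounted into the EE instead of being cloned inside it.

```python
import hashlib
from pathlib import Path


def clone_cache_dir(base: Path, repo_url: str, ref: str) -> Path:
    """Return a stable cache directory for one repository URL and ref (hypothetical layout)."""
    # Hash URL and ref together so different branches/tags get separate checkouts
    key = hashlib.sha256(f"{repo_url}@{ref}".encode()).hexdigest()[:16]
    return base / key


def needs_clone(checkout: Path) -> bool:
    """A cached checkout is reused as long as its .git directory exists."""
    return not (checkout / ".git").is_dir()
```

On a later run, an existing directory would only need a fetch/checkout instead of a full clone, which is where the speedup over cloning inside a throwaway container would come from.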

If you are using roles (roles in collections), you don’t really need them cached; they just need to be part of the EE, either already baked in or added during the init process.

(I guess this little part was the actual topic…)

When everything around it already takes care of the environment, cloning really comes down to just a <source-control> clone. Here we shouldn’t forget the different ways to clone: without authentication, with Basic authentication, and with SSH authentication (and whether these parameters are controlled by the clone itself or by the environment).
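A rough Python sketch of the three clone variants, assuming Git as the source control. The split into command-line arguments vs. environment variables mirrors the “controlled by the clone or by the environment” question. `CloneAuth` and the mode names are hypothetical; the Git options and variables used (`--depth`, `--branch`, `GIT_TERMINAL_PROMPT`, `GIT_SSH_COMMAND`, `GIT_CONFIG_COUNT`/`GIT_CONFIG_KEY_0`/`GIT_CONFIG_VALUE_0` with `http.extraHeader`) are standard Git, the last three requiring Git 2.31 or newer.

```python
import base64
import os
import subprocess
from dataclasses import dataclass
from typing import Optional


@dataclass
class CloneAuth:
    mode: str  # hypothetical mode names: "none", "basic" or "ssh"
    username: Optional[str] = None
    password: Optional[str] = None
    ssh_key_path: Optional[str] = None


def build_git_clone(url: str, dest: str, ref: str, auth: CloneAuth) -> tuple[list[str], dict[str, str]]:
    """Return (argv, extra_env) for a shallow git clone; nothing is executed here."""
    # Fail instead of hanging on an interactive credential prompt
    env = {"GIT_TERMINAL_PROMPT": "0"}
    if auth.mode == "basic":
        token = base64.b64encode(f"{auth.username}:{auth.password}".encode()).decode()
        # Pass the header via GIT_CONFIG_* (Git >= 2.31), so the credentials are
        # neither embedded in the URL nor part of the command line
        env.update({
            "GIT_CONFIG_COUNT": "1",
            "GIT_CONFIG_KEY_0": "http.extraHeader",
            "GIT_CONFIG_VALUE_0": f"Authorization: Basic {token}",
        })
    elif auth.mode == "ssh":
        env["GIT_SSH_COMMAND"] = f"ssh -i {auth.ssh_key_path} -o IdentitiesOnly=yes"
    elif auth.mode != "none":
        raise ValueError(f"unknown auth mode: {auth.mode}")
    argv = ["git", "clone", "--depth", "1", "--branch", ref, url, dest]
    return argv, env


def run_clone(argv: list[str], extra_env: dict[str, str]) -> None:
    # Merge with the current environment so PATH, HOME etc. are still available
    subprocess.run(argv, env={**os.environ, **extra_env}, check=True)
```

Keeping the clone command identical for all three modes and moving the authentication into the environment would make it easy for the surrounding environment (Smart Proxy, REX, container) to own the credentials.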

This is where execution-environment.yml and requirements.yml (and maybe even a bindep.txt for system packages and a requirements.txt for pip) come into play; these would be necessary for the init-process path.
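A small Python sketch of how the files found in a cloned repository could be mapped to init steps. The decision logic is my assumption: if an `execution-environment.yml` exists, `ansible-builder build` handles the Galaxy, Python and system dependencies it references in one go; otherwise each file is handled individually in the init container. The default image tag is hypothetical, and `bindep -b` only lists missing system packages, since installing them is distribution-specific.

```python
def plan_init_steps(files: set[str], ee_tag: str = "custom-ee:latest") -> list[list[str]]:
    """Map dependency files present in a cloned repo to init commands (sketch only)."""
    if "execution-environment.yml" in files:
        # ansible-builder picks up requirements.yml / requirements.txt / bindep.txt
        # itself when the EE definition references them, so one build is enough
        return [["ansible-builder", "build", "--file", "execution-environment.yml", "--tag", ee_tag]]
    steps = []
    if "bindep.txt" in files:
        # Only reports missing system packages; the actual install is distro-specific
        steps.append(["bindep", "-b"])
    if "requirements.txt" in files:
        steps.append(["python3", "-m", "pip", "install", "-r", "requirements.txt"])
    if "requirements.yml" in files:
        steps.append(["ansible-galaxy", "collection", "install", "-r", "requirements.yml"])
    return steps
```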

If these files live in the same repository as the rest of the execution content, that repository has to be cloned twice (if not always twice anyway, because Foreman needs to know the roles and variables…)

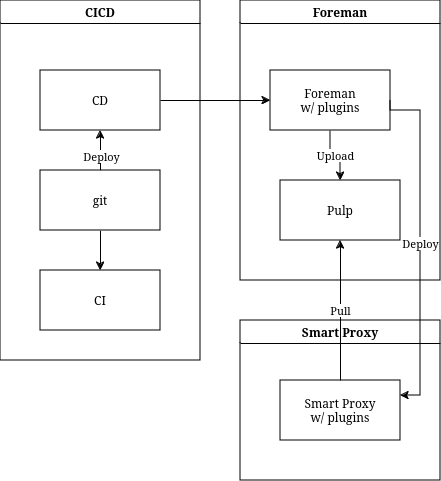

And then you can get the Execution Environment image either from the Smart Proxies or from an external Container Registry.

Finally, it has to run somewhere.

Personally, I would prefer doing all of that via AWX (AAP), because then resource management is handled by AWX: the execution could be in the same environment, in a different one, or on a remote agent connected to AWX. But then this whole thing needs to be able to talk to the AWX API.

The other two options are having Podman installed on the Master/Smart Proxies and running it there, or letting REX talk to a different host that acts as an execution node.
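The three execution options could look roughly like this in Python. It only describes how a run would be started and executes nothing. The `ansible-navigator run` options (`--execution-environment-image`, `--container-engine`, `--mode stdout`) and the AWX endpoint `POST /api/v2/job_templates/<id>/launch/` exist; the backend names, the plain-SSH stand-in for REX, and sending only `limit` (which assumes the job template prompts for it on launch) are assumptions.

```python
import json
import urllib.request
from typing import Optional, Union


def navigator_argv(playbook: str, ee_image: str) -> list[str]:
    # Run the playbook inside the EE with podman and plain output instead of the TUI
    return ["ansible-navigator", "run", playbook,
            "--execution-environment-image", ee_image,
            "--container-engine", "podman",
            "--mode", "stdout"]


def plan_execution(backend: str, playbook: str, ee_image: str, *,
                   exec_host: Optional[str] = None,
                   awx_url: Optional[str] = None,
                   template_id: Optional[int] = None,
                   token: Optional[str] = None,
                   limit: Optional[str] = None) -> Union[list[str], urllib.request.Request]:
    """Describe how one run would be started; nothing is executed here."""
    if backend == "smart_proxy":
        # Podman installed on the Master/Smart Proxy itself
        return navigator_argv(playbook, ee_image)
    if backend == "rex":
        # Hypothetical: REX reaches a dedicated execution node over SSH
        return ["ssh", exec_host, *navigator_argv(playbook, ee_image)]
    if backend == "awx":
        # Playbook and EE are configured on the AWX job template; only the limit is
        # sent, assuming the template has "prompt on launch" enabled for it
        body = json.dumps({"limit": limit}).encode()
        return urllib.request.Request(
            f"{awx_url}/api/v2/job_templates/{template_id}/launch/",
            data=body,
            headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
            method="POST",
        )
    raise ValueError(f"unknown backend: {backend}")
```

The AWX variant returns a request object instead of a command, which reflects the difference described above: with AWX, Foreman only hands over the job and AWX decides where it actually runs.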

Which tool is actually used could, or maybe even should, work similarly to the normal prioritization schema, just: Global Settings → Smart Proxy → Organization → Location → Domain → Host Group → FQDN (with the priority configurable if necessary), to keep the configuration overhead small.
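The lookup itself is simple: the most specific level that defines a value wins. A Python sketch, using the levels in the order listed above; the level keys are hypothetical shorthand, not actual Foreman setting names, and passing a different `order` covers the “priority configurable” part.

```python
from typing import Mapping, Optional, Sequence

# Most generic first, most specific last, as in the schema above
DEFAULT_ORDER = ("global", "smart_proxy", "organization", "location",
                 "domain", "hostgroup", "fqdn")


def resolve_setting(values: Mapping[str, str],
                    order: Sequence[str] = DEFAULT_ORDER) -> Optional[str]:
    """Return the value from the most specific level that defines one."""
    for level in reversed(order):
        if level in values:
            return values[level]
    return None
```

For example, with `{"global": "awx", "hostgroup": "smart_proxy"}` a host in that host group would run via Podman on the Smart Proxy, while everything else falls back to AWX.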

So this basically gives you multiple options that can be combined into a multi-dimensional matrix of:

- Optionally, cloning

- A predefined EE, or an EE with content added in the init process

- Optionally, adding requirements for the init process

- EE image source: Katello-managed or external

- Where the EE runs: directly on the Master/Smart Proxy, via REX on another defined host, or via commands sent to the AWX API
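To get a feeling for the size of that matrix, here is a Python sketch enumerating it with `itertools.product`. The dimension labels are shorthand for the list above, and the pruning rule (extra init requirements only make sense when content is added in the init process) is my assumption.

```python
from itertools import product

# Dimensions from the list above; labels are shorthand, not Foreman setting names
DIMENSIONS = {
    "clone": (False, True),
    "ee": ("predefined", "init"),
    "init_requirements": (False, True),
    "image_source": ("katello", "external"),
    "runner": ("smart_proxy", "rex", "awx"),
}


def combinations():
    keys = list(DIMENSIONS)
    for values in product(*DIMENSIONS.values()):
        combo = dict(zip(keys, values))
        # Assumed rule: init requirements only apply when the EE gets content at init
        if combo["init_requirements"] and combo["ee"] == "predefined":
            continue
        yield combo
```

That leaves 36 of the 48 raw combinations, which shows why a generic solution is hard to cover completely.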

The closest existing thing would be `ansible-galaxy collection install ...`, but anything more sophisticated can only be found built directly into ansible-builder or AWX itself (as far as I’m aware).

I totally agree that making it generic for all the different solutions is hard.

Speaking from our current usage: we use Ansible and Salt.

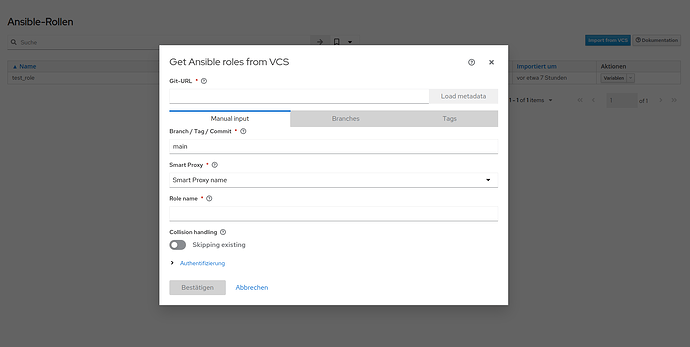

Ansible content gets pushed as a Galaxy artifact, which is then installed semi-automatically via the aforementioned `ansible-galaxy collection install` command, after which an import is triggered in the UI to pick up the variables.

Salt, on the other hand, we push with a deployment agent to an NFS share that is mounted directly on the Master (and then we trigger the import manually in the UI).

For us, Ansible needs its dependencies installed; Salt does not.

(I would also have done more than just write about it by now, but my coding skills aren’t at the needed level yet.)


(Sorry, that got kind of long.)