Problem:

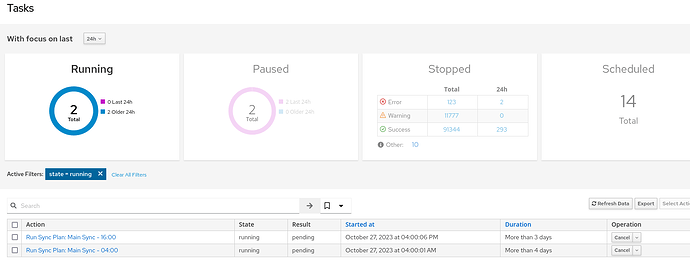

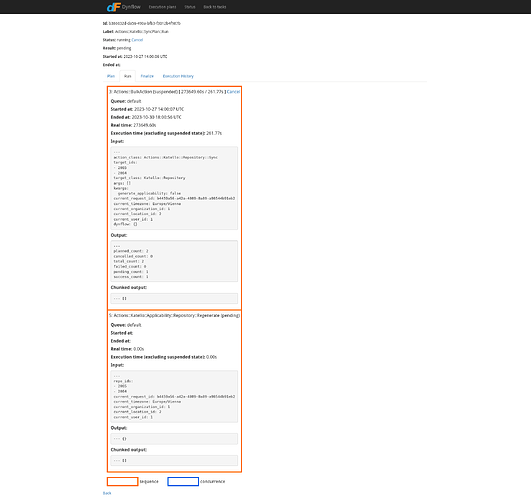

I noticed this morning that, since the update, sync plans with a failing repo sync get stuck.

In this case it’s the Zabbix repos, which sometimes just block.

If I try to cancel them, the action tells me it cancelled, but nothing actually happens. (Cancelling in the Dynflow console also doesn’t do much.)

Manually syncing the repos works without any issues, but any other recurring task of these sync plans of course fails now.

I force-cancelled one of them for now, but I’m not sure if this was a good idea.

Thanks, lumarel

Foreman and Proxy versions:

Foreman 3.8

Katello 4.10

Foreman and Proxy plugin versions:

VMware

Tasks

Ansible

Bootdisk

Puppet

Snapshot Management

Statistics

Templates

Distribution and version:

Rocky Linux 8.8

foreman-tail output:

From the cancel attempt:

==> /var/log/httpd/foreman-ssl_access_ssl.log <==

10.27.10.200 - - [30/Oct/2023:19:13:11 +0100] "POST /foreman_tasks/tasks/74c3fdb9-fefa-4a8b-b529-44a799d2f248/cancel HTTP/2.0" 200 19 "https://foreman.fritz.box/foreman_tasks/tasks?state=running&page=1" "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"

10.27.10.200 - - [30/Oct/2023:19:13:12 +0100] "GET /foreman_tasks/api/tasks?search=%28state%3Drunning%29&page=1&include_permissions=true HTTP/2.0" 200 1876 "https://foreman.fritz.box/foreman_tasks/tasks?state=running&page=1" "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"

10.27.10.200 - - [30/Oct/2023:19:13:12 +0100] "GET /foreman_tasks/tasks/summary/24 HTTP/2.0" 304 - "https://foreman.fritz.box/foreman_tasks/tasks?state=running&page=1" "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"

==> /var/log/foreman/production.log <==

2023-10-30T19:13:11 [I|app|32b4e21b] Started POST "/foreman_tasks/tasks/74c3fdb9-fefa-4a8b-b529-44a799d2f248/cancel" for 10.27.10.200 at 2023-10-30 19:13:11 +0100

2023-10-30T19:13:11 [I|app|32b4e21b] Processing by ForemanTasks::TasksController#cancel as JSON

2023-10-30T19:13:11 [I|app|32b4e21b] Parameters: {"id"=>"74c3fdb9-fefa-4a8b-b529-44a799d2f248", "task"=>{}}

2023-10-30T19:13:11 [I|app|32b4e21b] Completed 200 OK in 10ms (Views: 0.2ms | ActiveRecord: 1.3ms | Allocations: 4803)

2023-10-30T19:13:12 [I|app|4b4271f0] Started GET "/foreman_tasks/api/tasks?search=%28state%3Drunning%29&page=1&include_permissions=true" for 10.27.10.200 at 2023-10-30 19:13:12 +0100

2023-10-30T19:13:12 [I|app|77cb9f7a] Started GET "/foreman_tasks/tasks/summary/24" for 10.27.10.200 at 2023-10-30 19:13:12 +0100

2023-10-30T19:13:12 [I|app|4b4271f0] Processing by ForemanTasks::Api::TasksController#index as JSON

2023-10-30T19:13:12 [I|app|4b4271f0] Parameters: {"search"=>"(state=running)", "page"=>"1", "include_permissions"=>"true"}

2023-10-30T19:13:12 [I|app|77cb9f7a] Processing by ForemanTasks::TasksController#summary as JSON

2023-10-30T19:13:12 [I|app|77cb9f7a] Parameters: {"recent_timeframe"=>"24"}

2023-10-30T19:13:12 [I|app|4b4271f0] Rendered /usr/share/gems/gems/foreman-tasks-8.2.0/app/views/foreman_tasks/api/tasks/index.json.rabl within api/v2/layouts/index_layout (Duration: 24.0ms | Allocations: 17924)

2023-10-30T19:13:12 [I|app|4b4271f0] Rendered layout api/v2/layouts/index_layout.json.erb (Duration: 41.0ms | Allocations: 24056)

2023-10-30T19:13:12 [I|app|4b4271f0] Completed 200 OK in 48ms (Views: 27.2ms | ActiveRecord: 15.1ms | Allocations: 26923)

2023-10-30T19:13:12 [I|app|77cb9f7a] Completed 200 OK in 434ms (Views: 0.3ms | ActiveRecord: 426.0ms | Allocations: 3541)

==> /var/log/httpd/foreman-ssl_access_ssl.log <==

10.27.10.200 - - [30/Oct/2023:19:13:12 +0100] "GET /notification_recipients HTTP/2.0" 304 - "https://foreman.fritz.box/foreman_tasks/tasks?state=running&page=1" "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"

==> /var/log/foreman/production.log <==

2023-10-30T19:13:12 [I|app|1817a609] Started GET "/notification_recipients" for 10.27.10.200 at 2023-10-30 19:13:12 +0100

2023-10-30T19:13:12 [I|app|1817a609] Processing by NotificationRecipientsController#index as JSON

2023-10-30T19:13:13 [I|app|1817a609] Completed 200 OK in 7ms (Views: 0.1ms | ActiveRecord: 1.0ms | Allocations: 1966)
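
To check from a script whether a cancel actually took effect, the running-task search that the web UI issues in the log above could be repeated. This is only a minimal sketch: the endpoint path and search string are copied from the log, while HTTP basic auth, the top-level `results` key in the response, and the helper names are assumptions that may not match this setup.

```python
import base64
import json
import urllib.parse
import urllib.request

BASE_URL = "https://foreman.fritz.box"  # hostname as it appears in the log


def running_tasks_url(base_url, page=1):
    """Build the same running-task search URL the web UI requested in the log."""
    query = urllib.parse.urlencode({"search": "(state=running)", "page": page})
    return f"{base_url}/foreman_tasks/api/tasks?{query}"


def task_ids(payload):
    """Collect task ids from a decoded response.

    Assumption: the response carries a top-level "results" list.
    """
    return [task["id"] for task in payload.get("results", [])]


def fetch_running_task_ids(base_url, user, password):
    """Query the running tasks. Assumption: HTTP basic auth is accepted."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        running_tasks_url(base_url),
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return task_ids(json.load(response))
```

Calling `fetch_running_task_ids(BASE_URL, user, password)` before and after a cancel and comparing the two lists would show whether task `74c3fdb9-fefa-4a8b-b529-44a799d2f248` really left the running state.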