We're looking to support Highly Available Smart Proxies in a future release of Foreman and its plugins. We have come up with what we think is a good proposal, but would like your input, as users, before we go ahead with it.

Please share any questions or concerns below!

The Problem

Today, Hosts and Subnets are assigned one Smart Proxy per feature; for example, you select a Puppet Smart Proxy on a Host and a DHCP Smart Proxy on a Subnet. This means that when you create a host, Foreman communicates with exactly one Smart Proxy for each feature and host/subnet combination, so that Smart Proxy is a single point of failure for the feature.

The Scope

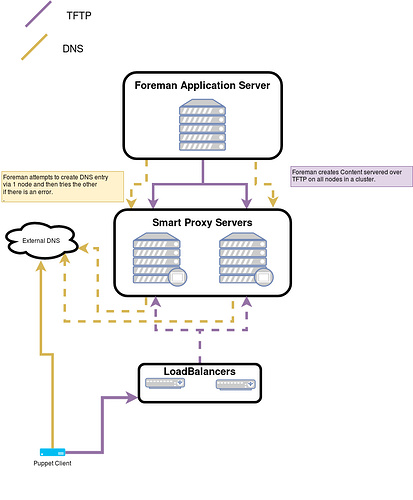

They say a picture is worth a thousand words…

The diagram shows just two features, but we would support more; they generally fall into two categories:

Yellow: Foreman must perform an action on one of the Smart Proxies. It tries one, and if that fails, it tries the next.

Purple: Foreman must perform an action on every Smart Proxy in a cluster.

Yellow would be features like DNS, Realm, Puppet CA, etc.

Purple would be features like Content (Katello), TFTP, etc.
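
To make the two categories concrete, here is a minimal Python sketch of the two dispatch strategies. The names `SmartProxy`, `ProxyError`, `run_on_one` and `run_on_all` are hypothetical and are not Foreman's API. The proxy URLs are placeholders. The sketch also assumes that a Purple action must succeed on every Smart Proxy, so the first failure aborts the whole operation.

```python
from dataclasses import dataclass
from typing import Callable, List, TypeVar

T = TypeVar("T")


class ProxyError(Exception):
    """Hypothetical error raised when a call to a Smart Proxy fails."""


@dataclass
class SmartProxy:
    name: str
    url: str


def run_on_one(proxies: List[SmartProxy], action: Callable[[SmartProxy], T]) -> T:
    """Yellow features (DNS, Realm, Puppet CA, ...): try each Smart Proxy in
    turn and return the first successful result."""
    errors = []
    for proxy in proxies:
        try:
            return action(proxy)
        except ProxyError as exc:
            errors.append(f"{proxy.name}: {exc}")  # remember why, then try the next
    raise ProxyError("all Smart Proxies failed: " + "; ".join(errors))


def run_on_all(proxies: List[SmartProxy], action: Callable[[SmartProxy], T]) -> List[T]:
    """Purple features (Content, TFTP, ...): run the action on every Smart
    Proxy. Assumption: any single failure propagates and fails the operation."""
    return [action(proxy) for proxy in proxies]


# Example with placeholder proxies: proxy1 is down, so DNS falls back to proxy2.
pool = [SmartProxy("proxy1", "http://proxy1.example.com:8443"),
        SmartProxy("proxy2", "http://proxy2.example.com:8443")]


def create_dns_record(proxy: SmartProxy) -> str:
    if proxy.name == "proxy1":
        raise ProxyError("connection refused")  # simulated outage
    return f"record created via {proxy.name}"


print(run_on_one(pool, create_dns_record))  # record created via proxy2
```

Keeping the two strategies as separate functions mirrors the Yellow/Purple split: the feature type decides which strategy applies, and the Pool only supplies the list of Smart Proxies.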

The Proposal

We would create a new Smart Proxy Pool object with two attributes: a Name (used for reference, like other objects within Foreman) and a Hostname (used for client communication). Smart Proxies would be assigned to Pools, and Pools would be assigned to Hosts and Subnets. So when you create a new Host or Subnet, you would select a Smart Proxy Pool for each feature instead of selecting a single Smart Proxy as you do today.

There would be no limit on the number of Pools a Smart Proxy can belong to.
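
A rough sketch of how the proposed objects might relate follows. It uses Python dataclasses purely for illustration. Every class, field and helper name here is an assumption, not Foreman's actual model. A Subnet would carry the same per-feature mapping as `Host`.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SmartProxy:
    name: str
    url: str  # how Foreman reaches the Smart Proxy API


@dataclass
class SmartProxyPool:
    name: str      # reference name, like other Foreman objects
    hostname: str  # what clients connect to
    proxies: List[SmartProxy] = field(default_factory=list)


@dataclass
class Host:
    name: str
    # One Pool per feature, replacing today's one-Smart-Proxy-per-feature field.
    feature_pools: Dict[str, SmartProxyPool] = field(default_factory=dict)


def pools_containing(proxy: SmartProxy, pools: List[SmartProxyPool]) -> List[str]:
    """Hypothetical helper: a Smart Proxy may belong to any number of Pools."""
    return [pool.name for pool in pools if proxy in pool.proxies]


proxy1 = SmartProxy("proxy1", "http://proxy.example.com:8443")
dns_pool = SmartProxyPool("dns", "proxy.example.com", [proxy1])
tftp_pool = SmartProxyPool("tftp", "proxy.example.com", [proxy1])
host = Host("web01.example.com", {"DNS": dns_pool, "TFTP": tftp_pool})

print(pools_containing(proxy1, [dns_pool, tftp_pool]))  # ['dns', 'tftp']
```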

The current use case still works: use a Smart Proxy Pool containing just one Smart Proxy, with the Smart Proxy URL set to http://proxy.example.com:8443 and the Smart Proxy Pool Hostname set to proxy.example.com. **

A new use case becomes possible where Foreman connects to the Smart Proxy via one interface (or hostname/URL) and clients connect via another. Use a Smart Proxy Pool containing just one Smart Proxy, with the Smart Proxy URL set to http://proxy.example.com:8443 and the Smart Proxy Pool Hostname set to client-proxy-name.example.com. You could also create a separate Smart Proxy Pool for each network (or interface) the Smart Proxy serves.

You can run your Smart Proxies active/active by using a Smart Proxy Pool with two (or possibly more) Smart Proxies and setting the Smart Proxy Pool Hostname to your load balancer.
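
These configurations differ only in which address each side uses. Foreman talks to the individual Smart Proxy URLs, while clients are given the Pool Hostname. The sketch below captures that split under stated assumptions. The helper names are hypothetical, and `proxy2.example.com` and `lb.example.com` are placeholder hosts that do not come from the proposal.

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import urlparse


@dataclass
class SmartProxyPool:
    name: str
    hostname: str          # handed to clients (e.g. for TFTP downloads)
    proxy_urls: List[str]  # used by Foreman itself to call each Smart Proxy


def foreman_targets(pool: SmartProxyPool) -> List[str]:
    """Hypothetical helper: hosts Foreman connects to, one per Smart Proxy."""
    return [urlparse(url).hostname for url in pool.proxy_urls]


def client_target(pool: SmartProxyPool) -> str:
    """Hypothetical helper: the single host a client is told to use."""
    return pool.hostname


# Today's setup: one proxy, same name for Foreman and clients.
classic = SmartProxyPool("proxy", "proxy.example.com",
                         ["http://proxy.example.com:8443"])
# Split interfaces: Foreman and clients reach the same proxy by different names.
split = SmartProxyPool("proxy-clients", "client-proxy-name.example.com",
                       ["http://proxy.example.com:8443"])
# Active/active: clients go through a (placeholder) load balancer.
active_active = SmartProxyPool("proxy-ha", "lb.example.com",
                               ["http://proxy.example.com:8443",
                                "http://proxy2.example.com:8443"])

print(foreman_targets(active_active), client_target(active_active))
```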

Some real-world examples:

- When a Smart Proxy Pool is selected for DNS and a Host is created:
  - With 1 Smart Proxy assigned: Foreman would attempt to create the DNS record using that Smart Proxy; host building would fail if that doesn't work.
  - With 2 Smart Proxies assigned: Foreman would attempt to create the DNS record using one of the Smart Proxies, and would try the other if that fails.
- When a Smart Proxy Pool is selected for TFTP and a Host is created:
  - With 1 Smart Proxy assigned: Foreman would copy the TFTP content to that Smart Proxy.
  - With 2 Smart Proxies assigned: Foreman would copy the TFTP content to both Smart Proxies. When the client boots, it would use the Smart Proxy Pool Hostname (which should be set to your load balancer) to fetch content via TFTP.
- When assigning 2 Smart Proxies to a Smart Proxy Pool with Katello's Content feature, we would verify that the Smart Proxies are in the same organizations, locations, and lifecycle environments.
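
The Katello check in the last example could look roughly like this. The sketch assumes that "the same" means identical sets of organizations, locations and lifecycle environments. `ContentProxy` and `content_pool_errors` are hypothetical names, and the organization and location values are placeholders.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ContentProxy:
    """Hypothetical view of a Smart Proxy with Katello's Content feature."""
    name: str
    organizations: FrozenSet[str]
    locations: FrozenSet[str]
    lifecycle_environments: FrozenSet[str]


CHECKED = ("organizations", "locations", "lifecycle_environments")


def content_pool_errors(proxies: List[ContentProxy]) -> List[str]:
    """Compare every proxy against the first one. An empty result means the
    proxies could share a Content Pool (assumes exact set equality)."""
    errors: List[str] = []
    if not proxies:
        return errors
    reference = proxies[0]
    for proxy in proxies[1:]:
        for attr in CHECKED:
            if getattr(proxy, attr) != getattr(reference, attr):
                errors.append(f"{proxy.name} differs from {reference.name} in {attr}")
    return errors


a = ContentProxy("proxy1", frozenset({"ACME"}), frozenset({"NYC"}),
                 frozenset({"Library", "Production"}))
b = ContentProxy("proxy2", frozenset({"ACME"}), frozenset({"NYC"}),
                 frozenset({"Library"}))
print(content_pool_errors([a, b]))  # ['proxy2 differs from proxy1 in lifecycle_environments']
```

Returning a list of mismatches, rather than a single boolean, would let the UI tell the user exactly why two Smart Proxies cannot share a Pool.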

As part of the upgrade, we would create a Smart Proxy Pool for every existing Smart Proxy and move the Host/Subnet feature associations to those Smart Proxy Pools (see ** above). There are, of course, many more features where this would be useful, especially those provided by plugins; the ones above are just examples.
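
The upgrade step could be sketched as follows. The sketch assumes each generated Pool takes its Smart Proxy's name and uses the host part of the Smart Proxy URL as the Pool Hostname, mirroring the ** case. The function name and data shapes are hypothetical; this is not the actual migration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple
from urllib.parse import urlparse


@dataclass
class SmartProxy:
    name: str
    url: str


@dataclass
class SmartProxyPool:
    name: str
    hostname: str
    proxies: List[SmartProxy] = field(default_factory=list)


def migrate_to_pools(
    proxies: List[SmartProxy],
    assignments: Dict[str, Dict[str, SmartProxy]],
) -> Tuple[List[SmartProxyPool], Dict[str, Dict[str, SmartProxyPool]]]:
    """assignments maps each Host/Subnet to {feature: SmartProxy} (today's
    model). Returns the new Pools and the same mapping pointing at Pools."""
    pool_for: Dict[str, SmartProxyPool] = {}
    for proxy in proxies:
        # Assumption: one single-member Pool per proxy, Hostname taken from the URL.
        pool_for[proxy.name] = SmartProxyPool(
            proxy.name, urlparse(proxy.url).hostname, [proxy])
    new_assignments = {
        owner: {feature: pool_for[proxy.name] for feature, proxy in features.items()}
        for owner, features in assignments.items()
    }
    return list(pool_for.values()), new_assignments


p = SmartProxy("proxy", "http://proxy.example.com:8443")
pools, moved = migrate_to_pools([p], {"web01.example.com": {"DNS": p},
                                      "subnet-a": {"DHCP": p}})
print(pools[0].hostname)  # proxy.example.com
```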

How does the use of Smart Proxy Pools sound to you? Do you have any concerns?