Problem:

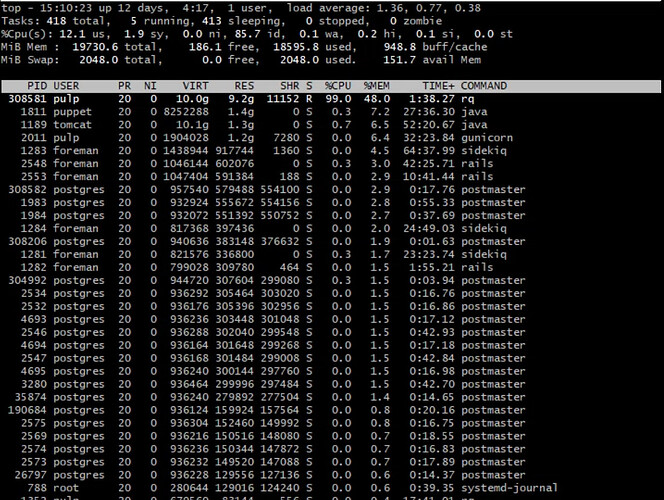

When Katello is synchronising a large Yum repository (Oracle Linux 7 ‘latest’), the server runs out of memory and the ‘rq’ process is killed by the OOM killer.

Expected outcome:

The sync completes without using this much memory.

Foreman and Proxy versions:

foreman-2.4.0-0.4.rc2.el8.noarch

Foreman and Proxy plugin versions:

katello-4.0.0-0.9.rc2.el8.noarch

Distribution and version:

Oracle Linux 8.3

Other relevant data:

The ‘rq’ process performing the sync reaches ~9.3GiB of RAM usage on the 20GiB VM, at which point the OOM killer kills it and the sync does not complete.
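
For anyone who wants to track the worker's memory while a sync runs, a minimal sketch that reads resident memory from `/proc` could look like the following. It assumes a Linux host and that the worker's command line contains `rq` (a loose match that may also catch unrelated processes); the function names are illustrative and not part of Pulp or Katello.

```python
import os
import re


def parse_vmrss_kib(status_text):
    """Return the VmRSS value in KiB from /proc/<pid>/status text, or None."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def rss_by_pid(name_fragment="rq", proc_root="/proc"):
    """Map PID -> resident memory in GiB for processes whose command line
    contains name_fragment (assumption: the Pulp worker shows up as 'rq')."""
    usage = {}
    if not os.path.isdir(proc_root):
        return usage
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # not a process directory
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as fh:
                cmdline = fh.read().replace(b"\0", b" ").decode(errors="replace")
            if name_fragment not in cmdline:
                continue
            with open(os.path.join(proc_root, entry, "status")) as fh:
                rss_kib = parse_vmrss_kib(fh.read())
        except OSError:
            continue  # process exited or is not readable
        if rss_kib is not None:
            usage[int(entry)] = rss_kib / (1024 * 1024)  # KiB -> GiB
    return usage


if __name__ == "__main__":
    for pid, gib in sorted(rss_by_pid().items()):
        print(f"pid {pid}: {gib:.2f} GiB resident")
```

Running it in a loop (for example once a minute during a sync) would show how quickly the worker grows before the OOM killer steps in.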

Hi @John_Beranek,

Can we see your Pulp versions? pip3 list | grep pulp

Sure

pulp-certguard (1.1.0)

pulp-container (2.2.1)

pulp-deb (2.8.0)

pulp-file (1.5.0)

pulp-rpm (3.9.0)

pulpcore (3.9.1)

Can I see some information about your sync from the dynflow console? I’m wondering if we can see at what point specifically it OOM’d. Any information from the expanded action in dynflow would be great. It looks like it was a pulpcore worker that was taking up the memory as ‘rq’.

Also, can I see your createrepo_c versions please?

rpm -qa | grep createrepo_c

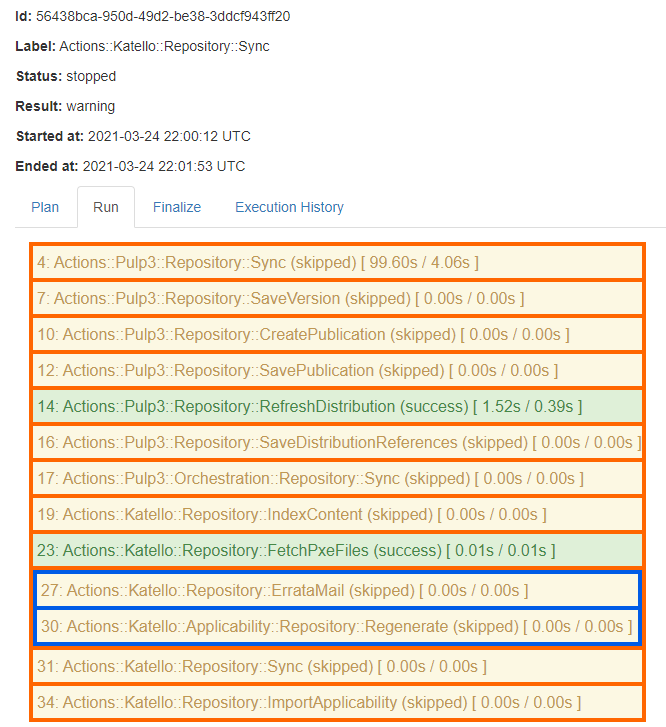

So, it’s dying in action 4 below:

---
pulp_tasks:
- pulp_href: "/pulp/api/v3/tasks/b3d232dd-9617-4bbb-b0ca-a22d1e8ef695/"
  pulp_created: '2021-03-24T22:00:13.867+00:00'
  state: failed
  name: pulp_rpm.app.tasks.synchronizing.synchronize
  logging_cid: da6daf5ef4c04911bc137aa769846b77
  started_at: '2021-03-24T22:00:14.019+00:00'
  finished_at: '2021-03-24T22:01:40.910+00:00'
  error:
    traceback: ''
    description: None
  worker: "/pulp/api/v3/workers/db5703bf-2b17-4ac9-9c6b-33d2b2d28bc6/"
  child_tasks: []
  progress_reports:
  - message: Downloading Metadata Files
    code: downloading.metadata
    state: running
    done: 5
  - message: Downloading Artifacts
    code: downloading.artifacts
    state: running
    done: 0
  - message: Associating Content
    code: associating.content
    state: running
    done: 3999
  - message: Un-Associating Content
    code: unassociating.content
    state: running
    done: 0
  - message: Parsed Comps
    code: parsing.comps
    state: completed
    total: 96
    done: 96
  - message: Parsed Advisories
    code: parsing.advisories
    state: completed
    total: 4914
    done: 4914
  - message: Parsed Packages
    code: parsing.packages
    state: running
    total: 22179
    done: 0
  created_resources:
  - ''
  reserved_resources_record:
  - "/pulp/api/v3/repositories/rpm/rpm/e3989c09-ead9-4b08-8173-356bacb6c306/"
  - "/pulp/api/v3/remotes/rpm/rpm/08aab7ad-6ca9-48a1-b6b0-8e72d0bdb98b/"
create_version: true
task_groups: []
poll_attempts:
  total: 26
  failed: 1
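
To make the unfinished stages easier to spot, the progress reports above can be reduced to the steps that never reached `completed`. This is only a sketch: the report data is transcribed by hand from the output above, and the function names are illustrative.

```python
from datetime import datetime

# Progress reports transcribed from the task output above ("total" is absent
# where the task did not report one).
REPORTS = [
    {"code": "downloading.metadata", "state": "running", "done": 5},
    {"code": "downloading.artifacts", "state": "running", "done": 0},
    {"code": "associating.content", "state": "running", "done": 3999},
    {"code": "unassociating.content", "state": "running", "done": 0},
    {"code": "parsing.comps", "state": "completed", "total": 96, "done": 96},
    {"code": "parsing.advisories", "state": "completed", "total": 4914, "done": 4914},
    {"code": "parsing.packages", "state": "running", "total": 22179, "done": 0},
]


def unfinished(reports):
    """Return (code, done, total) for every report not in state 'completed'."""
    return [
        (r["code"], r["done"], r.get("total"))
        for r in reports
        if r["state"] != "completed"
    ]


def elapsed_seconds(started_at, finished_at):
    """Seconds between two ISO 8601 timestamps as printed in the task output."""
    start = datetime.fromisoformat(started_at)
    end = datetime.fromisoformat(finished_at)
    return (end - start).total_seconds()


if __name__ == "__main__":
    for code, done, total in unfinished(REPORTS):
        print(f"{code}: {done} of {total if total is not None else 'unknown'}")
    runtime = elapsed_seconds(
        "2021-03-24T22:00:14.019+00:00", "2021-03-24T22:01:40.910+00:00"
    )
    print(f"task ran for {runtime:.1f}s before failing")
```

From the timestamps in the output, the task ran for roughly 87 seconds before it failed, with `parsing.packages` still reporting 0 of 22179.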

Also, can I see your createrepo_c versions please?

$ rpm -qa | grep createrepo_c

python3-createrepo_c-0.17.1-1.el8.x86_64

createrepo_c-libs-0.17.1-1.el8.x86_64

Out of interest I threw extra RAM at the server, and the initial repo sync did complete, with me ensuring only one Pulp worker was working at the time. The worker topped out at around 9.8GiB of RAM usage.

I’m having the same issue, though I’m on Katello 3.18.2 and Foreman 2.3.3 and can’t throw more memory at the issue just yet. Trying to sync RHEL7 base repo and even with 8GB of free memory and no other sync running, it will run out of memory and kill the process as John stated, failing to sync.

20gig is a bit low for that. I have OL7/OL8 and some custom repos around. My Katello/Foreman VM requires around 32gig of RAM to survive for at least a few days, then it goes OOM and services need to be restarted. Give it more memory and let foreman restart every 3-4 days.

Synchronising a repository shouldn’t take almost 10GiB of RAM, and no service should need frequent restarts to keep it from running out of memory due to leaks…

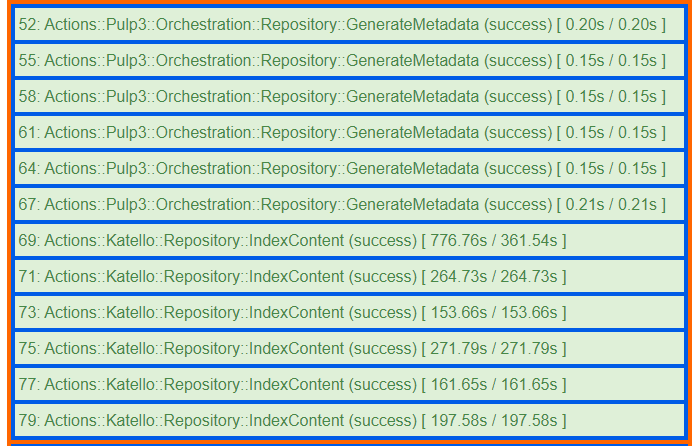

Publishing the CV containing this large repository is also having issues, with the publish pausing part-way through with a proxy error:

[Mon Mar 29 07:53:44.461278 2021] [proxy_http:error] [pid 547921] (20014)Internal error (specific information not available): [client 10.12.34.226:41282] AH01102: error reading status line from remote server httpd-UDS:0

[Mon Mar 29 07:53:44.461370 2021] [proxy:error] [pid 547921] [client 10.12.34.226:41282] AH00898: Error reading from remote server returned by /pulp/api/v3/content/rpm/packages/

[Mon Mar 29 08:18:22.757667 2021] [proxy_http:error] [pid 1301] (20014)Internal error (specific information not available): [client 10.12.34.226:60306] AH01102: error reading status line from remote server httpd-UDS:0

[Mon Mar 29 08:18:22.768418 2021] [proxy:error] [pid 1301] [client 10.12.34.226:60306] AH00898: Error reading from remote server returned by /pulp/api/v3/content/rpm/packages/

[Mon Mar 29 08:22:30.693893 2021] [proxy_http:error] [pid 1297] (20014)Internal error (specific information not available): [client 10.12.34.226:60392] AH01102: error reading status line from remote server httpd-UDS:0

[Mon Mar 29 08:22:30.693964 2021] [proxy:error] [pid 1297] [client 10.12.34.226:60392] AH00898: Error reading from remote server returned by /pulp/api/v3/content/rpm/packages/

10.12.34.226 - - [29/Mar/2021:07:52:51 +0000] "GET /pulp/api/v3/content/rpm/packages/?arch__ne=src&fields=pulp_href&limit=2000&offset=0&repository_version=%2Fpulp%2Fapi%2Fv3%2Frepositories%2Frpm%2Frpm%2Fe3989c09-ead9-4b08-8173-356bacb6c306%2Fversions%2F1%2F HTTP/1.1" 502 341 "-" "OpenAPI-Generator/3.8.0/ruby"

The /pulp/api/v3/content/rpm/packages/ call is timing out at the 600s Apache reverse proxy timeout period, so I’m going to try upping that period.
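
The query string in the access log line above is URL-encoded, which makes it hard to read what was actually requested. A small sketch using only the Python standard library decodes it; the request target is copied from the log line, and `decode_query` is just an illustrative helper name.

```python
from urllib.parse import parse_qs, urlsplit

# Request target copied from the Apache access log line above.
REQUEST_TARGET = (
    "/pulp/api/v3/content/rpm/packages/?arch__ne=src&fields=pulp_href"
    "&limit=2000&offset=0&repository_version=%2Fpulp%2Fapi%2Fv3%2Frepositories"
    "%2Frpm%2Frpm%2Fe3989c09-ead9-4b08-8173-356bacb6c306%2Fversions%2F1%2F"
)


def decode_query(target):
    """Return the query parameters of a request target as a flat dict,
    keeping the first value of each parameter."""
    query = urlsplit(target).query
    return {key: values[0] for key, values in parse_qs(query).items()}


if __name__ == "__main__":
    for key, value in decode_query(REQUEST_TARGET).items():
        print(f"{key} = {value}")
```

Decoded, the request asks for the first page (limit 2000, offset 0) of non-source packages in version 1 of the repository, returning only the `pulp_href` field.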

@John_Beranek I tested it myself on a small test system (2 cores, ~15GB RAM, puppetserver disabled) and I noticed rq maxing out at 8.2 GB. I’ve created a Pulp issue (Issue #8467: RQ worker takes up a lot of RAM when syncing OL7 repository - RPM Support - Pulp) so it can get some investigation.