Webpack Build

This RFC addresses issues that developers have raised with the current webpack workflow. A number of solutions have been proposed in response. As I understand them, the proposals below describe how we can modify the process to reduce time spent debugging and to increase UI developer efficiency, given how quickly the webpack ecosystem changes. The goal of this RFC is to lay out the changes that have been discussed, collect data and feedback from the resulting conversations, converge on an agreed-upon set of solutions, and then implement them.

Caveat: It is highly likely that I have missed some nuances, proposals, and issues that developers have raised regarding webpack. Please add them to the discussion, and I will keep the RFC up to date as changes come in.

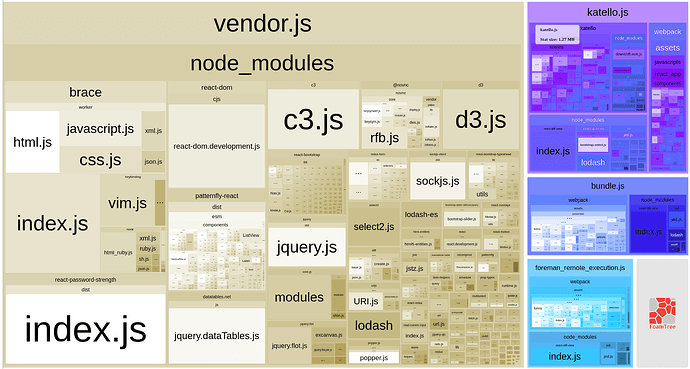

Node Module Packaging Proposals

Option 1: Node modules tarball as a Foreman source

This option would create a new node_modules tarball every time a new Foreman source is created (e.g. as part of test_develop or a specific release). The node_modules tarball would be treated like the Foreman source itself and included in the RPM source. The 1-2 node modules that have binary components would still have to be packaged independently. Plugins that use webpack would need to provide their own node_modules tarball alongside their gem source.
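As a rough illustration of the tarball step in Option 1, here is a minimal Python sketch. It assumes `npm install` has already populated `node_modules` in the source checkout. The function name `build_node_modules_tarball` and the output file name are hypothetical and not part of any existing Foreman tooling.

```python
import tarfile
from pathlib import Path


def build_node_modules_tarball(source_dir: str, output_path: str) -> list[str]:
    """Pack <source_dir>/node_modules into a gzip-compressed tarball.

    Returns the sorted list of member names written to the archive.
    Assumption: node_modules was already populated by `npm install`.
    """
    node_modules = Path(source_dir) / "node_modules"
    if not node_modules.is_dir():
        raise FileNotFoundError(f"{node_modules} not found; run `npm install` first")

    with tarfile.open(output_path, "w:gz") as archive:
        # Store everything under a top-level "node_modules/" directory so the
        # tarball can be unpacked directly next to the Foreman source.
        archive.add(str(node_modules), arcname="node_modules")

    # Re-open the archive to report what was actually written.
    with tarfile.open(output_path, "r:gz") as archive:
        return sorted(archive.getnames())
```

A release job could call something like `build_node_modules_tarball(".", "../foreman-node_modules.tar.gz")` right after generating the Foreman source and list the result as an additional source in the spec file. Write the output outside the `node_modules` directory so the archive does not try to include itself.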

Pros

- Drops nearly all NPM packages

- Any given Foreman source will always contain the latest node_modules allowed by package.json

Cons

- The node_modules tar is ~50MB

- Storage of the node_modules tar will require enough space on:

  - Koji

  - Jenkins

- Each SRPM will be ~50-60MB

Option 2: Node modules archive RPM

This option would extract node_modules into its own RPM, which would serve as an archive that is updated strategically based on changes to package.json. This would reduce the packaging of node modules to 1-3 packages (accounting for those with binaries), and the RPM could be updated by sourcing modules directly from npm install. Plugins would either package the few additional modules they require or create their own node_modules RPM.
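One way to decide when the node_modules RPM needs a rebuild is to fingerprint only the dependency sections of package.json. The sketch below is an assumption about how such a check could look, not an existing part of the build. The helper names `dependency_fingerprint` and `needs_rebuild` are made up for illustration.

```python
import hashlib
import json


def dependency_fingerprint(package_json_text: str) -> str:
    """Return a SHA-256 hex digest over the dependency sections only."""
    manifest = json.loads(package_json_text)
    # Ignore fields such as "name" or "version" that do not affect node_modules.
    relevant = {
        section: manifest.get(section, {})
        for section in ("dependencies", "devDependencies")
    }
    # Sorting keys makes the digest independent of key order in the file.
    canonical = json.dumps(relevant, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def needs_rebuild(old_package_json: str, new_package_json: str) -> bool:
    """True when the dependency sections differ between two package.json texts."""
    return dependency_fingerprint(old_package_json) != dependency_fingerprint(
        new_package_json
    )
```

A CI job could store the fingerprint alongside the last built RPM and trigger a rebuild only when it changes. This limits how often the archive, and therefore the storage it consumes, is refreshed.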

Pros

- Drops nearly all individual NPM packaging

- Allows for node_modules to be in lockstep with core

Cons

- Storage is a concern, but how much depends on how often the node_modules RPM is updated

- Requires a rebuild process whenever package.json is updated

- Plugins still need a viable solution

Webpack Compilation Proposal

Today, the Foreman RPM runs webpack compilation based on the available sources and provides a macro for plugins. This makes it hard to replicate locally what the RPM build process is doing when tracking down issues. The proposal is to move webpack compilation out of the RPM/Deb steps and into a tool, such as a script or Docker container, that can easily replicate the compilation environment for developers.

With this change, we would compile webpack outside of the RPM and Deb build environment, commit the compiled artifacts to the Foreman and plugin sources, and include them in the RPM. This does not remove the need for the packaging change proposals. Even if we compile webpack outside of the RPM/Deb environment, we still need to provide, at a minimum, the set of node modules used for compilation as sources (e.g. by including them in the Foreman SRPM). Compiling outside of the RPM/Deb environments would, however, let us avoid packaging the binary node modules (e.g. sass).
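To make the "script or Docker container" idea more concrete, the following Python sketch assembles a `docker run` invocation that installs node modules and runs webpack inside a container. Everything specific here is an assumption for illustration: the helper name `container_build_command`, the image name, the `/src` mount point, and the default webpack config path would all need to match whatever the project actually settles on.

```python
import shlex
from pathlib import Path


def container_build_command(
    source_dir: str,
    image: str,
    webpack_config: str = "config/webpack.config.js",  # assumed config location
) -> list[str]:
    """Build the argv for compiling webpack assets inside a container."""
    source = Path(source_dir).resolve()
    # Both steps run in one shell so webpack sees the freshly installed modules.
    build_steps = f"npm install && npx webpack --config {shlex.quote(webpack_config)}"
    return [
        "docker", "run", "--rm",
        "-v", f"{source}:/src",  # mount the checkout into the container
        "-w", "/src",            # run the build from the mounted checkout
        image,
        "sh", "-c", build_steps,
    ]
```

A developer could then run `subprocess.run(container_build_command(".", "example/node-build:latest"), check=True)` to reproduce the same compilation that produces the committed artifacts, where the image tag is a placeholder.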