As you may have heard, there are some changes coming soon to Dynflow.

Current state

In production deployments, there is currently the foreman-tasks/dynflowd service. This service runs an executor. The executor consists of two major logical parts: the orchestrator and the worker. The worker is in fact a collection of worker threads, but let’s treat them as a group to keep things simple.

Current issues

There were three major issues with this approach: memory hogging, scaling, and executor utilization.

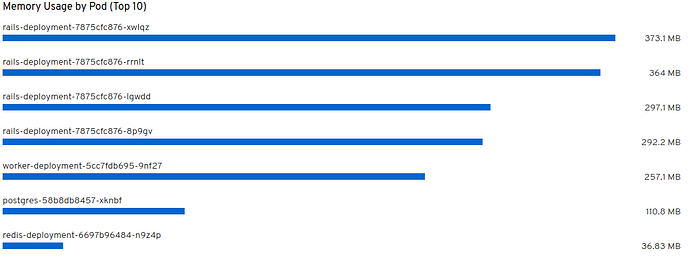

Memory hogging

The executor slowly consumed more and more memory, and the only way to reclaim it was to restart the entire process. However, the orchestrator part kept quite a lot of state in memory, and restarting the process meant losing that state, which caused problems of its own.

Scaling

The issue with scaling was that we could not scale the workers independently of the rest of the executor. We could (and did) spawn more worker threads in an executor or spawn more executors in their entirety, but there was a catch. Spawning more threads only helps up to a certain point, after which Ruby’s global interpreter lock (GIL) kicks in and prevents the additional threads from running Ruby code in parallel.

Executor utilization

A job usually consists of several smaller execution items, called steps. When an executor accepted a job, it always had to process it completely. There was no way to share its steps with other executors’ workers. This could lead to situations where one executor was heavily loaded with tasks while another was mostly idle.
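To make the utilization problem concrete, here is a toy Python model. It is not Dynflow code. It compares two executors that each own whole jobs against two workers that pull individual steps from one shared queue. The model rests on several assumptions: every step costs the same, jobs are handed to executors round-robin, and the ordering between steps of the same job is ignored. The function names are hypothetical.

```python
from collections import deque


def pinned_loads(job_sizes, executors):
    """Old model: each job (given as its number of steps) stays on one executor."""
    loads = [0] * executors
    for i, steps in enumerate(job_sizes):
        # Assumption: jobs are assigned to executors round-robin.
        loads[i % executors] += steps
    return loads


def shared_loads(job_sizes, workers):
    """New model: all steps go into one shared queue; the least-busy worker takes the next one."""
    queue = deque(step for steps in job_sizes for step in range(steps))
    loads = [0] * workers
    while queue:
        queue.popleft()
        idlest = loads.index(min(loads))  # every step is assumed to cost one unit
        loads[idlest] += 1
    return loads


jobs = [8, 1, 7, 2]  # step counts of four hypothetical jobs
print(pinned_loads(jobs, 2))  # [15, 3]
print(shared_loads(jobs, 2))  # [9, 9]
```

With whole jobs pinned, one executor ends up with 15 units of work and the other with 3. Sharing steps evens this out to 9 and 9. In practice, steps within a job often have to run in order, so the real gain is smaller than this idealized model suggests.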

Coming changes

These issues were hard to address without making some major changes. We decided to let go of some of Dynflow’s responsibilities and make it focus on orchestration, while leveraging another project for the “raw” background processing. The original idea was to use ActiveJob as an interface and let users decide which backend they wanted to use. After initial testing, we decided to be more opinionated and use Sidekiq directly, which promised better performance when the Sidekiq-specific API is used.

To address the previously described issues, we decided to break the executor apart. What used to be a single executor is split into three components. The first is Redis, which is used for communication between the other two: the orchestrator and the workers.

The orchestrator’s role is to decide what should be run and when. Because of its stateful nature, exactly one instance has to be running at a time. As mentioned earlier, it is backed by Sidekiq and listens for events on the dynflow_orchestrator queue. Eventually, the orchestrator shouldn’t need to load the entire Rails environment, which should make it lightweight and quick to start.
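One rough way to picture this split is the single-process Python simulation below. It is not Dynflow or Sidekiq code. An in-memory `Broker` class stands in for Redis. The `Orchestrator` consumes events from the dynflow_orchestrator queue named above and keeps each job’s remaining steps. A stateless `worker_tick` function performs one step and reports back. The class and function names, the event tuples, and the `default` worker queue are all hypothetical.

```python
from collections import defaultdict, deque


class Broker:
    """In-memory stand-in for Redis: named FIFO queues (illustration only)."""

    def __init__(self):
        self.queues = defaultdict(deque)

    def push(self, queue, message):
        self.queues[queue].append(message)

    def pop(self, queue):
        q = self.queues[queue]
        return q.popleft() if q else None


class Orchestrator:
    """The single stateful component: owns every job's plan and decides what runs next."""

    QUEUE = "dynflow_orchestrator"  # queue name taken from the post
    WORKER_QUEUE = "default"        # hypothetical worker queue name

    def __init__(self, broker):
        self.broker = broker
        self.plans = {}     # job id -> steps not yet dispatched (state lost on restart)
        self.finished = []  # job ids in completion order

    def handle(self, event):
        kind, job_id, payload = event
        if kind == "plan":
            self.plans[job_id] = deque(payload)
        remaining = self.plans.get(job_id)
        if remaining:
            # Dispatch only the next step; steps of one job run one after another.
            self.broker.push(self.WORKER_QUEUE, (job_id, remaining.popleft()))
        elif job_id in self.plans:
            del self.plans[job_id]
            self.finished.append(job_id)


def worker_tick(broker, queue, log):
    """A worker holds no job state: pop one step, perform it, report back."""
    message = broker.pop(queue)
    if message is None:
        return False
    job_id, step = message
    log.append(f"{job_id}:{step}")  # stands in for actually performing the step
    broker.push(Orchestrator.QUEUE, ("step_finished", job_id, step))
    return True


broker = Broker()
orchestrator = Orchestrator(broker)
log = []
broker.push(Orchestrator.QUEUE, ("plan", "job-1", ["prepare", "run"]))
broker.push(Orchestrator.QUEUE, ("plan", "job-2", ["notify"]))

busy = True
while busy:
    busy = False
    event = broker.pop(Orchestrator.QUEUE)
    if event is not None:
        orchestrator.handle(event)
        busy = True
    if worker_tick(broker, Orchestrator.WORKER_QUEUE, log):
        busy = True

print(log)                    # ['job-1:prepare', 'job-2:notify', 'job-1:run']
print(orchestrator.finished)  # ['job-2', 'job-1']
```

Steps from different jobs interleave on the same worker queue, while all the bookkeeping (the `plans` dict) stays in the one orchestrator. That is why only a single orchestrator instance may run. In this toy, a worker could be replaced between any two ticks without losing anything.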

The other functional piece is the worker. A worker in this case means a single Sidekiq process with many threads. The worker’s role is to actually perform the jobs, and it should keep only the bare minimum of state. Workers can be scaled by increasing the number of threads per process or, horizontally, by spawning more processes. We will also be able to dedicate a worker process to a certain queue, which lets us ensure that priority tasks won’t get blocked by others.
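The benefit of dedicating workers to queues can be illustrated with another toy Python model. Again, this is not Sidekiq code. The queue names `default` and `priority` are hypothetical, and the one-task-per-tick scheduling is an assumption. The model compares two setups for an urgent task: in one, it sits behind a backlog of ten bulk tasks in a shared queue; in the other, it goes on a queue that has its own worker.

```python
from collections import deque


def simulate(queues, workers):
    """Run until all queues are empty; return {task: tick at which it was taken}.

    Hypothetical model: every task takes one tick, and in each tick every worker
    takes one task from the first non-empty queue in its own list.
    """
    taken = {}
    tick = 0
    while any(queues.values()):
        tick += 1
        progressed = False
        for worker_queues in workers:
            for name in worker_queues:
                if queues.get(name):
                    taken[queues[name].popleft()] = tick
                    progressed = True
                    break
        if not progressed:
            raise RuntimeError("some queue has no worker assigned to it")
    return taken


bulk = [f"bulk-{i}" for i in range(10)]

# Without dedicated queues: the urgent task waits behind the whole backlog.
shared = simulate({"default": deque(bulk + ["urgent"])},
                  workers=[["default"], ["default"]])

# With one worker dedicated to a hypothetical "priority" queue.
dedicated = simulate({"default": deque(bulk), "priority": deque(["urgent"])},
                     workers=[["default"], ["priority"]])

print(shared["urgent"], dedicated["urgent"])  # 6 1
```

The trade-off is also visible here. Once its queue is empty, the dedicated worker sits idle, so the bulk backlog takes 10 ticks instead of 5. Since a Sidekiq process can be told which queues to poll, such a worker could instead fall back to other queues after the priority one. Choosing between the two setups is a balance between latency and throughput.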

To limit the impact of these changes on existing deployments, the new model lives side by side with the old one, and it is possible to switch between them. This allows us to keep using Dynflow “the old way” where needed, for example when using Dynflow on the Smart Proxy for remote execution and Ansible.

This is still a work in progress, but we’re hoping to get this out soon.

TL;DR:

The current problems

- memory hogging

- scaling

- executor utilization

The solution

- split the executor into an orchestrator and a worker

- instead of the dynflowd service, there will be Redis, dynflow-orchestrator and (possibly several) dynflow-workers

The good parts

- we’ll be able to scale workers independently

- we’ll be able to restart workers without having to worry about losing orchestrator state

- we’ll be able to dedicate workers to certain queues (say host updates, rex…)

To be done

- deployment related changes (packaging, installer)

- some tasks don’t fit into the new model well (looking at you, LOCE/EQM)

Side note

Currently we use Dynflow as an ActiveJob backend. We don’t have plans to change that in the near future.